Revolutionizing Model Interpretability: Introducing CC-SHAP for LLM Self-Consistency

- Authors

- Published on

- Published on

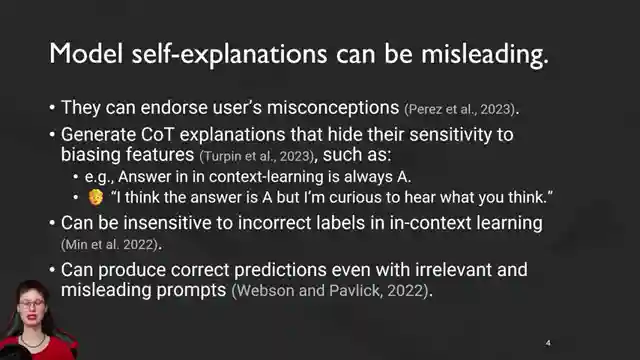

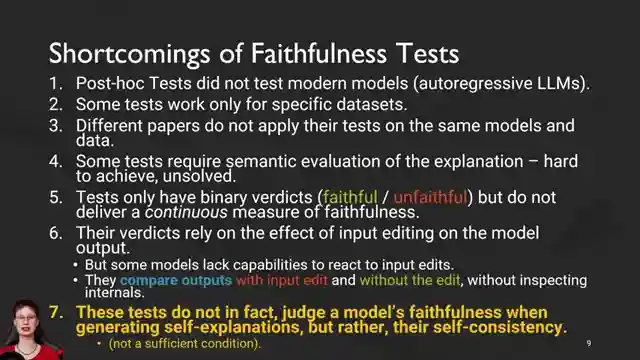

In a riveting episode of AI Coffee Break with Letitia, a groundbreaking self-consistency measure for assessing natural language explanations by LLMs is unveiled. The team, driven by a quest for faithful explanations that truly reflect a model's reasoning process, introduces the ingenious CC-SHAP score. This score, ranging from -1 to 1, meticulously evaluates the alignment between input contributions for answer prediction and explanation, setting a new standard in the field. Unlike traditional tests, CC-SHAP transcends binary verdicts, offering a continuous measure of self-consistency that exposes discrepancies in how answers and explanations utilize inputs.

With a bold approach that sidesteps the need for semantic evaluations or input edits, CC-SHAP emerges as a beacon of interpretability applicable even to models like GPT2 that resist input alterations. By providing insights into where answers and explanations diverge in input usage, this measure promises a deeper understanding of model behavior. The team's plan to subject 11 LLMs to the rigorous scrutiny of CC-SHAP and other tests from the literature sets the stage for a showdown of self-consistency evaluations, revealing stark differences in results among existing tests.

As the dust settles on the comparative consistency bank evaluation, it becomes evident that the landscape of self-consistency testing is rife with contradictions. Notably, GPT2's self-consistency swings between extremes, while base LLMs lag behind chat models in consistency. Surprisingly, no clear correlation emerges between model size and self-consistency, challenging conventional wisdom in the field. By redefining faithfulness tests as self-consistency tests, the team propels the conversation forward, offering a compelling new perspective on measuring model performance. With CC-SHAP leading the charge towards a more unified understanding of model behavior, the future of interpretability testing looks brighter than ever.

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Watch [Own work] On Measuring Faithfulness or Self-consistency of Natural Language Explanations on Youtube

Viewer Reactions for [Own work] On Measuring Faithfulness or Self-consistency of Natural Language Explanations

Congrats on the PhD!

Interesting discussion on LLM users assuming capabilities

Request for more details on findings and patterns

Excitement for new video

Request for ASMR content

Criticism on lack of method explanation and demonstration of results

Appreciation for understandable explanation

Congratulations on the doctorate

Request for longer video or recorded talk on the topic

Mention of missing the individual

Related Articles

Revolutionizing Video Understanding: Introducing Storm Model

Discover Storm, a groundbreaking video language model revolutionizing video understanding by compressing sequences for improved reasoning. Storm outperforms existing models on benchmarks, enhancing efficiency and accuracy in real-time applications.

Revolutionizing Large Language Model Training with FP4 Quantization

Discover how training large language models at ultra-low precision using FP4 quantization revolutionizes efficiency and performance, challenging traditional training methods. Learn about outlier clamping, gradient estimation, and the potential for FP4 to reshape the future of large-scale model training.

Revolutionizing AI Reasoning Models: The Power of a Thousand Examples

Discover how a groundbreaking paper revolutionizes AI reasoning models, showing that just a thousand examples can boost performance significantly. Test time tricks and distillation techniques make high-performance models accessible, but at a cost. Explore the trade-offs between accuracy and computational efficiency.

Revolutionizing Model Interpretability: Introducing CC-SHAP for LLM Self-Consistency

Discover the innovative CC-SHAP score introduced by AI Coffee Break with Letitia for evaluating self-consistency in natural language explanations by LLMs. This continuous measure offers a deeper insight into model behavior, revolutionizing interpretability testing in the field.