Revolutionizing Large Language Model Training with FP4 Quantization

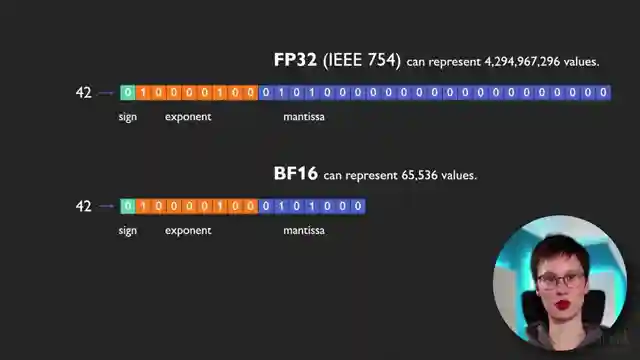

In this exhilarating episode from AI Coffee Break with Letitia, the team delves into the thrilling world of training large language models at low precision. They explore a groundbreaking new paper that pushes the boundaries of what was once deemed possible in ultra-low precision training. Imagine squeezing each weight and activation into just four bits during massive LLM training, achieving performance levels that rival 16-bit precision. It's a game-changer, a paradigm shift that promises faster, cheaper, and greener training methods for these behemoth models.

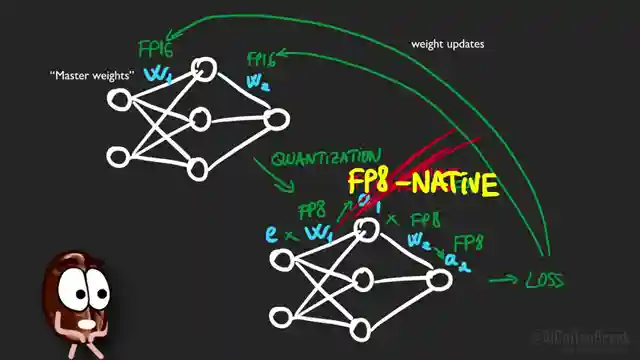

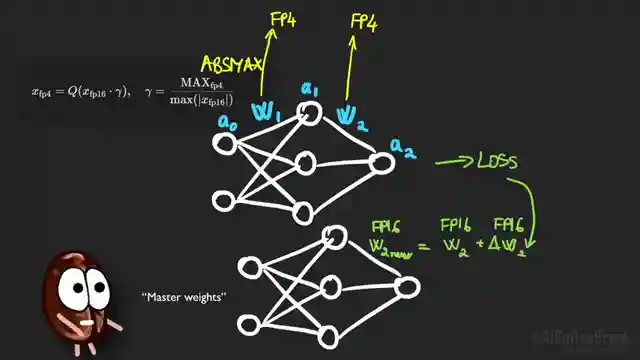

The heart of this revolutionary approach lies in optimizing large language model training using FP4 quantization. By performing the matrix multiplications in FP4, the team targets large gains in speed and efficiency. But it's not all smooth sailing: with only four bits, each number can take one of just 16 bit patterns, so reducing precision to FP4 introduces quantization errors that could spell disaster for training. However, through a series of ingenious strategies, the researchers not only make FP4 training work but also reach accuracy on par with 16-bit precision in their benchmarks.
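To make the quantization step concrete, here is a minimal sketch of simulated FP4 quantization in plain Python. It assumes the E2M1 layout (1 sign, 2 exponent and 1 mantissa bit, giving the magnitudes 0, 0.5, 1, 1.5, 2, 3, 4 and 6), one absmax scale per tensor, and round-to-nearest. These choices and the helper names (`quantize_fp4`, `round_to_e2m1`, `matmul`, `max_abs_error`) are illustrative assumptions, not the paper's kernels. Values are snapped to the FP4 grid but multiplied as ordinary floats, so the code shows the quantization error, not the speedup.

```python
# Minimal sketch: simulated ("fake") FP4 quantization with per-tensor absmax scaling.
# Assumptions (ours, not the paper's): E2M1 layout, one scale per tensor,
# round-to-nearest, values kept as Python floats after snapping to the FP4 grid.

# Non-negative magnitudes representable in E2M1 (1 sign, 2 exponent, 1 mantissa bit).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = E2M1_GRID[-1]


def round_to_e2m1(v):
    """Round a scalar in [-6, 6] to the nearest E2M1 value (ties go to the smaller magnitude)."""
    mag = min(E2M1_GRID, key=lambda g: abs(abs(v) - g))
    return mag if v >= 0 else -mag


def quantize_fp4(matrix):
    """Snap a 2-D list of floats onto the scaled FP4 grid; return (dequantized values, scale)."""
    absmax = max(abs(v) for row in matrix for v in row)
    scale = absmax / E2M1_MAX if absmax > 0 else 1.0  # largest |value| maps to 6
    deq = [[round_to_e2m1(v / scale) * scale for v in row] for row in matrix]
    return deq, scale


def matmul(a, b):
    """Plain matrix product of two 2-D lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def max_abs_error(a, b):
    """Largest element-wise absolute difference between two same-shaped 2-D lists."""
    return max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


if __name__ == "__main__":
    W = [[0.12, -0.50, 0.33], [0.90, -0.07, 0.25]]  # toy "weights" (2x3)
    X = [[1.0, 0.2], [-0.4, 0.8], [0.6, -1.2]]      # toy "activations" (3x2)

    Wq, sw = quantize_fp4(W)
    Xq, sx = quantize_fp4(X)

    exact = matmul(W, X)
    approx = matmul(Wq, Xq)  # what an FP4 matrix multiplication would approximate
    print("weight scale:", sw, "activation scale:", sx)
    print("max |error| of the FP4 product:", max_abs_error(exact, approx))
```

Because a single large value sets the scale for the whole tensor, one outlier can push most other entries to zero, which is why activation outliers need special handling.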

The adrenaline doesn't stop there: the authors tackle the challenge of quantizing activations, which proves even more daunting than dealing with weights because activations contain rare, extreme outliers. By clamping these outliers before quantization and compensating for the clipped part, they wrangle the unruly activations into submission, ensuring stability and accuracy in the training process.

To top it off, a differentiable gradient estimator comes into play during the backward pass, giving a better estimate of how gradients flow through the non-differentiable quantization step and paving the way for ultra-low precision training to shine. The results speak for themselves: accuracy competitive with 16-bit precision, setting the stage for a future where FP4 could reign supreme in large-scale model training.
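The two techniques described above can be sketched in plain Python. This is a hedged illustration, not the paper's implementation: the clamp threshold (a magnitude quantile), the per-tensor absmax scaling, and especially the power-function surrogate in `dge_grad` (with hypothetical parameters `k` and `max_grad`) are our own assumptions. Clamping keeps the FP4 scale small for ordinary values, while the residual `x - clamp(x)`, non-zero only at the few outliers, is multiplied in full precision and added back. For the backward pass, `dge_grad` returns the derivative of a smooth stand-in for FP4 rounding instead of a constant gradient.

```python
# Minimal sketch of outlier clamping + compensation and a differentiable gradient
# estimator. Assumptions (ours, not the paper's exact recipe): E2M1 FP4 grid,
# per-tensor absmax scaling, quantile-based clamping, power-function surrogate.
import math

E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def fp4(v):
    """Round a scalar in [-6, 6] to the nearest E2M1 value."""
    mag = min(E2M1_GRID, key=lambda g: abs(abs(v) - g))
    return math.copysign(mag, v)


def quantize(xs):
    """Per-tensor absmax FP4 fake-quantization of a flat list."""
    absmax = max(abs(v) for v in xs) or 1.0
    scale = absmax / E2M1_GRID[-1]
    return [fp4(v / scale) * scale for v in xs]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# --- Outlier clamping and compensation (forward pass) ---
def clamp_and_compensate(xs, quantile=0.9):
    """Clamp |x| at a magnitude quantile, FP4-quantize the clamped tensor,
    and return it with the sparse full-precision residual x - clamp(x)."""
    mags = sorted(abs(v) for v in xs)
    thr = mags[int(quantile * (len(mags) - 1))]
    clamped = [max(-thr, min(thr, v)) for v in xs]
    residual = [v - c for v, c in zip(xs, clamped)]  # non-zero only at outliers
    return quantize(clamped), residual


# --- Differentiable gradient estimator (backward pass) ---
def dge_grad(v, k=5.0, max_grad=3.0):
    """Derivative of a smooth surrogate for rounding v (already in FP4 units).
    Inside each grid interval [lo, hi], with t = 2(|v| - lo)/(hi - lo) - 1,
    f = lo + (hi - lo)/2 * (1 + sign(t)|t|^(1/k)), so f' = (1/k)|t|^(1/k - 1).
    k = 1 gives f' = 1 (straight-through); the result is clipped at max_grad."""
    a = abs(v)
    if a > E2M1_GRID[-1]:
        return 0.0  # outside the representable range: treat as saturated
    lo, hi = next((l, h) for l, h in zip(E2M1_GRID, E2M1_GRID[1:]) if l <= a <= h)
    t = 2.0 * (a - lo) / (hi - lo) - 1.0
    exponent = 1.0 / k - 1.0
    if t == 0.0 and exponent < 0:
        return max_grad  # the derivative diverges at the interval midpoint
    return min(max_grad, abs(t) ** exponent / k)


if __name__ == "__main__":
    x = [0.1, -0.2, 0.15, 0.05, -0.12, 0.08, 0.3, -0.24, 0.18, 12.0]  # one big outlier
    w = [0.5, -0.3, 0.8, 0.1, -0.6, 0.4, 0.2, -0.7, 0.9, 0.05]
    exact = dot(x, w)
    naive = dot(quantize(x), w)  # the outlier inflates the scale
    xq, res = clamp_and_compensate(x)
    occ = dot(xq, w) + dot(res, w)  # FP4 product + high-precision correction
    print("exact:", exact, "naive FP4:", naive, "clamp+compensate:", occ)
    print("surrogate gradients:", [round(dge_grad(v), 3) for v in (0.75, 1.0, 2.9, 7.0)])
```

With `k = 1` the surrogate is the identity, so the estimator reduces to the straight-through gradient of 1; larger `k` pushes the surrogate toward hard rounding, so the estimated gradient varies within each quantization interval instead of being constant.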

Image copyright YouTube

Watch "4-Bit Training for Billion-Parameter LLMs? Yes, Really." on YouTube

Viewer Reactions for "4-Bit Training for Billion-Parameter LLMs? Yes, Really."

Viewer excited to learn more about the topic covered in the video

Positive feedback on the video and surprise at the results obtained with low precision

Curiosity about storing unquantized master copies in RAM

Speculation about switching from multiplication to table lookup

Interest in further reducing precision to 2 bit or 1 bit

Question about the computation ratio between backward and forward pass

Mixed opinions on the practicality and effectiveness of the method

Concerns about trading off precision for efficiency in large language models

Mention of testing results in Portuguese

Mention of ethical concerns and lack of connection to the research subject in the paper
