Python Wikipedia RAG System Tutorial with LlamaIndex on NeuralNine

Today on NeuralNine, we build a Wikipedia-powered RAG (retrieval-augmented generation) system in Python using LlamaIndex. Instead of wiring up every step by hand, we let LlamaIndex do the heavy lifting: it loads selected Wikipedia articles, retrieves the passages relevant to a question, and passes them to a language model that generates an answer grounded in that context. The result works like an encyclopedia you can question in plain language.

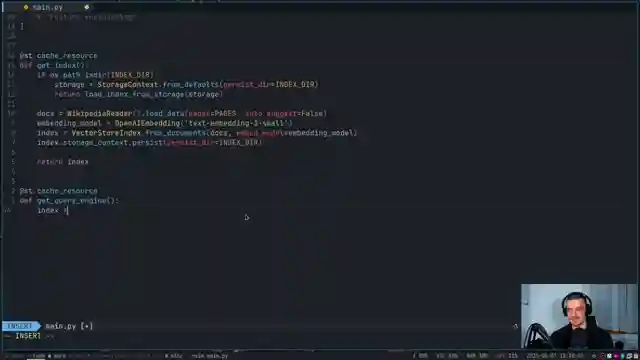

The process is simple. We pick a handful of Wikipedia articles, LlamaIndex converts them into high-dimensional vectors (embeddings), and at query time it finds the stored vectors closest to the question. Because LlamaIndex handles this data processing behind the scenes, the approach is accessible even to beginners: with just a few lines of code, we have a working RAG system built on Wikipedia content.
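LlamaIndex performs the vectorize-and-search step for us (in recent versions, `VectorStoreIndex.from_documents(...)` builds the index with a neural embedding model). To make the idea concrete, here is a dependency-free toy sketch. It is an assumption-laden stand-in, not LlamaIndex's implementation: the "embedding" is just a word-count vector, `ToyVectorIndex` is a hypothetical class, and the article texts are short made-up snippets rather than real Wikipedia content.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a sparse term-frequency vector mapping each word to its count.

    Real RAG systems use a neural embedding model instead; this only illustrates
    the idea of turning text into a vector that can be compared numerically.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorIndex:
    """Hypothetical in-memory index: one vector per article, searched by cosine similarity."""

    def __init__(self, documents: dict[str, str]) -> None:
        # Embed every article once up front, as a vector index does when it is built.
        self.vectors = {title: embed(text) for title, text in documents.items()}

    def retrieve(self, query: str, top_k: int = 2) -> list[tuple[str, float]]:
        """Return the top_k (title, score) pairs most similar to the query."""
        query_vec = embed(query)
        scored = [(title, cosine(query_vec, vec)) for title, vec in self.vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


if __name__ == "__main__":
    # Short, made-up stand-ins for Wikipedia article text.
    articles = {
        "Python": "Python is a high-level programming language created by Guido van Rossum.",
        "Llama": "The llama is a domesticated South American camelid used as a pack animal.",
        "Wikipedia": "Wikipedia is a free online encyclopedia written by volunteers.",
    }
    index = ToyVectorIndex(articles)
    # The "Python" entry shares the most words with this question, so it ranks first.
    print(index.retrieve("Who created the Python programming language?", top_k=1))
```

Word counts only match exact words, so they miss synonyms and paraphrases; that gap is exactly why real systems use learned embeddings, while the ranking logic (embed once, compare by cosine similarity, keep the top matches) stays the same.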

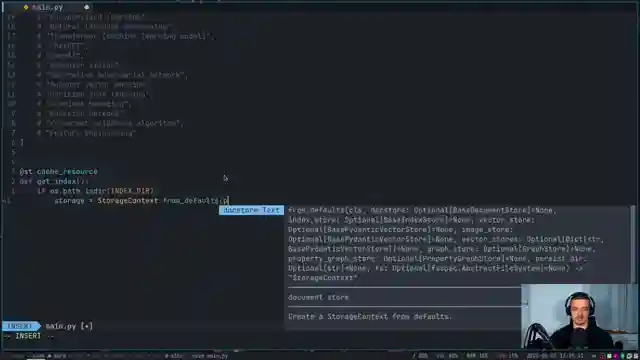

Installation is straightforward: the main packages are `streamlit` and `llama-index`, plus `python-dotenv`, which loads API keys from a `.env` file so they are not hard-coded in the source. NeuralNine also walks through the additional LlamaIndex integrations the project needs, including an embeddings package and the Wikipedia reader. With these dependencies installed, Wikipedia content can be pulled straight into the RAG system.
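In the project itself, this step is a call to `load_dotenv()` from python-dotenv (`from dotenv import load_dotenv`). The sketch below shows roughly what that call does, using only the standard library. It is a simplification: `load_env_file` is a hypothetical name, it ignores python-dotenv features such as variable expansion and multi-line values, and the `OPENAI_API_KEY` variable name is an assumption, since the key you need depends on the LLM provider.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env", override: bool = False) -> dict[str, str]:
    """Hypothetical minimal .env loader: copy KEY=VALUE pairs into os.environ.

    Returns the parsed pairs. Existing environment variables are kept unless
    override=True (load_dotenv() from python-dotenv also defaults to not overriding).
    """
    parsed: dict[str, str] = {}
    env_path = Path(path)
    if not env_path.is_file():
        return parsed  # a missing file is silently ignored here
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments, and lines without '='
        if line.startswith("export "):
            line = line[len("export "):]
        key, value = line.split("=", 1)
        key, value = key.strip(), value.strip()
        # Drop one pair of matching surrounding quotes, e.g. "abc" or 'abc'.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        parsed[key] = value
        if override or key not in os.environ:
            os.environ[key] = value
    return parsed


if __name__ == "__main__":
    load_env_file()
    # OPENAI_API_KEY is an assumed variable name; use whatever your LLM provider expects.
    print("API key found" if os.environ.get("OPENAI_API_KEY") else "No API key set")
```

Keeping the key in `.env` (and listing that file in `.gitignore`) means the code can be shared or committed without exposing credentials.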

With the index created and the query engine set up, we build the user interface in Streamlit: a simple page where users type a question and receive an answer grounded in the retrieved Wikipedia context. Because LlamaIndex automates indexing and retrieval, the whole application stays short and readable. The tutorial is less about one specific app than about showing how little code a working retrieval pipeline requires.
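A LlamaIndex query engine (obtained with `index.as_query_engine()`) hides the step where retrieved passages are inserted into a prompt for the language model. The sketch below spells that step out under clearly stated assumptions: `PROMPT_TEMPLATE`, `retrieve`, `build_prompt`, and `answer_question` are hypothetical names, the template wording is invented rather than LlamaIndex's built-in prompt, retrieval is naive word overlap instead of vector search, and `llm` is any function that takes a prompt string and returns text, standing in for a real model call.

```python
import re
from typing import Callable

# Hypothetical prompt template; the wording is an assumption, not LlamaIndex's built-in prompt.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n"
    "If the context does not contain the answer, say that you don't know.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def _words(text: str) -> set[str]:
    """Lowercased word set used for the naive overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Naive stand-in for vector search: rank passages by shared distinct words."""
    question_words = _words(question)
    ranked = sorted(passages, key=lambda p: len(question_words & _words(p)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, contexts: list[str]) -> str:
    """Fill the template with the retrieved passages and the user's question."""
    return PROMPT_TEMPLATE.format(context="\n---\n".join(contexts), question=question)


def answer_question(
    question: str,
    passages: list[str],
    llm: Callable[[str], str],
    top_k: int = 2,
) -> str:
    """Retrieve relevant passages, build a prompt, and hand it to the (injected) LLM."""
    contexts = retrieve(question, passages, top_k)
    return llm(build_prompt(question, contexts))


if __name__ == "__main__":
    passages = [
        "Python was created by Guido van Rossum and first released in 1991.",
        "The llama is a domesticated South American camelid.",
        "Streamlit is an open-source Python framework for building data apps.",
    ]

    def echo_llm(prompt: str) -> str:
        # Stand-in for a real model call: just return the prompt so we can inspect it.
        return prompt

    print(answer_question("Who created Python?", passages, echo_llm, top_k=1))
```

In the Streamlit app, the question would come from a widget such as `st.text_input(...)` and the returned answer would be displayed with `st.write(...)`. Injecting `llm` as a plain function keeps the retrieval-and-prompt logic testable without network access or an API key.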

Image copyright YouTube

Watch Wikipedia RAG System in Python - Beginner Tutorial with LlamaIndex on YouTube

Viewer Reactions for Wikipedia RAG System in Python - Beginner Tutorial with LlamaIndex

The LLM gives more structured answers when a prompt template is used

The LLM is already trained on Wikipedia

Related Articles

Building a Stock Prediction Tool: PyTorch, FastAPI, React & Warp Tutorial

NeuralNine builds a stock prediction tool using PyTorch, FastAPI, React, and Warp. The tutorial covers training the model, building the backend, and deploying the application with Docker, showing end to end how an AI model can be used to predict stock prices.

Exploring Arch Linux: Customization, Updates, and Troubleshooting Tips

NeuralNine explores the switch to Arch Linux for cutting-edge updates and customization, detailing the manual setup process, troubleshooting tips, and the benefits of the Arch User Repository.

Master Application Monitoring: Prometheus & Grafana Tutorial

Learn to monitor applications professionally using Prometheus and Grafana in Python with NeuralNine. This tutorial guides you through setting up a Flask app, tracking metrics, handling exceptions, and visualizing data. Dive into the world of application monitoring with this comprehensive guide.

Mastering Logistic Regression: Python Implementation for Precise Class Predictions

NeuralNine explores logistic regression, a classification algorithm that outputs the probability of each class. From the model parameters to the sigmoid function, the video works through the underlying math and implements it in Python to make accurate predictions.