Mastering Logistic Regression: Python Implementation for Precise Class Predictions

- Authors

- Published on

Today, NeuralNine takes on the daring task of implementing logistic regression from scratch in Python. The episode plunges deep into the mathematics, unraveling the inner workings of this classification algorithm. Despite its misleading name, logistic regression does not predict concrete continuous values: it estimates the probability that an input belongs to a given class, and that probability is then turned into a class index. The team sets out to understand the mathematics behind this transformation from raw data to class predictions.
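As a compact reference, the model and its usual decision rule can be written as follows. The notation is chosen here and may differ from the video's: x is the feature vector, w the weights, b the bias, and 0.5 is an assumed default threshold that turns the probability into a class index.

```latex
z = \mathbf{w}^\top \mathbf{x} + b, \qquad
\hat{p} = P(y = 1 \mid \mathbf{x}) = \sigma(z) = \frac{1}{1 + e^{-z}}, \qquad
\hat{y} =
\begin{cases}
1 & \text{if } \hat{p} \ge 0.5,\\
0 & \text{otherwise.}
\end{cases}
```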

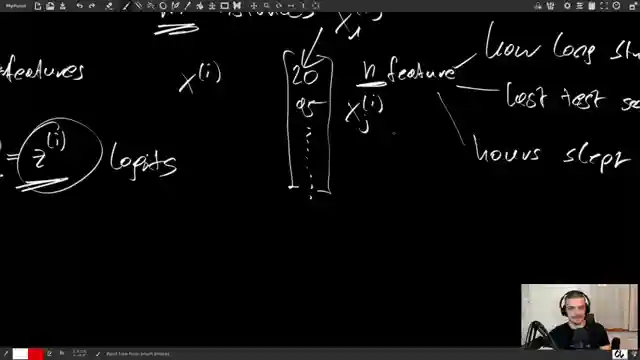

With a swagger befitting the task, NeuralNine dives headfirst into parameters and logits. The input features are combined with the model's weights and a bias into a single number, the logit, which the sigmoid function then converts into a probability. Like a master illusionist, the sigmoid squeezes any real-valued logit into the range between 0 and 1, so its output can be read as the likelihood of the positive outcome. From there the team navigates the waters of optimization: the search for the parameters that make the model as accurate as possible at binary classification.
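A minimal NumPy sketch of the logit and sigmoid steps is shown below. It is not the video's code: the helper names `predict_proba` and `predict`, the example weights, and the default threshold of 0.5 are illustrative assumptions. The sigmoid is written in a numerically stable form so that large-magnitude logits never overflow `np.exp`.

```python
import numpy as np

def sigmoid(z):
    """Squash logits into probabilities in (0, 1), in a numerically stable way."""
    z = np.asarray(z, dtype=float)
    # exp(-|z|) lies in (0, 1], so it can never overflow.
    e = np.exp(-np.abs(z))
    # z >= 0: 1 / (1 + exp(-z));  z < 0: exp(z) / (1 + exp(z)).
    return np.where(z >= 0, 1.0 / (1.0 + e), e / (1.0 + e))

def predict_proba(X, w, b):
    """Hypothetical helper: logits z = X @ w + b, mapped to P(y = 1 | x)."""
    return sigmoid(X @ w + b)

def predict(X, w, b, threshold=0.5):
    """Hypothetical helper: threshold the probabilities into class indices 0/1."""
    return (predict_proba(X, w, b) >= threshold).astype(int)

if __name__ == "__main__":
    X = np.array([[0.0, 0.0], [1.0, 2.0], [-3.0, 1.0]])
    w = np.array([0.5, -0.25])  # illustrative weights, not fitted to any data
    b = 0.1
    print(predict_proba(X, w, b))  # logits are 0.1, 0.1 and -1.65
    print(predict(X, w, b))  # → [1 1 0]
```

Raising the threshold trades recall for precision: with `threshold=0.6`, all three example rows above would be assigned class 0.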

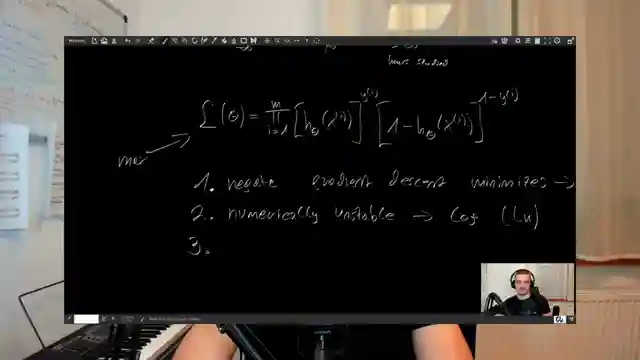

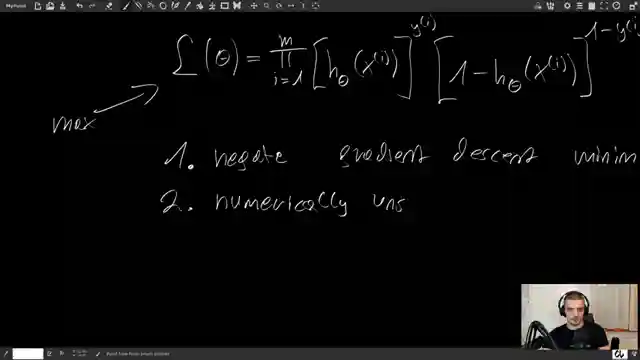

The pursuit of optimal parameters then takes NeuralNine through the likelihood function. Armed with the sigmoid outputs and the true class labels, the team builds the likelihood of the observed data and searches for the parameters that maximize it. This back-and-forth between model and data drives the parameters toward values that assign high probabilities to the correct outcomes. In the realm of logistic regression, where numbers turn into probabilities and probabilities into classifications, NeuralNine walks through the whole process step by step.
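One way to sketch the maximum-likelihood fit is plain batch gradient ascent on the Bernoulli log-likelihood, whose gradient with respect to each logit is simply y - p. This is an assumed optimizer, not necessarily the one used in the video, and the names `fit` and `log_likelihood`, the learning rate, and the iteration count are illustrative choices.

```python
import numpy as np

def sigmoid(z):
    """Numerically stable logistic function."""
    e = np.exp(-np.abs(z))
    return np.where(z >= 0, 1.0 / (1.0 + e), e / (1.0 + e))

def log_likelihood(X, y, w, b):
    """Bernoulli log-likelihood: sum of y*log(p) + (1 - y)*log(1 - p)."""
    z = X @ w + b
    # log(sigmoid(z)) = -log(1 + exp(-z)) and log(1 - sigmoid(z)) = -log(1 + exp(z));
    # np.logaddexp(0, t) evaluates log(1 + exp(t)) without overflow.
    return np.sum(-y * np.logaddexp(0.0, -z) - (1 - y) * np.logaddexp(0.0, z))

def fit(X, y, lr=0.1, n_iters=1000):
    """Maximize the log-likelihood with batch gradient ascent (gradient averaged over samples)."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(n_iters):
        p = sigmoid(X @ w + b)
        error = y - p  # derivative of the log-likelihood w.r.t. each logit
        w += lr * (X.T @ error) / n_samples
        b += lr * error.mean()
    return w, b

if __name__ == "__main__":
    # Toy 1-D data: negatives on the left, positives on the right.
    X = np.array([[-2.0], [-1.0], [1.0], [2.0]])
    y = np.array([0.0, 0.0, 1.0, 1.0])
    w, b = fit(X, y, lr=0.5, n_iters=500)
    print((sigmoid(X @ w + b) >= 0.5).astype(int))  # → [0 0 1 1]
```

Maximizing this log-likelihood is equivalent to minimizing the binary cross-entropy loss. On perfectly separable data like the toy set above, the weights keep growing with more iterations, which is one reason regularization or early stopping is common in practice.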

Image copyright YouTube

Watch Logistic Regression From Scratch in Python (Mathematical) on YouTube

Viewer Reactions for Logistic Regression From Scratch in Python (Mathematical)

Re-uploaded video due to a misplaced clip

Request for more machine learning models from scratch

Excitement for upcoming ML series

Clarification on regression and classification terms

Logistic regression predicts a probability used to distinguish classes

Probabilities between 0 and 1 used for classification

Thresholding for classification

Modeling a continuous outcome (the probability) within a regression framework

Fitting coefficients via maximum likelihood estimation

Clarification that "regression" refers to model structure, not output type
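The naming point raised in these reactions can be stated as one equation. In the standard formulation (notation chosen here: x the features, w the weights, b the bias), logistic regression is a linear model of the log-odds of the positive class; solving this relation for the probability gives back the sigmoid of the same linear term.

```latex
\log \frac{P(y = 1 \mid \mathbf{x})}{1 - P(y = 1 \mid \mathbf{x})} = \mathbf{w}^\top \mathbf{x} + b
\quad\Longleftrightarrow\quad
P(y = 1 \mid \mathbf{x}) = \frac{1}{1 + e^{-(\mathbf{w}^\top \mathbf{x} + b)}}
```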

Related Articles

Building a Stock Prediction Tool: PyTorch, FastAPI, React & Warp Tutorial

NeuralNine constructs a stock prediction tool using PyTorch, FastAPI, React, and Warp. The tutorial showcases training the model, building the backend, and deploying the application with Docker. Witness the power of AI in predicting stock prices with this comprehensive guide.

Exploring Arch Linux: Customization, Updates, and Troubleshooting Tips

NeuralNine explores the switch to Arch Linux for cutting-edge updates and customization, detailing the manual setup process, troubleshooting tips, and the benefits of the Arch User Repository.

Master Application Monitoring: Prometheus & Grafana Tutorial

Learn to monitor applications professionally using Prometheus and Grafana in Python with NeuralNine. This tutorial guides you through setting up a Flask app, tracking metrics, handling exceptions, and visualizing data. Dive into the world of application monitoring with this comprehensive guide.