Unlocking Advanced AI Locally: Ollama Integration for Developers

In this video, IBM Technology shows how to run state-of-the-art large language models directly on your own laptop. Ollama, a tool that is quickly gaining traction among developers, lets you run optimized models for tasks such as code assistance and AI integration without relying on cloud services. Because the model runs on your machine, your data stays local instead of being sent to a third-party service, and you still get access to advanced AI capabilities.

While walking through the installation, IBM Technology explains the value Ollama adds. To work with large language models, developers no longer need access to large remote computing resources, and they no longer have to hand their data to external providers. Instead, they can run the models on their local machines and keep full control of their AI projects. Ollama is a command-line tool available for macOS, Windows, and Linux, and it comes with a large catalog of models to explore.
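After installation, a quick way to confirm that Ollama is running is to query its local HTTP server. The sketch below is a minimal example that assumes the default address `http://localhost:11434` and Ollama's `/api/version` endpoint. The helper name `ollama_version` is our own and is not part of any Ollama library.

```python
import json
import urllib.request
from typing import Optional

# Assumption: Ollama's default local address (port 11434); adjust if you changed OLLAMA_HOST.
OLLAMA_URL = "http://localhost:11434"


def ollama_version(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> Optional[str]:
    """Return the local Ollama server's version string, or None if it cannot be reached."""
    try:
        with urllib.request.urlopen(base_url + "/api/version", timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (OSError, ValueError):
        # OSError covers URLError, refused connections and timeouts;
        # ValueError covers a reply that is not valid JSON.
        return None


if __name__ == "__main__":
    version = ollama_version()
    if version is None:
        print("No Ollama server answered at", OLLAMA_URL)
    else:
        print("Ollama server version:", version)
```

If the function returns `None`, the server is probably not running yet. Starting the desktop app, or running `ollama serve` in a terminal, should make it available.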

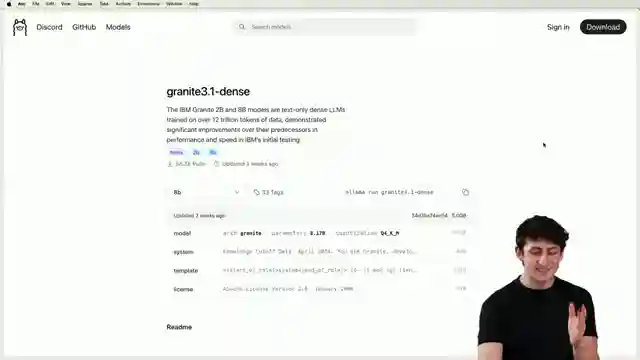

The demonstration shows how Ollama fits into a developer's workflow: downloading a model, chatting with it from the terminal, and picking task-specific models such as code assistants. The Granite 3.1 model stands out for its support for multiple languages and its focus on enterprise tasks. The Ollama model catalog also offers models for many other applications, which gives developers plenty to experiment with.
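The terminal chat shown in the video can also be driven programmatically through Ollama's local REST API. The sketch below is a minimal example under two assumptions. First, the server listens on the default port. Second, the Granite 3.1 model was pulled under the tag `granite3.1-dense` (check `ollama list` for the tags you actually have). It sends one non-streaming request to `/api/chat`. The helper names `build_chat_request` and `chat` are hypothetical and chosen for illustration.

```python
import json
import urllib.request
from typing import Dict, List

# Assumptions: Ollama's default address, and "granite3.1-dense" as the tag of the
# Granite 3.1 model you pulled; run `ollama list` to see your local tags.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL = "granite3.1-dense"

Message = Dict[str, str]


def build_chat_request(history: List[Message], user_text: str, model: str = MODEL) -> dict:
    """Return a non-streaming /api/chat payload with the user's new turn appended."""
    messages = history + [{"role": "user", "content": user_text}]  # new list; history is not mutated
    return {"model": model, "messages": messages, "stream": False}


def chat(history: List[Message], user_text: str, timeout: float = 120.0) -> List[Message]:
    """Send one turn to the local model and return the history including its reply."""
    payload = build_chat_request(history, user_text)
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        reply = json.load(resp)["message"]  # e.g. {"role": "assistant", "content": "..."}
    return payload["messages"] + [reply]
```

Run `history = chat([], "Explain what a Java record is.")` and then `print(history[-1]["content"])` to see the reply; this requires a running server and a pulled model. The endpoint keeps no conversation state between calls, so to continue a conversation you pass `history` back into `chat`.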

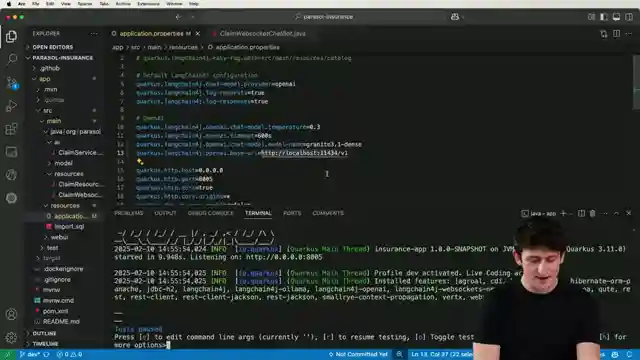

The video then turns to integrating local language models into existing applications. By combining LangChain for Java (LangChain4j) with the Quarkus framework, an application can send requests to the locally served model in a standardized format rather than through vendor-specific code. The overall message is that Ollama lets developers add AI features to their applications, and explore the wider AI landscape, while keeping control over their models and data.
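The article does not say which request format the video's Quarkus and LangChain4j setup uses. One widely used standardized format is the OpenAI-style chat completions schema, which Ollama also serves under `/v1/`. The sketch below assumes that endpoint on the default port and shows why a shared format helps: the request body and response parsing stay the same regardless of which compatible server answers. The function names are hypothetical, and the sketch is not LangChain4j's actual implementation.

```python
import json
import urllib.request

# Assumption: Ollama's OpenAI-compatible endpoint on the default port; the article
# does not say the video's Quarkus/LangChain4j setup uses this exact route.
OPENAI_COMPAT_URL = "http://localhost:11434/v1/chat/completions"


def build_request(model: str, system: str, user: str) -> bytes:
    """Encode an OpenAI-style chat completion request body."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
    }
    return json.dumps(body).encode("utf-8")


def extract_reply(response: dict) -> str:
    """OpenAI-style responses carry the text at choices[0].message.content."""
    return response["choices"][0]["message"]["content"]


def ask(model: str, system: str, user: str, url: str = OPENAI_COMPAT_URL,
        timeout: float = 120.0) -> str:
    """Send one chat request to the configured endpoint and return the reply text."""
    req = urllib.request.Request(
        url,
        data=build_request(model, system, user),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(json.load(resp))
```

Pointing this code at another compatible server mainly means changing `url`; hosted services usually also require an API key header. In a Java application, a framework such as LangChain4j would typically hide calls like this behind a typed interface.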

Image copyright YouTube

Watch "Run AI Models Locally with Ollama: Fast & Simple Deployment" on YouTube

Viewer Reactions for Run AI Models Locally with Ollama: Fast & Simple Deployment

IBM's use of AI is impressive

The presentation on Ollama and integrating with other tools is well-received

Positive feedback on the explanation provided in the video

Suggestion for creating Data AI Management Systems like DB2 with AI Open Connectivity Driver

Emphasis on the importance of a core unified industrial framework for boosting the AI industry