Revolutionizing Sentiment Analysis: KNN vs. BERT with Gzip Compression

Today on sentdex, we delve into a revolutionary text classification approach that takes on the mighty BERT in sentiment analysis. Using the humble k-nearest neighbors (KNN) algorithm and the trusty gzip compressor, this method challenges the status quo with its simplicity and efficiency. By compressing text and using normalized compression distances (NCD) as feature vectors, the algorithm offers a fresh perspective on machine learning tasks such as text classification.

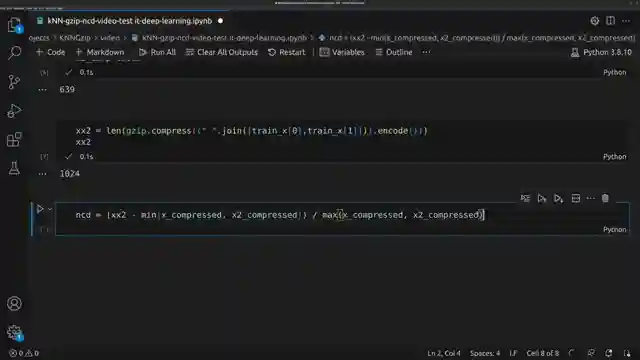

The process converts each text into a number by compressing it (its compressed size in bytes), normalizes these values so that pairs of texts can be compared, and calculates the NCD between a sample and every training sample. The video walks through a practical implementation of this technique, showcasing its potential in real-world applications. Through meticulous testing and tweaking, sentdex uncovers the nuances of this approach, highlighting its strengths and areas for improvement.
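A minimal Python sketch of these three steps, assuming gzip at its default compression level, UTF-8 encoding, and a single space as the separator when two texts are concatenated (the separator is an assumption; the video may join the texts differently). The helper names `compressed_len`, `ncd`, and `ncd_vector` are hypothetical, not taken from the video.

```python
import gzip


def compressed_len(text: str) -> int:
    """Step 1: turn a text into a number, its gzip-compressed size in bytes."""
    return len(gzip.compress(text.encode("utf-8")))


def ncd(x: str, y: str) -> float:
    """Step 2: normalized compression distance between two texts.

    Close to 0 for texts that share most of their content, close to 1 for unrelated texts.
    """
    cx, cy = compressed_len(x), compressed_len(y)
    # Assumption: the two texts are joined with a single space before compressing.
    cxy = compressed_len(x + " " + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


def ncd_vector(sample: str, train_texts: list[str]) -> list[float]:
    """Step 3: NCD of one sample against every training sample."""
    return [ncd(sample, t) for t in train_texts]


# Hypothetical toy training texts, for illustration only.
train_texts = [
    "this movie was wonderful and the acting was superb",
    "an awful, tedious film with a plot that dragged on",
]
print(ncd_vector("this movie was wonderful and the acting was superb!", train_texts))
```

The sample is a near copy of the first training text, so its first NCD comes out much smaller than its second.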

With a keen eye on performance, multiprocessing is introduced to speed up the NCD calculation for each sample pair. This change not only improves efficiency but also sets the stage for scaling the method up to larger datasets. The results speak volumes, with the algorithm achieving a commendable 75.7% accuracy on a 10,000-sample dataset, albeit falling slightly short of the accuracy reported in the original paper.
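Because each row of NCDs (one sample against every training sample) is independent of the others, the work parallelizes naturally. Below is a minimal sketch using Python's standard `multiprocessing.Pool`, assuming a POSIX system where the `fork` start method is available. The helper names are hypothetical, and the video's implementation may split the work differently. The sketch also caches each training text's compressed size so it is computed once rather than once per pair.

```python
import gzip
import multiprocessing as mp
from functools import partial
from typing import Optional


def clen(text: str) -> int:
    """gzip-compressed size of a text in bytes."""
    return len(gzip.compress(text.encode("utf-8")))


def ncd_row(sample: str, train_texts: list[str], train_lens: list[int]) -> list[float]:
    """NCD of one sample against every training text (one row of the NCD matrix)."""
    cs = clen(sample)
    row = []
    for text, ct in zip(train_texts, train_lens):
        cst = clen(sample + " " + text)  # assumption: space-separated concatenation
        row.append((cst - min(cs, ct)) / max(cs, ct))
    return row


def ncd_matrix_parallel(samples: list[str], train_texts: list[str],
                        processes: Optional[int] = None) -> list[list[float]]:
    """Compute the NCD rows for all samples in parallel worker processes."""
    # Compress each training text once instead of once per sample pair.
    train_lens = [clen(t) for t in train_texts]
    worker = partial(ncd_row, train_texts=train_texts, train_lens=train_lens)
    # Assumption: "fork" is available (Linux/macOS). On Windows, use the default
    # context and call this from under an `if __name__ == "__main__":` guard.
    ctx = mp.get_context("fork")
    with ctx.Pool(processes=processes) as pool:
        return pool.map(worker, samples)


if __name__ == "__main__":
    train = ["this movie was wonderful and the acting was superb",
             "an awful, tedious film with a plot that dragged on"]
    print(ncd_matrix_parallel(train, train, processes=2))
```

Parallelizing by row keeps each task reasonably large: a worker compresses one sample once and then loops over the training set. Farming out individual pairs would spend more time on inter-process overhead.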

Image copyright YouTube

Watch Gzip is all You Need! (This SHOULD NOT work) on YouTube

Viewer Reactions for Gzip is all You Need! (This SHOULD NOT work)

The compression distance is constructed as an approximate measure of mutual information between strings, with similar strings yielding a smaller NCD.

Gzip is used to find the "distance" between two strings, with the method explained through equations.

The method compresses each of the two texts individually, then concatenates them and compresses the result to determine how similar they are.
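For reference, here is the standard definition of the normalized compression distance. It matches the steps described above, and we assume it is the form used in the video:

$$
\mathrm{NCD}(x, y) = \frac{C(xy) - \min\{C(x),\, C(y)\}}{\max\{C(x),\, C(y)\}}
$$

Here $C(\cdot)$ is the length in bytes of the gzip-compressed input and $xy$ is the concatenation of the two texts. If $x$ and $y$ are nearly identical, then $C(xy) \approx C(x) \approx C(y)$ and the distance is close to 0. If they share nothing, then $C(xy) \approx C(x) + C(y)$, and taking $C(x) \le C(y)$ the distance is close to $C(y)/C(y) = 1$.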

Compression algorithms produce longer outputs for text with more variation, which in turn affects the distances used for sentiment analysis.
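One quick way to see this effect is to compress two strings of the same length, one highly repetitive and one with little structure. This is a minimal sketch; the pseudo-random string uses a fixed seed purely for illustration.

```python
import gzip
import random


def clen(text: str) -> int:
    """gzip-compressed size of a text in bytes."""
    return len(gzip.compress(text.encode("utf-8")))


# Two 1,000-character strings: one repetitive, one with little structure.
repetitive = "good " * 200
rng = random.Random(0)  # fixed seed so the example is reproducible
varied = "".join(rng.choice("abcdefghijklmnopqrstuvwxyz ") for _ in range(1000))

print(len(repetitive), clen(repetitive))
print(len(varied), clen(varied))
```

The repetitive string compresses to a small fraction of its length, while the varied one stays much larger. Because raw compressed sizes vary this much, NCD divides by the larger of the two compressed sizes instead of comparing raw byte counts directly.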

Text compression is closely related to AI, as seen in competitions like the Hutter Prize.

The method uses normalized compression distances (NCD) as features for sentiment analysis and performs better than random classification.

The video explores the unexpected success of the method and its implications for NLP tasks.

The approach challenges the dominance of deep learning, emphasizing the value of revisiting first principles.

The method calculates each sample's vector of NCDs against the training samples and then uses k-nearest neighbors for sentiment classification.
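Below is a minimal sketch of the classification step, following the pseudocode published with the original gzip paper. Each entry in a sample's NCD vector is treated as its distance to one training sample, and the k training samples with the smallest NCD vote on the label. The video's variant, which treats NCD vectors as features for a KNN classifier, may differ in detail. The names `knn_predict` and `accuracy`, the toy texts, and the default `k=3` are illustrative assumptions.

```python
import gzip
from collections import Counter


def clen(text: str) -> int:
    """gzip-compressed size of a text in bytes."""
    return len(gzip.compress(text.encode("utf-8")))


def knn_predict(sample: str, train_texts: list[str], train_labels: list[str], k: int = 3) -> str:
    """Label a sample by majority vote among its k lowest-NCD training samples."""
    cs = clen(sample)
    dists = []
    for text, label in zip(train_texts, train_labels):
        ct = clen(text)
        cst = clen(sample + " " + text)  # assumption: space-separated concatenation
        dists.append(((cst - min(cs, ct)) / max(cs, ct), label))
    nearest = sorted(dists, key=lambda d: d[0])[:k]
    # Counter keeps first-seen order, so ties go to the label of the closest sample.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def accuracy(test_texts, test_labels, train_texts, train_labels, k: int = 3) -> float:
    """Fraction of test samples whose predicted label matches the true label."""
    preds = [knn_predict(t, train_texts, train_labels, k) for t in test_texts]
    return sum(p == y for p, y in zip(preds, test_labels)) / len(test_labels)


# Hypothetical toy data, for illustration only.
train_texts = [
    "this movie was wonderful and the acting was superb",
    "I loved every minute, a delightful and moving story",
    "an awful, tedious film with a plot that dragged on",
    "I regretted buying the ticket, boring and badly acted",
]
train_labels = ["pos", "pos", "neg", "neg"]
print(knn_predict("this movie was wonderful and the acting was superb, really",
                  train_texts, train_labels, k=1))
```

Every prediction compresses the sample against the entire training set, so the cost grows as (test samples × training samples). That quadratic cost is why the NCD calculations are worth parallelizing. On a real labeled dataset, `accuracy` on held-out samples produces the kind of figure reported in the video; on this four-sentence toy set it means nothing.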

Viewers raised questions about the method's validity, its potential problems, and whether NCDs alone are sufficient for sentiment classification.
