Revolutionizing Object Detection: Moondream 2 in the Jeff the G1 Series

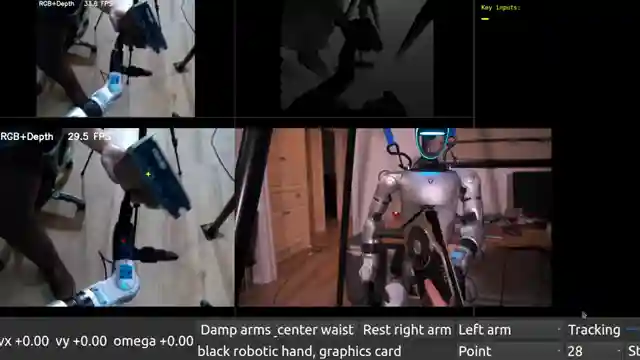

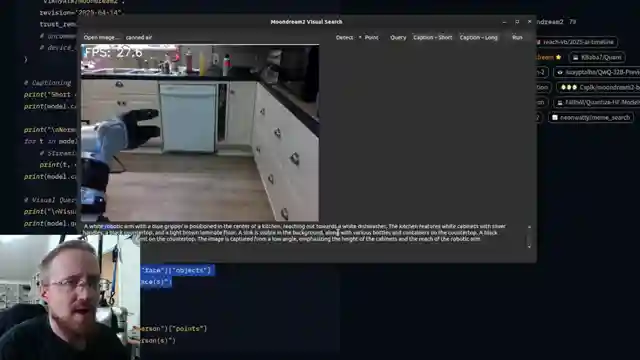

In the latest episode of the Jeff the G1 series, the team turns to object detection with a Vision Language Model (VLM), Moondream 2. The model has roughly two billion parameters, and because the target is given as a natural-language prompt, both the description of the object and its reported location can be updated in real time. For each prompt, the VLM reports where the matching object sits in the camera image, which gives the team a position to track in XY space. They demonstrate this through a simple GUI, detecting a wide range of objects from text descriptions alone, from microwaves to canned air, quickly and accurately.
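To make "tracking in XY space" concrete, here is a minimal sketch of turning one detection into a pixel coordinate. It assumes the detector returns a bounding box with normalized `x_min`, `y_min`, `x_max`, `y_max` values between 0 and 1; that format, the helper name `box_center_px`, and the sample values are illustrative assumptions, not something confirmed by the video.

```python
from typing import Dict, Tuple


def box_center_px(box: Dict[str, float], width: int, height: int) -> Tuple[int, int]:
    """Return the pixel coordinates of the center of a normalized bounding box.

    Assumes box values are fractions of the image size in the range [0, 1].
    """
    cx = (box["x_min"] + box["x_max"]) / 2.0
    cy = (box["y_min"] + box["y_max"]) / 2.0
    # Scale to pixels and clamp so the result is always a valid pixel index.
    px = min(max(int(cx * width), 0), width - 1)
    py = min(max(int(cy * height), 0), height - 1)
    return px, py


# Hypothetical detection for a prompt such as "microwave" on a 640x480 frame.
detection = {"x_min": 0.25, "y_min": 0.5, "x_max": 0.75, "y_max": 1.0}
print(box_center_px(detection, width=640, height=480))  # (320, 360)
```

Running a new prompt through the detector and calling the helper again would yield an updated XY target each frame, which is the kind of value a GUI overlay or arm controller could consume.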

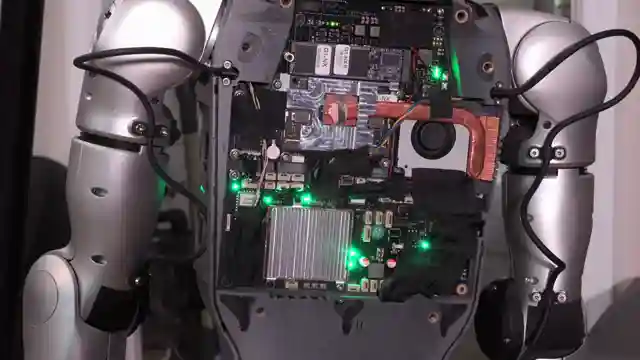

Despite the VLM's capabilities, the team runs into several challenges, including tuning the arm's movement speed and improving detection accuracy by adding colored tape to objects such as the robotic hand. They also examine how camera positioning affects visibility and identify the need for depth measurements from the hand's perspective. Troubleshooting power supply and port connection issues provides a look at the internal workings of the Unitree G1 and at the practical complexity of the hardware. Through repeated testing and experimentation, the team builds a clearer picture of what reliable object detection and tracking requires.
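One way to see why colored tape helps is that a distinctive hue is easy to isolate. The sketch below is a classical color-threshold approach, not the VLM-based method shown in the video. It finds the centroid of pixels near a target hue in a small RGB image represented as nested lists. The function name `marker_centroid`, the thresholds, and the sample image are all assumptions chosen for illustration.

```python
import colorsys
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def marker_centroid(
    image: List[List[Pixel]],
    hue: float,
    hue_tol: float = 0.05,
    min_sat: float = 0.5,
    min_val: float = 0.3,
) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of pixels whose hue is close to `hue`.

    `hue` is in [0, 1) as used by colorsys. Returns None if nothing matches.
    """
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            # Hue is circular, so measure the shorter way around.
            dh = min(abs(h - hue), 1.0 - abs(h - hue))
            if dh <= hue_tol and s >= min_sat and v >= min_val:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return sum(xs) / len(xs), sum(ys) / len(ys)


# Hypothetical 4x4 gray frame with a 2x2 patch of green "tape" in the middle.
GRAY, GREEN = (128, 128, 128), (0, 255, 0)
frame = [[GRAY] * 4 for _ in range(4)]
for yy in (1, 2):
    for xx in (1, 2):
        frame[yy][xx] = GREEN

print(marker_centroid(frame, hue=1 / 3))  # (1.5, 1.5)
```

A saturated, uncommon color keeps false matches low, which is the same property that would make a taped hand easier to describe and pick out with a text prompt.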

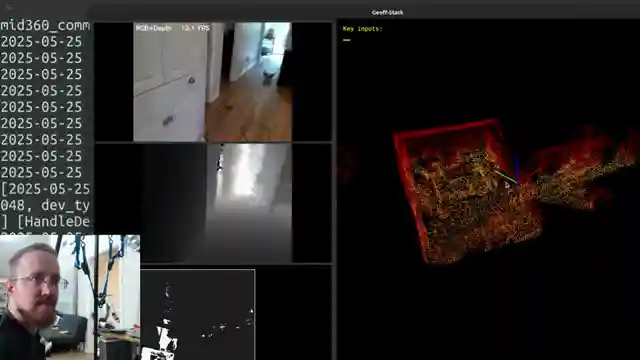

The team also wants to retain SLAM while adjusting the camera angle for better visibility, a trade-off they are still working through. Tackling problems like these head-on lays the groundwork for later episodes. The Jeff the G1 series continues to explore what VLMs can do on a humanoid robot, and each episode moves a step closer to robots that interact smoothly with their surroundings, guided by vision and language models.

Image copyright YouTube


Watch A bigger brain for the Unitree G1- Dev w/ G1 Humanoid P.4 on YouTube

Viewer Reactions for A bigger brain for the Unitree G1- Dev w/ G1 Humanoid P.4

- Boston Dynamics released a new model for Atlas related to visual perception and planning actions based on environmental changes

- Suggestions for using visual tracking algorithms, updating occupancy maps, and splitting voxels for better object detection

- Excitement about the robot's small head and potential advancements in future models

- Interest in using LiDAR point clouds for physical modeling and collision detection

- Suggestions to integrate MoveIt in ROS 2 for grabbing objects

- Ideas for integrating the project with Groot and solving path planning issues

- Questions about hand pathfinding without ML and the weight of the robot's arm

- Comments on the use of metal actuators in the joints and the potential cloud-based nature of robots

- Concerns about AI alignment and the alignment of systems with the law of humanity

- Appreciation for the creator's work and videos, with some users finding them inspiring and exciting
