Revolutionize AI: Run Models Locally with Ollama for Cost-Efficiency

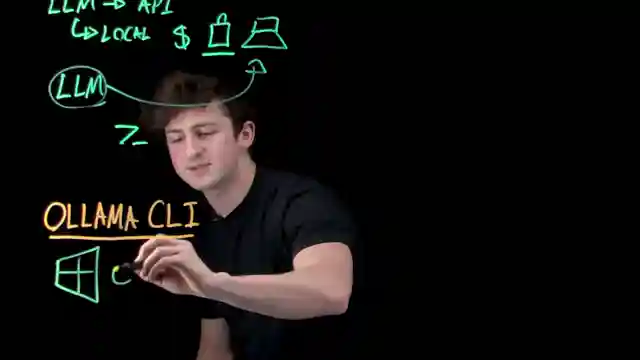

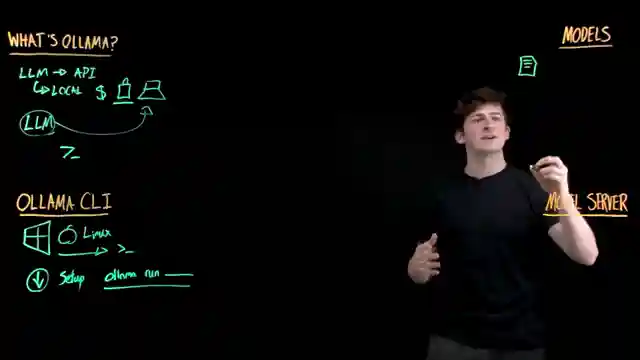

In this episode, IBM Technology looks at running AI models locally with Ollama. Instead of relying on cloud services for your AI needs, Ollama lets you take control, save on costs, and keep your data on your own machine. It's like having a high-tech workshop in your garage, but for AI models! The team shows how Ollama's CLI simplifies the process: a single command downloads a model, runs it, and lets you interact with it.
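As a rough illustration of that single-command workflow, here is a minimal Python sketch that builds and runs `ollama pull` and `ollama run` invocations. It assumes the `ollama` CLI is installed and on your PATH. The helper names `ollama_command` and `run_ollama` are hypothetical, and `llama3.2` is only an example model name; substitute any model from the Ollama catalog.

```python
import shutil
import subprocess
from typing import List, Optional


def ollama_command(action: str, model: str, prompt: Optional[str] = None) -> List[str]:
    """Build the argument list for an `ollama pull` or `ollama run` call."""
    if action not in {"pull", "run"}:
        raise ValueError(f"unsupported action: {action!r}")
    argv = ["ollama", action, model]
    if prompt is not None:
        if action != "run":
            raise ValueError("a prompt only makes sense with 'run'")
        # With a prompt argument, `ollama run` answers once and exits
        # instead of opening an interactive session.
        argv.append(prompt)
    return argv


def run_ollama(argv: List[str]) -> str:
    """Run the command and return its standard output as text."""
    if shutil.which(argv[0]) is None:
        raise RuntimeError("the ollama CLI was not found on PATH")
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout


# Example usage (needs a local Ollama install, so it is left commented out):
# run_ollama(ollama_command("pull", "llama3.2"))
# print(run_ollama(ollama_command("run", "llama3.2", "Summarize what Ollama does.")))
```

Separating the command builder from the runner keeps the sketch easy to check without Ollama installed; in day-to-day use you would simply type the same commands in a terminal.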

Ollama doesn't stop at basic functionality. It offers a diverse catalog of language models, multimodal models, embedding models, and tool-calling models to cover a wide range of applications, from conversational assistants to more intricate reasoning tasks. It's like having a toolbox stocked for any AI project. The video mentions popular models such as the Llama series and IBM's Granite models, showing that Ollama is a serious option for real AI work.

What sets Ollama apart is how it abstracts model usage through its model file, the Modelfile, which keeps the whole process simple. Because requests pass through the Ollama server running locally, developers can focus on their projects without the hassle of complex setups. Like a pit crew working behind the scenes, the server handles requests and responses for your application, whether you're working locally or remotely.
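To make the request flow concrete, here is a minimal sketch of an application sending a chat request to a locally running Ollama server over HTTP. It assumes the server is listening on its default address, `http://localhost:11434`, and that the model has already been downloaded. The function names `build_chat_request` and `chat` are hypothetical, and `llama3.2` is only an example model name.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it if your server differs.
OLLAMA_URL = "http://localhost:11434"


def build_chat_request(model: str, user_message: str,
                       base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request for the /api/chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "stream": False,  # ask for one complete JSON reply instead of a stream
    }
    return urllib.request.Request(
        url=f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, user_message: str,
         base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the request to the server and return the assistant's reply text."""
    request = build_chat_request(model, user_message, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["message"]["content"]


# Example usage (needs a running Ollama server, so it is left commented out):
# print(chat("llama3.2", "Why run language models locally?"))
```

Pointing `base_url` at another host is one way to reach an Ollama server running on a different machine, which matches the local-or-remote setup described above.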

Image copyright YouTube

Watch "What is Ollama? Running Local LLMs Made Simple" on YouTube

Viewer Reactions for "What is Ollama? Running Local LLMs Made Simple"

- Suggestions to create a video series on the topic

- Request for a tutorial on running LLMs locally

- Interest in learning about MCP

- Mention of potential audio problems in the video

- Concerns about the limitations of Ollama for enterprise-level use

- Positive feedback on the video

- Appreciation for the core engine of Ollama

- Criticism of the UI and implementation of Ollama

- Comment on the rejection of AI-generated content in various fields

- Mention of using Ollama frequently
