Mastering Self-Supervised Learning: Fine-Tuning DINOv2 on Unlabeled Meme Data

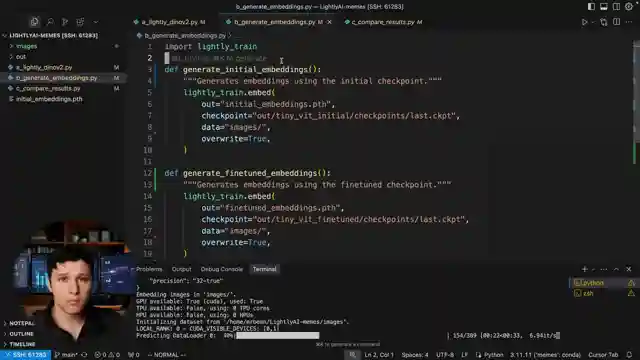

In this episode, Aladdin Persson takes us on a tour of self-supervised learning, armed with the DINOv2 model and a large collection of unlabeled meme images. Working with the Lightly team and their LightlyTrain library, he fine-tunes the model in a process the library makes remarkably simple. The key point? No labels required. As he walks through the code structure, it becomes clear how much this opens up.
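Because no labels are involved, the training data is nothing more than a directory of image files: no annotation file, no class-per-subfolder layout. The sketch below gathers such a folder into a sorted list of paths using only the Python standard library. The function name `collect_unlabeled_images` and the extension set are illustrative assumptions, not part of LightlyTrain's API.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to whatever your folder contains.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp", ".bmp"}


def collect_unlabeled_images(root: str) -> list[Path]:
    """Return every image file under `root` (recursively), sorted.

    There is no label file and no class-per-subfolder layout: for
    self-supervised training the raw images are the whole dataset.
    """
    root_path = Path(root)
    if not root_path.is_dir():
        raise NotADirectoryError(f"{root!r} is not a directory")
    return sorted(
        path
        for path in root_path.rglob("*")
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS
    )
```

For example, `collect_unlabeled_images("memes")` returns every image under a hypothetical `memes/` folder, including nested subfolders, while skipping non-image files such as text notes.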

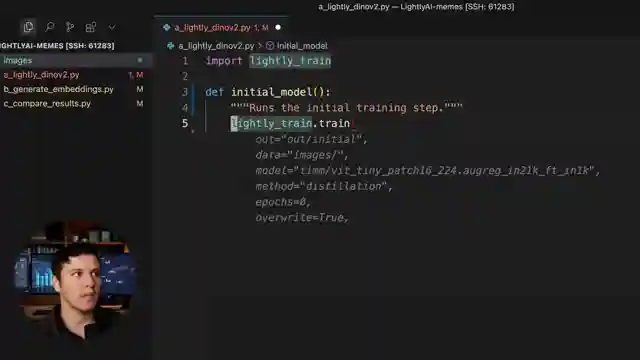

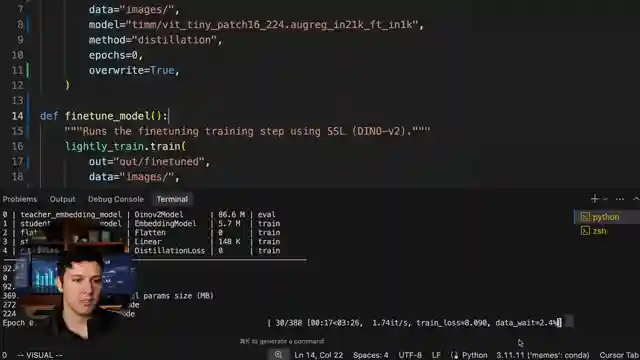

Starting from a folder of images (anything from proprietary data to a treasure trove of meme gold), the team scripts the whole fine-tuning process: initializing the model, then distilling knowledge into it over a series of epochs. Combining a Tiny ViT with distillation, they end up with a self-supervised training run that is as instructive as it is efficient.
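In a generic teacher/student setup, distillation trains a smaller student network to reproduce the features of a larger, frozen teacher, so the training signal comes from the teacher rather than from labels. As a minimal sketch, assuming a plain cosine feature-matching objective (not necessarily the loss LightlyTrain actually uses), the per-batch loss value can be computed as follows. The function `feature_distillation_loss` is hypothetical, and it assumes the student features have already been projected to the teacher's dimension; a real training loop would compute this with autograd (e.g. in PyTorch) so gradients reach the student.

```python
import math


def _normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def feature_distillation_loss(
    student_feats: list[list[float]], teacher_feats: list[list[float]]
) -> float:
    """Mean (1 - cosine similarity) between paired student/teacher features.

    Each argument is a batch: one feature vector per image. Assumes the
    student's features were already projected to the teacher's dimension.
    0.0 means the student matches the teacher's directions exactly.
    """
    if not student_feats or len(student_feats) != len(teacher_feats):
        raise ValueError("batches must be non-empty and the same size")
    total = 0.0
    for s, t in zip(student_feats, teacher_feats):
        if len(s) != len(t):
            raise ValueError("student and teacher features must share a dimension")
        s_unit, t_unit = _normalize(s), _normalize(t)
        total += 1.0 - sum(a * b for a, b in zip(s_unit, t_unit))
    return total / len(student_feats)
```

The cosine form ignores feature magnitude and only asks the student to point in the same direction as the teacher; a mean-squared-error loss on raw features is a common alternative.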

Once training is done, the team generates embeddings with both the initial and the fine-tuned model. Comparing cosine similarities between images shows how the model's grasp of memes has evolved: across examples ranging from statues to chaotic scenes, the fine-tuned model opens up new possibilities for discovering images that share a meme template.

Aladdin plans to bring this approach into the Nani Meme project, so that meme recommendations are no longer limited to text-based queries. Overall, the video shows how much a wrapper like LightlyTrain simplifies an otherwise complex task, putting it within reach.
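The before/after embedding comparison comes down to cosine similarity, cos(a, b) = a·b / (‖a‖ ‖b‖), followed by a nearest-neighbor lookup per query image. Below is a minimal pure-Python sketch with hypothetical helper names; toy vectors stand in for the real model outputs.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(a, b) = a.b / (|a| |b|); 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def top_k_neighbors(embeddings: list[list[float]], query_index: int, k: int = 3) -> list[int]:
    """Indices of the k images most cosine-similar to embeddings[query_index].

    The query itself is excluded; ties are broken by lower index so the
    result is deterministic.
    """
    query = embeddings[query_index]
    scored = [
        (cosine_similarity(query, emb), i)
        for i, emb in enumerate(embeddings)
        if i != query_index
    ]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [i for _, i in scored[:k]]


def neighbors_before_and_after(
    initial: list[list[float]], finetuned: list[list[float]], query_index: int, k: int = 3
) -> dict[str, list[int]]:
    """Hypothetical helper: same query image, neighbors under each model's embeddings."""
    return {
        "initial": top_k_neighbors(initial, query_index, k),
        "finetuned": top_k_neighbors(finetuned, query_index, k),
    }
```

Row `i` of each embedding list must describe the same image under both models; otherwise the two neighbor lists are not comparable. Where the lists differ for a query meme, the fine-tuned model is grouping images differently, which is what a side-by-side comparison like the one in the video is meant to reveal.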

Image copyright YouTube

Watch Train Self-Supervised Models with LightlyTrain + DINOv2 on YouTube

Viewer Reactions for Train Self-Supervised Models with LightlyTrain + DINOv2

- Great video!
- Amazing content
- Love the editing
- Can't wait for the next one
- This was so helpful
- I learned a lot
- Impressive work
- Keep it up
- Thank you for sharing
- Really enjoyed watching

Related Articles

Revolutionizing Recommendations: 360Brew's Game-Changing Decoder Model

Aladdin Persson explores 360Brew, a 150-billion-parameter decoder-only model from LinkedIn, and how it reshapes personalized ranking and recommendation systems with strong performance and scalability.

Best Sleep Tracker: Whoop vs. Apple Watch - Data-Driven Insights

Discover the best sleep tracker as Andrej Karpathy tests four devices over two months. Whoop comes out on top, while the Apple Watch ranks lowest. Learn why objective data matters when tracking sleep.

Unveiling Llama 4: AI Innovation and Performance Comparison

Aladdin Persson's latest video explores Meta's Llama 4 models, Behemoth, Maverick, and Scout, comparing their features and performance.