Mastering RAG System Evaluation with Ragas on NeuralNine

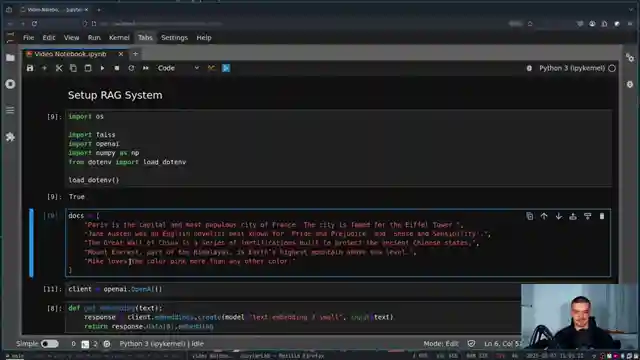

Today on NeuralNine, we look at evaluating RAG (retrieval-augmented generation) systems in Python using the Ragas package. This tool lets us measure the quality of LLM responses against the context they were given, a task that is hard to do reliably by hand. NeuralNine walks through setting up JupyterLab and installing the required dependencies: faiss-cpu (or faiss-gpu), openai, python-dotenv, datasets, ragas, and langchain.

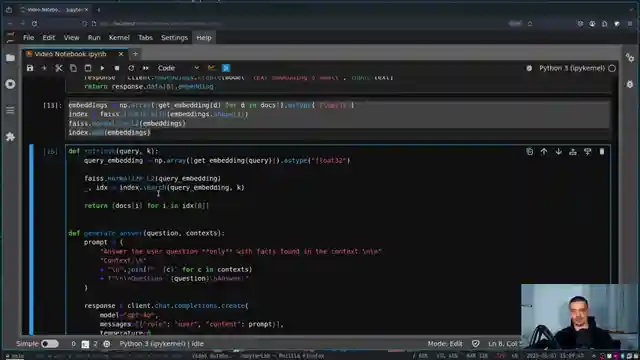

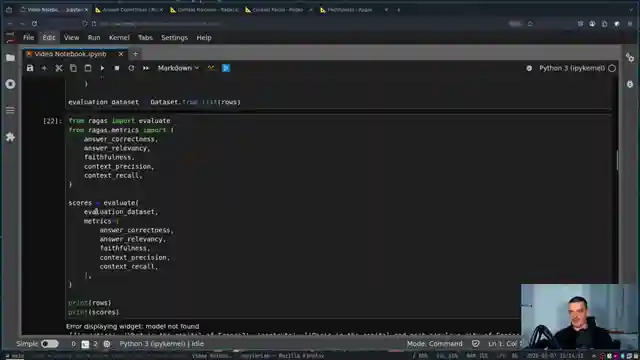

The video first showcases a rudimentary RAG system made up of a knowledge base and a large language model for question answering. The spotlight then shifts to the evaluation phase, where Ragas assesses the correctness and relevance of each response and its faithfulness to the retrieved context. By building a dataset of questions, retrieved contexts, model answers, and ground-truth (expected) answers, NeuralNine demonstrates the step-by-step process of evaluating responses with Ragas.
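The dataset described above can be sketched as follows. This is a minimal sketch rather than the code from the video: the sample rows are made up, `to_columns` is a hypothetical helper, and the column names (`question`, `answer`, `contexts`, `ground_truth`) assume the Ragas 0.1-style schema, which may differ in the release you have installed.

```python
# Column names are an assumption (Ragas 0.1-style schema); check your version.
REQUIRED_COLUMNS = ("question", "answer", "contexts", "ground_truth")

# Hypothetical sample rows, one dict per evaluated question.
samples = [
    {
        "question": "What is the capital of France?",
        "contexts": ["Paris is the capital and largest city of France."],
        "answer": "The capital of France is Paris.",
        "ground_truth": "Paris",
    },
    {
        "question": "Who wrote 'Pride and Prejudice'?",
        "contexts": ["'Pride and Prejudice' is an 1813 novel by Jane Austen."],
        "answer": "It was written by Jane Austen.",
        "ground_truth": "Jane Austen",
    },
]


def to_columns(rows):
    """Turn a list of row dicts into a column-oriented dict, validating each row."""
    columns = {name: [] for name in REQUIRED_COLUMNS}
    for index, row in enumerate(rows):
        missing = [name for name in REQUIRED_COLUMNS if name not in row]
        if missing:
            raise ValueError(f"row {index} is missing columns: {missing}")
        # Each question carries a list of retrieved passages, not a single string.
        if not isinstance(row["contexts"], list):
            raise TypeError(f"row {index}: 'contexts' must be a list of strings")
        for name in REQUIRED_COLUMNS:
            columns[name].append(row[name])
    return columns


eval_columns = to_columns(samples)
```

The resulting dict can be handed to `datasets.Dataset.from_dict(eval_columns)` to obtain the dataset object used for evaluation.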

Ragas metrics such as answer correctness, answer relevancy, faithfulness, context precision, and context recall are explained, with links to the documentation describing how each one is calculated. The video underscores that Ragas itself relies on large language models to compute these scores, so the quality of the evaluation depends on the model doing the judging. For the same reason, a .env file containing an OpenAI API key is required before the evaluation can run.
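A hedged sketch of how the five metrics and the API key might be wired together is shown below. It assumes the `ragas`, `datasets`, and `python-dotenv` packages are installed and that the lowercase metric objects exported by `ragas.metrics` are available in your version. `require_api_key` and `run_evaluation` are hypothetical names chosen for illustration, not functions from the video or from Ragas.

```python
import os


def require_api_key(env=os.environ):
    """Return the OpenAI key from the given mapping, or fail early if it is absent."""
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("OPENAI_API_KEY is not set; add it to your .env file")
    return key


def run_evaluation(columns):
    """Score a column-oriented dict of samples with five Ragas metrics.

    Third-party imports are local so this module can be imported
    even where those packages are not installed.
    """
    from datasets import Dataset
    from dotenv import load_dotenv
    from ragas import evaluate
    from ragas.metrics import (
        answer_correctness,
        answer_relevancy,
        context_precision,
        context_recall,
        faithfulness,
    )

    load_dotenv()       # copies variables from a local .env file into os.environ
    require_api_key()   # the metrics call an LLM, so stop here if no key is present

    dataset = Dataset.from_dict(columns)
    return evaluate(
        dataset,
        metrics=[
            answer_correctness,
            answer_relevancy,
            faithfulness,
            context_precision,
            context_recall,
        ],
    )
```

Calling `run_evaluation` sends requests to the OpenAI API and incurs cost, so it is worth trying on a handful of rows first. The returned result can be converted with `to_pandas()` to inspect per-question scores.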

Image copyright YouTube


Watch Evaluate AI Agents in Python with Ragas on YouTube

Viewer Reactions for Evaluate AI Agents in Python with Ragas

Viewer learning about Ragas and finding it useful for a project

Comparison of pronunciation of "raga" with Indian classical music

Request for a video about dotdrop

Viewer listening to raga music

Related Articles

Building Stock Prediction Tool: PyTorch, FastAPI, React & Warp Tutorial

NeuralNine constructs a stock prediction tool using PyTorch, FastAPI, React, and Warp. The tutorial covers training the model, building the backend, and deploying the application with Docker.

Exploring Arch Linux: Customization, Updates, and Troubleshooting Tips

NeuralNine explores the switch to Arch Linux for cutting-edge updates and customization, detailing the manual setup process, troubleshooting tips, and the benefits of the Arch User Repository.

Master Application Monitoring: Prometheus & Grafana Tutorial

Learn to monitor applications professionally using Prometheus and Grafana in Python with NeuralNine. This tutorial guides you through setting up a Flask app, tracking metrics, handling exceptions, and visualizing data.

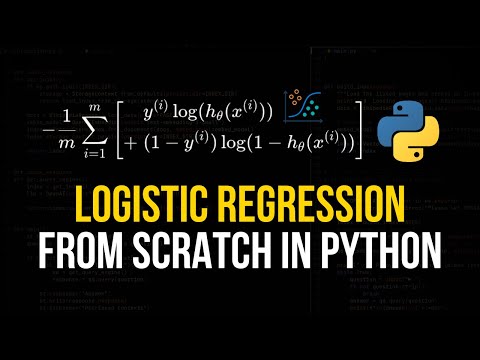

Mastering Logistic Regression: Python Implementation for Precise Class Predictions

NeuralNine explores logistic regression, a classification algorithm that outputs a probability for each class. The video covers the model parameters, the sigmoid function, and the underlying mathematics, then implements it in Python.