Mastering Model Confidence: Python Evaluation with NeuralNine

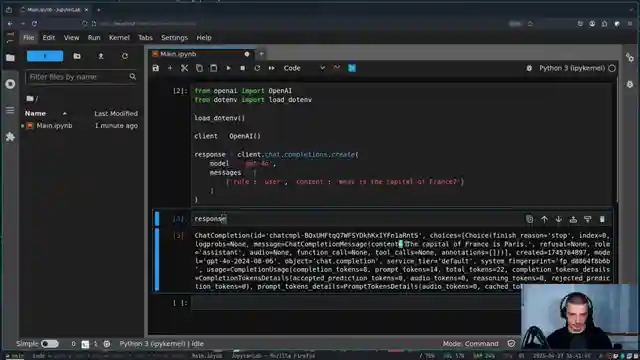

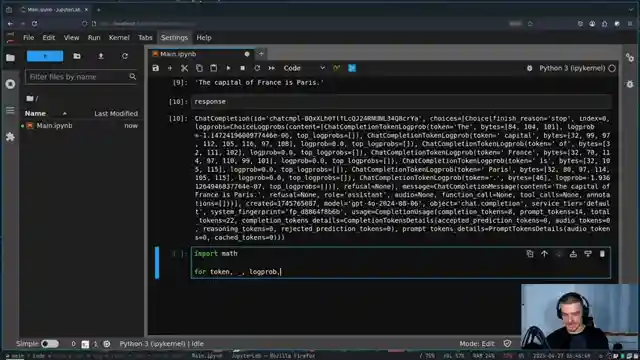

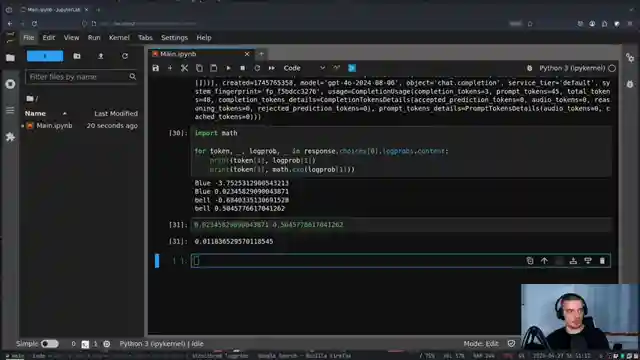

In this video, NeuralNine shows how to evaluate how confident a large language model is in its answers, with a focus on structured output. Using the OpenAI Python package, the video requests the log probabilities (logprobs) of the generated tokens. Each logprob is the logarithm of the probability the model assigned to the token it actually produced, so inspecting them shows how certain the model was at each step of its response. This is certainty, not a guarantee that the response is correct.
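The conversion from logprobs to probabilities is simple arithmetic. The sketch below assumes the (token, logprob) pairs have already been collected; with the OpenAI Chat Completions API and `logprobs=True`, they are available per token as `.token` and `.logprob` on the entries of `response.choices[0].logprobs.content`. The sample values are made up for illustration.

```python
import math


def token_confidences(token_logprobs):
    """Convert (token, logprob) pairs into (token, probability) pairs.

    A logprob is the natural log of the probability the model assigned to the
    token it emitted, so exp(logprob) recovers that probability.
    """
    return [(token, math.exp(lp)) for token, lp in token_logprobs]


def sequence_probability(token_logprobs):
    """Joint probability of the whole sequence: sum the logprobs, then exponentiate."""
    return math.exp(sum(lp for _, lp in token_logprobs))


# Made-up values; in practice these would come from the API response.
example = [("Paris", -0.01), (".", -0.2)]
for token, p in token_confidences(example):
    print(f"{token!r}: {p:.3f}")
print(f"sequence: {sequence_probability(example):.3f}")
```

Summing logprobs before exponentiating avoids multiplying many small probabilities directly, which can underflow for long outputs.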

Next, the video introduces the Pydantic and structured-logprobs packages to aggregate these probabilities over structured output. Instead of looking at individual tokens, the goal is a confidence value for each field of the extracted data. To get there, the video first defines a schema describing the information the model should extract.
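The idea behind per-field aggregation can be sketched without the package itself. The helper `field_confidences` below is hypothetical and is not the structured-logprobs API: it assumes the token texts concatenate exactly to a flat JSON object, finds each top-level value's character span, and sums the logprobs of every token overlapping that span before exponentiating. Tokens that straddle a value boundary (for example one containing both a quote and the first letters of the value) are counted in full, a deliberate simplification.

```python
import json
import math
import re


def field_confidences(tokens):
    """Aggregate token logprobs into one probability per top-level JSON field.

    `tokens` is a list of (token_text, logprob) pairs whose concatenation is a
    flat JSON object. Hypothetical helper for illustration only.
    """
    text = "".join(tok for tok, _ in tokens)

    # Record the character span each token covers in the full text.
    spans = []
    pos = 0
    for tok, logprob in tokens:
        spans.append((pos, pos + len(tok), logprob))
        pos += len(tok)

    decoder = json.JSONDecoder()
    confidences = {}
    for key in json.loads(text):
        # Find where this key's value starts: "key", optional spaces, ":", optional spaces.
        match = re.search(re.escape(json.dumps(key)) + r"\s*:\s*", text)
        start = match.end()
        # raw_decode parses one JSON value at `start` and returns the index where it ends.
        _, end = decoder.raw_decode(text, start)
        # Sum the logprobs of every token overlapping the value, then exponentiate.
        total = sum(lp for s, e, lp in spans if s < end and e > start)
        confidences[key] = math.exp(total)
    return confidences


# Made-up tokens and logprobs for illustration.
tokens = [('{"', 0.0), ("name", 0.0), ('":"', 0.0), ("Alice", -0.05),
          ('","', 0.0), ("age", 0.0), ('":', 0.0), ("30", -0.7), ("}", 0.0)]
for field, p in field_confidences(tokens).items():
    print(f"{field}: {p:.3f}")
```

A real implementation would also need to handle nested objects, arrays, and keys that happen to appear inside string values; this sketch ignores those cases.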

The example schema is a model for extracting information about a person, with the fields name, age, job, and favorite color. Each field carries a description, which tells the language model what the field should contain and makes the extraction more precise.
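A schema of this kind might look like the following Pydantic (v2) model. The field names follow the video's list, but the exact names and description texts here are assumptions. The OpenAI Python SDK's structured-output `parse` helper can accept such a class as `response_format`; that call needs an API key, so the sketch only shows the schema and validates a sample response.

```python
from pydantic import BaseModel, Field


class Person(BaseModel):
    """Information to extract about one person (illustrative schema)."""

    name: str = Field(description="The person's full name")
    age: int = Field(description="The person's age in years")
    job: str = Field(description="The person's occupation")
    favorite_color: str = Field(description="The person's favorite color")


# The descriptions are included in the generated JSON schema, which is what
# the model receives when the class is used as a structured-output format.
schema = Person.model_json_schema()
print(schema["properties"]["age"]["description"])

# Validate a JSON string like one a model might return.
person = Person.model_validate_json(
    '{"name": "Alice", "age": 30, "job": "Engineer", "favorite_color": "blue"}'
)
print(person.name, person.age)
```

Validation also catches type errors: a response with `"age": "thirty"` would raise a `ValidationError` instead of silently producing bad data.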

Combining structured output with probability aggregation makes information extraction more informative: each extracted field comes with an estimate of how confident the model was in it. Using Pydantic and structured-logprobs, the video walks through this confidence assessment step by step in Python.

Image copyright YouTube

Watch How to Measure LLM Confidence: Logprobs & Structured Output on YouTube

Viewer Reactions for How to Measure LLM Confidence: Logprobs & Structured Output

- Positive feedback on the teaching style and knowledge sharing
- A viewer from Pakistan sending love
- A comment about an error message regarding a 'NoneType' object attribute mentioned in the video
- A request for help with a specific code snippet
- Appreciation for the content shared in the video
- Gratitude for the information provided
- A mention of Canada
- A positive comment on the video
- A compliment on the teaching approach
- A report of an error encountered while running a code snippet

Related Articles

Building a Stock Prediction Tool: PyTorch, FastAPI, React & Warp Tutorial

NeuralNine builds a stock prediction tool using PyTorch, FastAPI, React, and Warp. The tutorial covers training the model, building the backend, and deploying the application with Docker.

Exploring Arch Linux: Customization, Updates, and Troubleshooting Tips

NeuralNine explores the switch to Arch Linux for cutting-edge updates and customization, detailing the manual setup process, troubleshooting tips, and the benefits of the Arch User Repository.

Master Application Monitoring: Prometheus & Grafana Tutorial

Learn how to monitor a Python application professionally with Prometheus and Grafana, with NeuralNine. The tutorial walks through setting up a Flask app, tracking metrics, handling exceptions, and visualizing the data.

Mastering Logistic Regression: Python Implementation for Precise Class Predictions

NeuralNine explores logistic regression, a classification algorithm that outputs the probability that an input belongs to a class. The video covers the model parameters and the sigmoid function, then implements the algorithm in Python.