Mastering Conversational Memory in Chatbots with LangChain 0.3

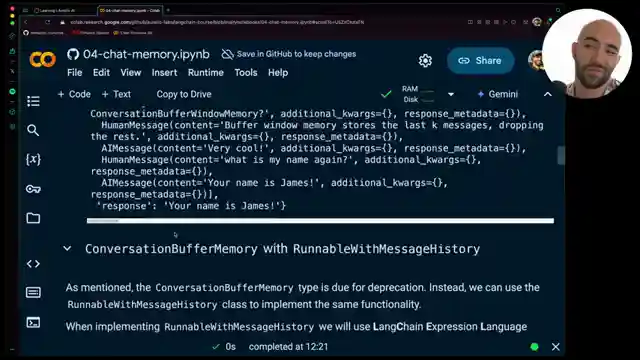

In this LangChain episode, the team explores conversational memory, a core component of chatbots and agents. They trace how LangChain's chat memory components have evolved and explain why the older conversational memory utilities, which are deprecated in the latest version, 0.3, are still worth understanding. Without memory, a chatbot cannot recall earlier turns of a conversation, so each reply is produced in isolation and the exchange feels robotic rather than conversational. The team dissects four memory types, each handling the conversation history differently: ConversationBufferMemory keeps every message verbatim, ConversationBufferWindowMemory keeps only the most recent messages, ConversationSummaryMemory condenses the conversation into a running summary written by the LLM, and ConversationSummaryBufferMemory keeps recent messages verbatim while summarizing older ones.
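To make the differences concrete, here is a minimal plain-Python sketch of two of these ideas: a buffer that keeps every message, and a summary buffer that folds older messages into a running summary once a budget is exceeded. It is not LangChain's API. The class names `BufferMemory` and `SummaryBufferMemory`, the word-count budget (the real summary buffer class works with a token limit), and the `toy_summarize` function standing in for an LLM summarization call are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical plain-Python illustration; this is NOT LangChain's API.
Message = tuple[str, str]  # (role, content)


@dataclass
class BufferMemory:
    """Buffer idea: keep every message verbatim."""
    messages: list[Message] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append((role, content))

    def context(self) -> list[Message]:
        return list(self.messages)


@dataclass
class SummaryBufferMemory:
    """Summary-buffer idea: recent messages verbatim, older ones summarized."""
    summarize: Callable[[str, list[Message]], str]  # stands in for an LLM call
    max_words: int = 50  # hypothetical budget; the real class counts tokens
    summary: str = ""
    messages: list[Message] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append((role, content))
        evicted: list[Message] = []
        # Move the oldest messages out until the verbatim part fits the budget,
        # always keeping at least the newest message.
        while len(self.messages) > 1 and self._word_count() > self.max_words:
            evicted.append(self.messages.pop(0))
        if evicted:
            self.summary = self.summarize(self.summary, evicted)

    def _word_count(self) -> int:
        return sum(len(content.split()) for _, content in self.messages)

    def context(self) -> list[Message]:
        # The summary (if any) is sent as a system message before recent turns.
        prefix = [("system", f"Summary so far: {self.summary}")] if self.summary else []
        return prefix + list(self.messages)


def toy_summarize(previous: str, evicted: list[Message]) -> str:
    # Toy stand-in for an LLM: just record what the evicted turns said.
    notes = "; ".join(f"{role}: {content[:20]}" for role, content in evicted)
    return f"{previous}; {notes}" if previous else notes


memory = SummaryBufferMemory(summarize=toy_summarize, max_words=4)
memory.add("human", "my name is James")
memory.add("ai", "nice to meet you")
print(memory.context())
# [('system', 'Summary so far: human: my name is James'), ('ai', 'nice to meet you')]
```

A pure summary memory is the limiting case where almost everything is folded into the summary; a plain buffer is the case where nothing is.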

Moving from these outdated methods to the current RunnableWithMessageHistory approach, the team rewrites each memory type for modern LangChain. They set up a system prompt and wrap the chain in the RunnableWithMessageHistory class, which inserts the stored chat history into every call. Each history is stored under a session ID, so separate conversations, for example those of different users, never get mixed up. The demonstration shows that the new approach keeps the behavior of the old memory classes while following current LangChain conventions.
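The flow that RunnableWithMessageHistory automates can be sketched without LangChain. In LangChain 0.3 the class lives in `langchain_core.runnables.history`, takes the chain plus a `get_session_history` callable, and receives the session ID at call time through `config={"configurable": {"session_id": ...}}`. The plain-Python version below is a stand-in under stated assumptions, not that class: a dictionary maps session IDs to message lists, and `chat` builds the prompt (system message, past turns, new input), calls a model, and stores both sides of the turn. `SYSTEM_PROMPT`, `chat`, and `echo_llm` are hypothetical names, and `echo_llm` is a stub in place of a real chat model.

```python
from collections import defaultdict
from typing import Callable

# Hypothetical plain-Python sketch of the flow RunnableWithMessageHistory
# automates; it does not use or reproduce LangChain's classes.
Message = tuple[str, str]  # (role, content)

SYSTEM_PROMPT = "You are a helpful assistant."  # hypothetical prompt

# One message list per session ID, so conversations never mix.
_store: dict[str, list[Message]] = defaultdict(list)


def get_session_history(session_id: str) -> list[Message]:
    return _store[session_id]  # creates an empty history on first use


def chat(session_id: str, user_input: str,
         llm: Callable[[list[Message]], str]) -> str:
    history = get_session_history(session_id)
    # Prompt = system message, then past turns, then the new user message.
    prompt = [("system", SYSTEM_PROMPT), *history, ("human", user_input)]
    reply = llm(prompt)
    # Store both sides of the exchange for the next call in this session.
    history.append(("human", user_input))
    history.append(("ai", reply))
    return reply


def echo_llm(prompt: list[Message]) -> str:
    # Stub model: reports how many earlier messages it was given.
    return f"I can see {len(prompt) - 2} earlier messages."


print(chat("alice", "Hi, I'm Alice.", echo_llm))   # I can see 0 earlier messages.
print(chat("alice", "What's my name?", echo_llm))  # I can see 2 earlier messages.
print(chat("bob", "Hello!", echo_llm))             # I can see 0 earlier messages.
```

Because Bob's session has its own list, his first message arrives with no history even though Alice has already spoken twice.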

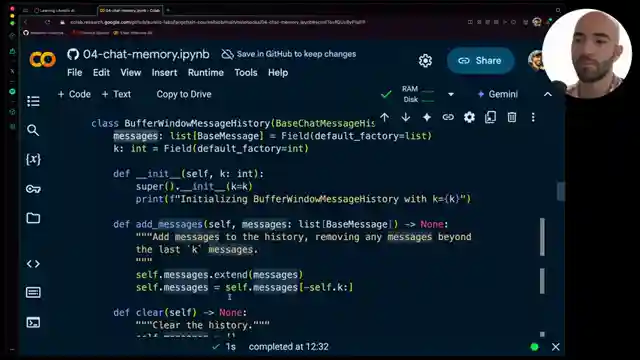

The video also reimplements ConversationBufferWindowMemory, which keeps only the most recent messages. Sending fewer messages to the model reduces both response time and cost, but anything outside the window is forgotten, so the window size is a trade-off between how much context the model sees and how efficient each call is. Throughout, the team contrasts each deprecated class with its updated equivalent and weighs how well each preserves the context of a conversation.
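A window memory is simple to sketch in plain Python with `collections.deque`, whose `maxlen` argument drops the oldest entry once the window is full. This is a hypothetical illustration of the idea rather than LangChain's ConversationBufferWindowMemory or the video's rewrite; `WindowHistory` and its methods are made-up names, and `k` here counts individual messages.

```python
from collections import deque

# Hypothetical illustration of window memory; not LangChain's API.
Message = tuple[str, str]  # (role, content)


class WindowHistory:
    """Keeps only the k most recent messages; older ones are forgotten."""

    def __init__(self, k: int) -> None:
        # A deque with maxlen discards its oldest item when a new one is
        # appended to a full deque.
        self.messages: deque[Message] = deque(maxlen=k)

    def add(self, role: str, content: str) -> None:
        self.messages.append((role, content))

    def context(self) -> list[Message]:
        # This is all the model sees, so prompt size stays bounded by k.
        return list(self.messages)


history = WindowHistory(k=4)
for i in range(3):
    history.add("human", f"question {i}")
    history.add("ai", f"answer {i}")
print(history.context())
# [('human', 'question 1'), ('ai', 'answer 1'), ('human', 'question 2'), ('ai', 'answer 2')]
```

Only the last two exchanges reach the model, so the prompt stays the same size however long the conversation runs; the first exchange is gone for good.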

Image copyright Youtube

Watch Conversational Memory in LangChain for 2025 on YouTube

Viewer Reactions for Conversational Memory in LangChain for 2025

Prompt caching using ChatBedrockConverse and ChatPromptTemplate

Article and code for further information: https://www.aurelio.ai/learn/langchain-conversational-memory

Related Articles

Exploring AI Agents and Tools in LangChain: A Deep Dive

LangChain explores AI agents and tools, which extend what language models can do. The video covers creating tools, building agents, and executing tools in parallel.

Mastering AI Prompts: LangChain's Guide to Optimal Model Performance

LangChain explores the role of prompts in AI models, guiding users through structuring effective prompts and invoking models. The video also touches on few-shot prompting for smaller models.

Enhancing AI Observability with LangSmith

LangSmith, part of the LangChain ecosystem, offers observability for LLMs and agents. It is simple to set up, tracks activity, and provides useful insights with minimal effort. An API key gives access to tracing projects and detailed run information. Observability can be extended by making functions traceable and using LangSmith's filtering options.