Enhancing LLM Performance: IBM Explains Retrieval-Augmented Fine-Tuning (RAFT)

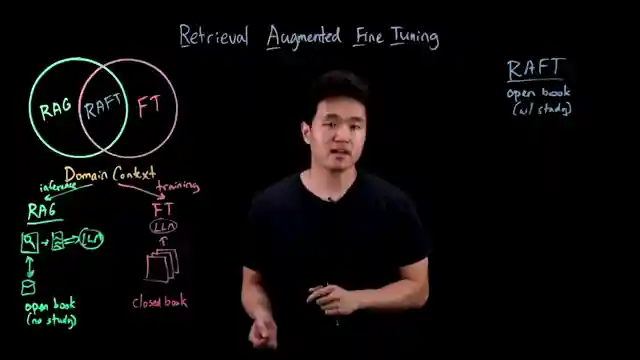

In this video from the IBM Technology channel, we look at retrieval-augmented fine-tuning (RAFT), an approach that combines the strengths of retrieval-augmented generation (RAG) and fine-tuning to improve LLM performance in specialized domains. Developed by researchers at UC Berkeley, RAFT uses a particular fine-tuning method to improve RAG performance within a specific domain. Traditional RAG supplies context at inference time: a retriever searches a vector database for documents relevant to the query and passes them to the model. Fine-tuning takes a different route, supplying context at training time through a large labeled dataset that builds domain-specific knowledge into a pre-trained LLM.
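To make the inference-time half of that contrast concrete, here is a minimal sketch of RAG-style retrieval. It is illustrative only: the bag-of-words `embed` function stands in for a real embedding model, the in-memory list stands in for a vector database, and every name (`embed`, `cosine`, `retrieve`, `build_rag_prompt`) is hypothetical rather than taken from the video.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts (assumption; a real system uses a learned model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Place the retrieved documents in the prompt as context for the model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
```

The model's weights never change here; all domain knowledge arrives through the prompt, which is why answer quality depends on how well the model uses the retrieved text.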

Picture this: fine-tuning is like preparing for a closed-book exam, where you must memorize all the material beforehand because you can't rely on external resources. RAG, on the other hand, is like taking an open-book exam without studying, hoping that access to the resources will save the day. RAFT sets you up for an open-book exam that you have actually prepared for: the model learns how to use external documents effectively to generate answers. It's the old adage of teaching a man to fish rather than handing him a fish. RAFT trains the model to find and use the relevant information for itself rather than simply supplying it with answers.

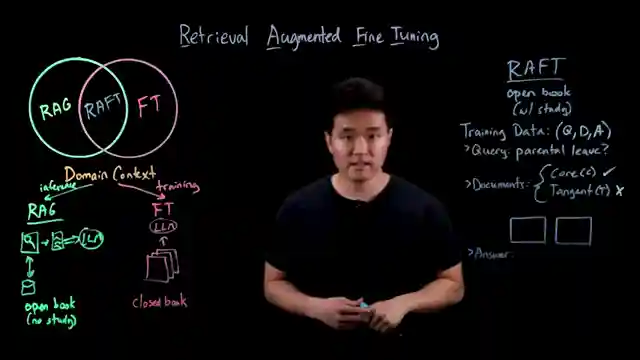

Implementing RAFT starts with a training dataset made up of queries, sets of documents, and answers. Each document set mixes core documents, which are relevant to the query, with tangent documents, which are not, so the model learns to pick out the relevant information and disregard the rest. Some document sets contain no relevant documents at all, which teaches the model when to rely on its intrinsic knowledge and when to admit uncertainty, reducing errors. The answers use chain-of-thought reasoning that works through the core documents step by step, which improves transparency and reduces overfitting. The result is a scalable, robust model suited to enterprise tasks.
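The dataset construction described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the method's reference implementation: the names `RaftExample` and `build_raft_example`, the `num_docs` parameter, and the `p_core` probability (the chance that a document set includes its core documents) are all hypothetical, and the chain-of-thought answer is assumed to be written elsewhere and passed in as a string.

```python
import random
from dataclasses import dataclass


@dataclass
class RaftExample:
    query: str
    documents: list[str]
    answer: str      # chain-of-thought answer, supplied by the caller
    has_core: bool   # False when the set contains only tangent documents


def build_raft_example(
    query: str,
    core_docs: list[str],
    tangent_pool: list[str],
    answer: str,
    rng: random.Random,
    num_docs: int = 4,      # assumed size of each document set
    p_core: float = 0.8,    # assumed share of sets that keep the core documents
) -> RaftExample:
    """Assemble one training example from core and tangent documents."""
    include_core = rng.random() < p_core
    if include_core:
        # Fill the remaining slots with tangent documents.
        n_tangent = min(max(num_docs - len(core_docs), 0), len(tangent_pool))
        docs = list(core_docs) + rng.sample(tangent_pool, n_tangent)
    else:
        # No relevant document: the model must fall back on what it knows
        # or admit uncertainty.
        docs = rng.sample(tangent_pool, min(num_docs, len(tangent_pool)))
    rng.shuffle(docs)  # avoid teaching the model that position signals relevance
    return RaftExample(query, docs, answer, include_core)
```

Calling `build_raft_example` once per query, with a seeded `random.Random`, yields a reproducible dataset in which most examples contain core documents and the rest contain only tangent ones.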

Image copyright Youtube


Watch "What is Retrieval-Augmented Fine-Tuning (RAFT)?" on YouTube

Viewer Reactions for What is Retrieval-Augmented Fine-Tuning (RAFT)?

IBM drops potential secret to unlocking generically useful AI

Hybrid approach mentioned

Question about loss function in fine-tuning approach

RAFT usage and comparison to CAG

Missed opportunity to call it FART
