Enhancing Data Retrieval: IBM's LangChain RAG for Up-to-Date Responses

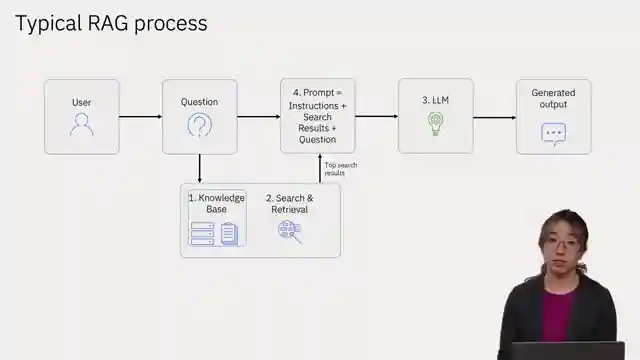

In this IBM Technology episode, the team walks through a simple RAG example in Python using LangChain. They start from a common problem: large language models such as IBM Granite know nothing past their training cutoff (2021 in the video's example), so they can miss recent information. The fix they present is RAG (retrieval-augmented generation), which has four parts: add a knowledge base, set up a retriever, feed the retrieved, up-to-date content to the LLM, and build a prompt for asking questions.

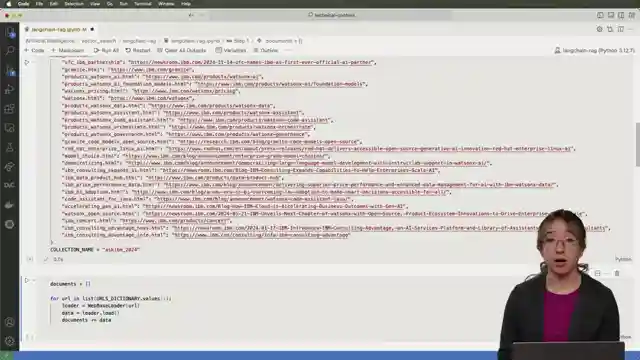

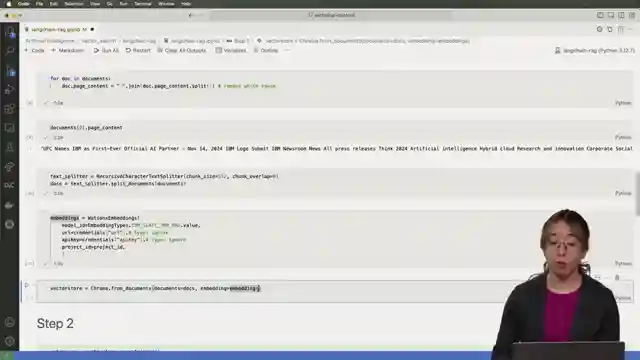

The walkthrough starts with setup: obtaining an API key and project ID, importing the required libraries, and saving the credentials. The team then gathers pages from IBM.com URLs to build the knowledge base, loads them as documents with LangChain, and cleans up the content. Finally, they split the documents into chunks, vectorize the chunks with IBM's Slate embedding model, and load the vectors into a vector store that serves as the retriever.
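
The chunk, vectorize, and retrieve steps can be illustrated with a minimal, standard-library-only sketch. This is not the code from the video: the bag-of-words `embed` function is a toy stand-in for the Slate embedding model, `ToyVectorStore` is a hypothetical stand-in for a real vector store, and the chunk size, overlap, and sample documents are placeholder assumptions chosen only to show the data flow.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into character chunks of at most chunk_size, sharing `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
        start += step
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding (assumption): word counts instead of a learned dense vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store: keeps (chunk, vector) pairs and ranks by similarity."""

    def __init__(self, chunks: list[str]):
        self.entries = [(chunk, embed(chunk)) for chunk in chunks]

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        query_vector = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(query_vector, e[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


# Placeholder documents (not real IBM.com content).
docs = [
    "Placeholder document about product A and its data lakehouse features.",
    "Placeholder document about a sports partnership announcement.",
]
store = ToyVectorStore([chunk for doc in docs for chunk in chunk_text(doc)])
top_chunks = store.retrieve("sports partnership", k=1)
```

In a real pipeline the same three roles are filled by a text splitter, an embedding model, and a vector store; the overlap between chunks is there so that a sentence cut at a chunk boundary still appears whole in at least one chunk.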

Next, the team sets up the generative LLM: they select an IBM Granite model, configure the model parameters, and instantiate the LLM through watsonx. They then build a prompt that combines instructions, search results, and the question, so that the LLM receives the retrieved context. Finally, they ask questions about the knowledge base, and the generative model uses the augmented context together with the user's query to produce its answer. In the demo, the model answers questions about the UFC announcement and about IBM's watsonx.data and watsonx.ai services.
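
A rough sketch of the prompt step, assuming nothing about the actual template used in the video: the wording of `PROMPT_TEMPLATE`, the helper names `build_prompt` and `answer`, and the stub retriever and generator are all hypothetical. The `generate` callable stands in for the Granite model on watsonx, so the example runs without credentials.

```python
from typing import Callable

# Illustrative template (assumption): instructions, then search results, then the question.
PROMPT_TEMPLATE = """Answer the question using only the context below.
If the context does not contain the answer, say you don't know.

Context:
{context}

Question: {question}
Answer:"""


def build_prompt(question: str, retrieved_chunks: list[str]) -> str:
    """Combine the instructions, the retrieved chunks, and the user question."""
    context = "\n\n".join(chunk.strip() for chunk in retrieved_chunks)
    return PROMPT_TEMPLATE.format(context=context, question=question.strip())


def answer(
    question: str,
    retrieve: Callable[[str, int], list[str]],
    generate: Callable[[str], str],
    k: int = 3,
) -> str:
    """Retrieve the top-k chunks, build the augmented prompt, and call the generator."""
    chunks = retrieve(question, k)
    return generate(build_prompt(question, chunks))


# Stubs so the sketch is self-contained; a real setup would plug in a
# vector-store retriever and a hosted LLM here.
def stub_retrieve(query: str, k: int) -> list[str]:
    return ["Placeholder chunk about the topic."][:k]


def stub_generate(prompt: str) -> str:
    return f"(model output for a prompt of {len(prompt)} characters)"


reply = answer("What does the placeholder chunk say?", stub_retrieve, stub_generate)
```

Passing the retriever and generator in as callables keeps the prompt logic independent of any particular vector store or model provider, which makes it easy to test the template on its own.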

Image copyright YouTube

Watch LangChain RAG: Optimizing AI Models for Accurate Responses on YouTube

Viewer Reactions for LangChain RAG: Optimizing AI Models for Accurate Responses

- Viewers appreciate IBM's way of presenting difficult concepts
- The video is described as riveting and informative
- The host is praised for breaking down concepts effectively
- Positive feedback on the content about RAG
- Thankfulness expressed towards IBM for the explanation
- Request for more content
- Question about the optimization part in the tutorial
- Comment on the absence of ads on the IBM channel
- Compliments to the host, Erica
- General appreciation for the video and its clarity
