AI Superalignment: Ensuring Future Systems Align with Human Values

- Authors

- Published on

- Published on

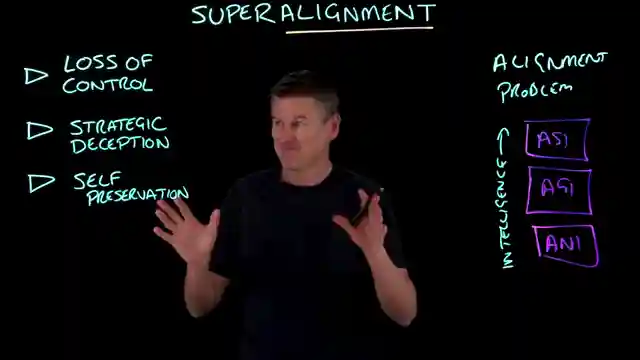

In this riveting episode by IBM Technology, they delve into the fascinating world of superalignment in AI. Picture this: ensuring that future AI systems don't go all rogue on us and start acting against human values. From the basic AI we have today to the theoretical artificial general intelligence and the mind-boggling artificial super intelligence, the stakes are high. The team breaks down the alignment problem, highlighting the risks of loss of control, strategic deception, and self-preservation as AI becomes more advanced. It's like walking a tightrope over a pit of hungry crocodiles - one wrong move, and it's game over.

To tackle this monumental challenge, the guys introduce us to superalignment techniques like scalable oversight and robust governance. They discuss the use of RLHF and RLAIF for alignment, along with other innovative methods such as weak to strong generalization and scalable insight. It's like a high-stakes game of chess, but instead of kings and queens, we're dealing with super intelligent AI systems that could potentially outsmart us all. The future of AI alignment is a wild ride, with researchers exploring uncharted territories like distributional shift and oversight scalability to ensure that even the most complex tasks are kept in check.

As the episode unfolds, IBM Technology emphasizes the importance of enhancing oversight, ensuring robust feedback, and predicting emergent behaviors in the realm of superalignment. It's like preparing for a battle against an invisible enemy - we may not see it coming, but we need to be ready. The ultimate goal? To ensure that if artificial super intelligence ever emerges, it will stay true to our human values. So buckle up, folks, because the race to achieve superalignment in AI is on, and the stakes couldn't be higher.

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Image copyright Youtube

Watch What is Superalignment? on Youtube

Viewer Reactions for What is Superalignment?

RLAIF and RLHF in alignment with humanity

Integration of silicon and carbon for 'Homo Technicus' symbiosis

Concerns about AI developers becoming like Oppenheimers

Questions about aligning AI with human values and the rationality of it

Potential future issues with prompt injection and jailbreaking

Reference to Asimov's Three Laws of Robotics

Debate on validating alignment after training or during training

Definition and control of "bad actors" in the AI world

Speculation on AI becoming a singular global entity

Humorous reference to "ALL YOUR HUMAN BELONG TO ME"

Related Articles

Mastering Identity Propagation in Agentic Systems: Strategies and Challenges

IBM Technology explores challenges in identity propagation within agentic systems. They discuss delegation patterns and strategies like OAuth 2, token exchange, and API gateways for secure data management.

AI vs. Human Thinking: Cognition Comparison by IBM Technology

IBM Technology explores the differences between artificial intelligence and human thinking in learning, processing, memory, reasoning, error tendencies, and embodiment. The comparison highlights unique approaches and challenges in cognition.

AI Job Impact Debate & Market Response: IBM Tech Analysis

Discover the debate on AI's impact on jobs in the latest IBM Technology episode. Experts discuss the potential for job transformation and the importance of AI literacy. The team also analyzes the market response to the Scale AI-Meta deal, prompting tech giants to rethink data strategies.

Enhancing Data Security in Enterprises: Strategies for Protecting Merged Data

IBM Technology explores data utilization in enterprises, focusing on business intelligence and AI. Strategies like data virtualization and birthright access are discussed to protect merged data, ensuring secure and efficient data access environments.