Unveiling the 7 Billion Parameter Coding Marvel: All Hands Model


In this showcase, 1littlecoder looks at a new 7 billion parameter coding model that, according to the video, outperforms its 32 billion parameter predecessor on the SWE-bench benchmark by solving 37% of its coding tasks. Developed by All Hands, the model, named OpenHands, is built for programming tasks and offers a 128,000-token context window, which sets it apart from many competitors. The video reports that it surpasses models such as DeepSeek V3 and GPT-3 on SWE-bench, a notable result for a model of this size.

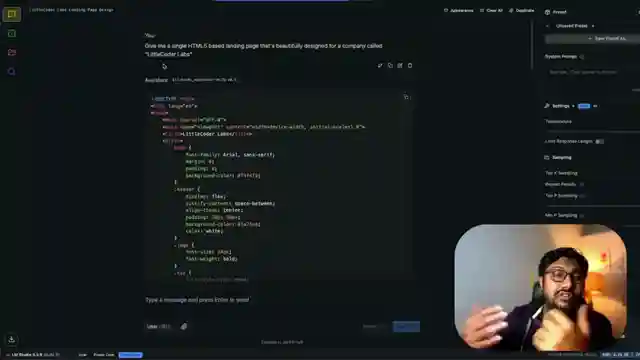

But don't be fooled by the large context window: practical local use rarely needs its full capacity, so you can run the model with a smaller window to save memory. The 7 billion parameter version is available on Hugging Face and ready for local deployment. In the video, the model generates HTML pages, p5.js animations, and Pygame code in Python, with results that are both impressive and practical. It also handles recent Stack Overflow questions, producing working solutions to pandas and regular expression problems.
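
To see why a smaller window is usually enough, here is a rough estimate of the key/value (KV) cache a transformer keeps for every token in context. This is a minimal sketch under stated assumptions: the layer count, KV-head count, and head dimension below are hypothetical values for a 7B-class model with grouped-query attention, not the published configuration of this model, and the estimate ignores the memory taken by the model weights themselves.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   context_tokens: int, bytes_per_value: int = 2) -> int:
    """Estimate KV-cache size: one key and one value vector per layer, per KV head, per token.

    bytes_per_value=2 assumes fp16/bf16 storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * context_tokens * bytes_per_value


GIB = 2**30

# Hypothetical 7B-class configuration (illustrative only, not the model's published config).
LAYERS, KV_HEADS, HEAD_DIM = 28, 4, 128

# Compare a few context lengths, up to the full 128K (131,072-token) window.
for tokens in (8_192, 32_768, 131_072):
    size = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, tokens)
    print(f"{tokens:>7} tokens -> {size / GIB:.2f} GiB of fp16 KV cache")
```

With these assumed numbers, the cache grows linearly from about 0.44 GiB at 8,192 tokens to 7 GiB at the full 131,072-token window, on top of the weights, which is why trimming the context length in a local runner often matters on consumer hardware.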

Because the model runs locally through LM Studio, developers get coding assistance without compromising performance or sending their code to a third party. 1littlecoder invites viewers to try the model and share feedback on how it performs in real use. So dive in and see how this model fits into your own coding workflow.
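
For readers who want to script against the model rather than chat in the UI, LM Studio can serve a loaded model through a local OpenAI-compatible server. The sketch below uses only the Python standard library and assumes the server is running on LM Studio's default port 1234; the model identifier and system prompt are hypothetical placeholders, so substitute the name LM Studio shows for the model you loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(model: str, prompt: str, temperature: float = 0.2) -> dict:
    """Build an OpenAI-style chat completion payload (system prompt is a placeholder)."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def ask_local_model(model: str, prompt: str, url: str = LMSTUDIO_URL) -> str:
    """POST the payload to the local server and return the first reply's text."""
    payload = json.dumps(build_chat_request(model, prompt)).encode("utf-8")
    req = urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

For example, `ask_local_model("openhands-lm-7b", "Write a regex that matches ISO dates such as 2024-05-01.")` returns the model's reply as a string; the identifier there is hypothetical. Keeping the payload builder separate from the HTTP call makes it easy to inspect or log the request before sending it.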

Image copyright Youtube


Watch This VIBECODING LLM Runs LOCALLY! 🤯 on Youtube

Viewer Reactions for This VIBECODING LLM Runs LOCALLY! 🤯

Appreciation for the information, analysis, and presentation

Comment on the training data being from October 2023

Impressed by the capabilities of the AI model

Mention of the ball and box

Question about the camera being used

Inquiry about hardware requirements

Mention of limitations shown in the video

Hope for the AI to create a fully working snake game

Positive feedback with emojis

Suggestion to use other frameworks before making adjustments with this AI model
