Unveiling Qwen 3: Multilingual Models with Enhanced Tool Use Capabilities

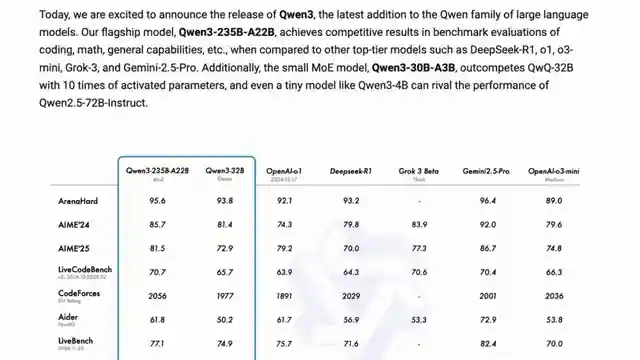

In a bold move reminiscent of a high-octane race start, the Qwen team has unleashed Qwen 3 onto the scene, a powerhouse family of models ranging from 0.6 billion to a staggering 235 billion parameters. This isn't just a release; it's a full-blown model extravaganza, with a lineup broad enough to impress even seasoned tech enthusiasts. Rather than trickling out models in stages, Qwen has gone all-in with a single massive drop, a sign of the team's confidence in what it has built.

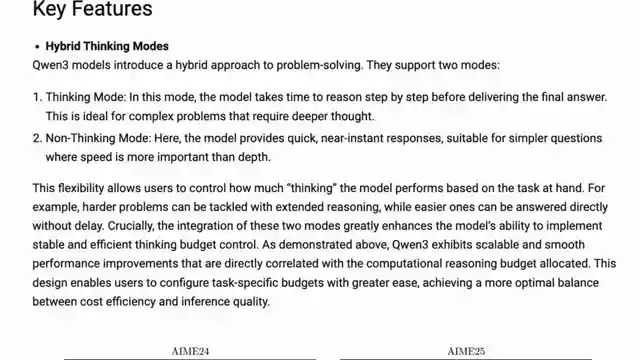

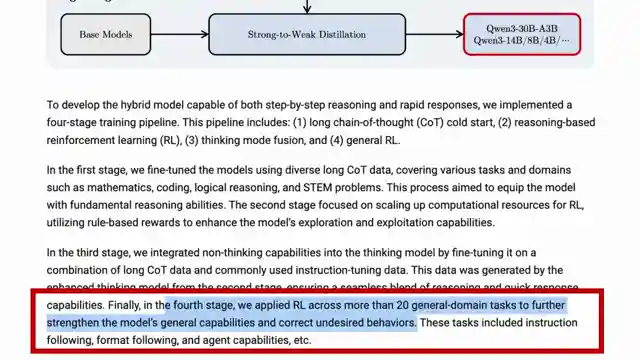

What sets these models apart, you ask? Well, strap in, because Qwen isn't holding back. From multilingual support for 119 languages to enhanced tool use capabilities, these models are geared to change the way we interact with AI. The introduction of thinking modes adds a dynamic element, letting users choose how much step-by-step reasoning the model does before it answers. It's like giving a supercar the ability to adjust its handling on the fly: pure innovation at its finest.
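To make the thinking-mode idea concrete, here is a minimal sketch of toggling it per request. It assumes the `/think` and `/no_think` soft-switch tags described in the Qwen3 model cards, which this article does not mention; `build_messages` is a hypothetical helper written for illustration, not part of any Qwen library.

```python
from typing import Dict, List

# Soft-switch tags as described in the Qwen3 model cards.
# Assumption: these come from the model cards, not from this article.
THINK_TAG = "/think"
NO_THINK_TAG = "/no_think"


def build_messages(prompt: str, thinking: bool) -> List[Dict[str, str]]:
    """Return a single-turn chat message list with a thinking-mode tag appended.

    Hypothetical helper: it only prepares the message payload and does not
    call any model or API.
    """
    tag = THINK_TAG if thinking else NO_THINK_TAG
    return [{"role": "user", "content": f"{prompt.strip()} {tag}"}]


if __name__ == "__main__":
    # Ask for step-by-step reasoning on a harder question.
    print(build_messages("How many prime numbers are below 100?", thinking=True))
    # Skip the reasoning phase for a quick lookup-style request.
    print(build_messages("Translate 'hello' into French.", thinking=False))
```

The resulting list can be passed to whatever chat client or chat template is in use. The tags only have an effect on models and serving setups that honor them.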

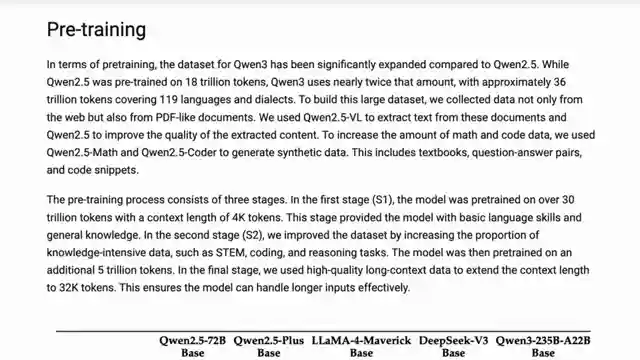

But wait, there's more. The training process behind these models is a feat in itself, with a mind-boggling 36 trillion tokens used for pre-training across various languages. The incorporation of synthetic data underscores Qwen's commitment to pushing the boundaries of AI development. Users who try Qwen 3 at chat.qwen.ai can switch between the thinking modes and watch the difference firsthand. It's not just about generating responses; it's about a new style of AI interaction that promises to shake up the status quo.

Image copyright YouTube

Watch Introducing the Qwen 3 Family on YouTube

Viewer Reactions for Introducing the Qwen 3 Family

Expectations for R2 are high due to the impressive metrics of the models

The formatting and structure of the answers generated by the model are praised

Questions about whether the model is multimodal

Appreciation for the informative video without clickbait

Request for a video on the best way to train a model

Excitement to see the model on Groq and SambaNova

Inquiries about the modality of the model

Discussion on prompt injection "attack" in relation to the model

Request for a solution to getting a "local Claude"

Issues with downloading quantized versions of models from Ollama, possibly due to internet or traffic problems
