Unveiling Gemini 2.5 Pro: Benchmark Dominance and Interpretability Insights

- Authors

- Published on

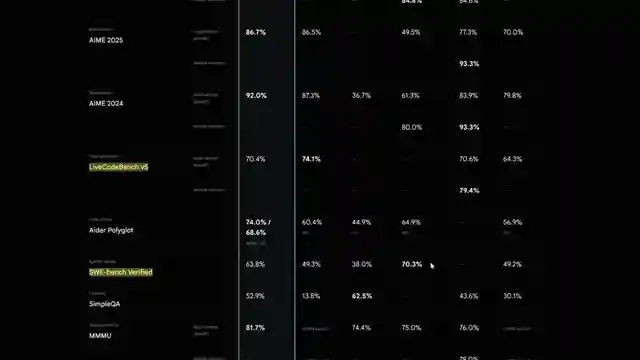

In a new update from AI Explained, the team covers the latest results for Gemini 2.5 Pro. They start with benchmarks, showing how well Gemini analyzes intricate sci-fi narratives, then walk through its performance across several benchmarks, highlighting its lead on tasks requiring extensive contextual understanding. They also underscore Gemini's practicality on Google AI Studio, along with a knowledge cutoff date more recent than its competitors'.

The team then turns to Gemini's coding capabilities, examining its performance on LiveCodeBench and SWE-bench Verified. A standout moment comes on the ML benchmark, where Gemini takes the top spot. The real showstopper is Gemini's performance on SimpleBench, where it outscores every other model with 51.6%, a new high score.

The team also looks at how Gemini approaches answering questions, showing its knack for reverse engineering solutions. The discussion then turns to a recent interpretability paper from Anthropic, which sheds light on the inner workings of language models when they face hard problems. With hints of exclusive content on their Patreon, AI Explained promises a deeper dive into the AI landscape and the latest developments in the field.

Image copyright YouTube


Watch Gemini 2.5 Pro - It’s a Darn Smart Chatbot … (New Simple High Score) on YouTube

Viewer Reactions for Gemini 2.5 Pro - It’s a Darn Smart Chatbot … (New Simple High Score)

Gemini 2.5 Pro's capabilities in coding and understanding complex tasks

Gemini 2.5 Pro's ability to handle MP3s and write detailed reviews

Comparison of Gemini 2.5 Pro with other models like Claude 3.7 Sonnet

User experiences with Gemini 2.5 Pro in various tasks and discussions

Comments on the intellectual capabilities of LLMs and model makers' focus on MoEs

Speculation on the consciousness and future advancements of AI

Appreciation for the transparency in benchmark evaluations

Excitement and anticipation for Google's advancements in AI

Curiosity about Gemini 2.5 Pro's features, such as analyzing YouTube videos

Comparison of Gemini 2.5 Pro with other AI chat tools and its writing style

Related Articles

AI Limitations Unveiled: Apple Paper Analysis & Model Recommendations

AI Explained dissects the Apple paper revealing AI models' limitations in reasoning and computation. They caution against relying solely on benchmarks and recommend Google's Gemini 2.5 Pro for free model usage. The team also highlights the importance of considering performance in specific use cases and shares insights on a sponsorship collaboration with Storyblocks for enhanced production quality.

Google's Gemini 2.5 Pro: AI Dominance and Job Market Impact

Google's Gemini 2.5 Pro dominates AI benchmarks, surpassing competitors like Claude Opus 4. CEOs predict no AGI before 2030. Job market impact and AI automation explored. Emergent Mind tool revolutionizes AI models. AI's role in white-collar job future analyzed.

Revolutionizing Code Optimization: The Future with AlphaEvolve

Discover the groundbreaking AlphaEvolve from Google DeepMind, a coding agent revolutionizing code optimization. From state-of-the-art programs to data center efficiency, explore the future of AI innovation with AlphaEvolve.

Google's Latest AI Breakthroughs: Veo 3, Gemini 2.5, and Beyond

Google's latest AI breakthroughs, from Veo 3 with sound in videos to the Gemini 2.5 Flash update, Gemini Live, and the Gemini Diffusion model, showcase their dominance in the field. Additional features like AI Mode, Jules for coding, and the Imagen 4 text-to-image model further solidify Google's position as an AI powerhouse. The SynthID Detector, Gemmaverse models, and SignGemma for sign language translation add depth to their impressive lineup. Stay tuned for the future of AI innovation!