Unlock Personalized Chats: ChatGPT's Memory Reference Feature Explained

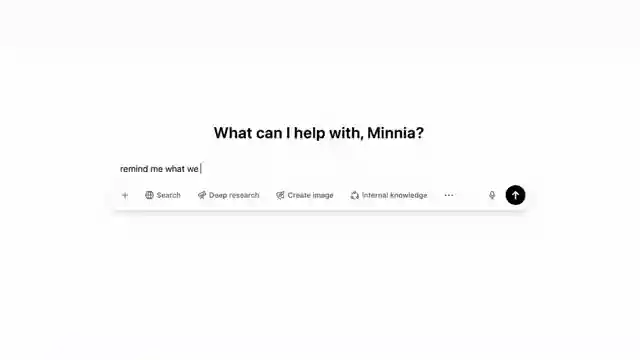

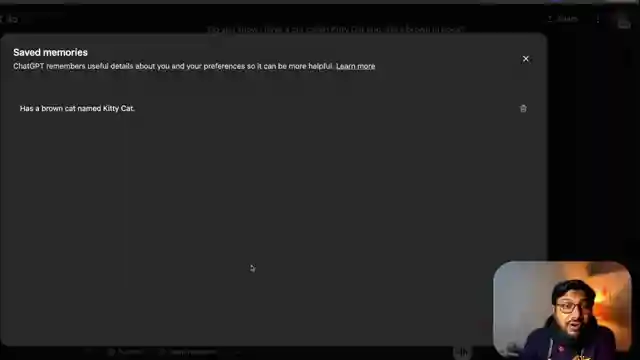

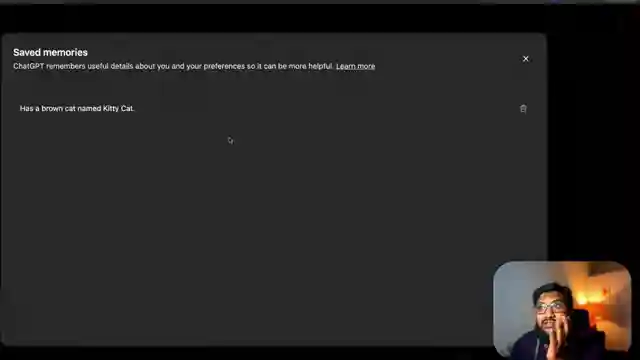

ChatGPT has rolled out a controversial feature called Memory Reference, and users are divided. The feature lets the AI draw on your past conversations to tailor its responses. Some find this intrusive, while others see it as a handy tool for a more personalized chat experience. Privacy concerns loom large, however, because ChatGPT now has access to more of users' personal details, sparking a debate over where to draw the line.

Memory Reference marks a significant shift in how ChatGPT operates: it now uses stored memories to tailor responses to user preferences. The move has drawn both praise and skepticism, with notable figures like Sam Altman voicing reservations about its potential implications. Users can take control of their data by managing memories, either deleting them entirely or keeping certain conversations out of the AI's reach.

For those who value privacy, the option to disable memory storage offers some relief by keeping sensitive information off-limits to ChatGPT. Enabling the feature, on the other hand, allows more tailored responses and personalized interactions. The AI's ability to adapt to and learn from past conversations blurs the line between convenience and intrusion, leaving users to weigh personalization against privacy.

Image copyright YouTube

Watch "The Internet Is Freaking Out Over ChatGPT’s New Feature, You can disable it!" on YouTube

Viewer Reactions for "The Internet Is Freaking Out Over ChatGPT’s New Feature, You can disable it!"

New feature allows ChatGPT to reference all past conversations

Tech companies use behavioral data for recommendation systems

Some users find the feature similar to ChatGPT from last year

Concerns about privacy and data usage by OpenAI

Some users say the feature is not actually new

Caution about potential risks of using temporary chats

Speculation about future features like profiles

Discussion about whether the feature is available to Plus users

Confusion about the purpose and impact of the feature

Mixed reactions to the feature, with some finding it annoying or wrong
