Privacy Breach Alert: Genomis Database Exposes Sensitive AI Files

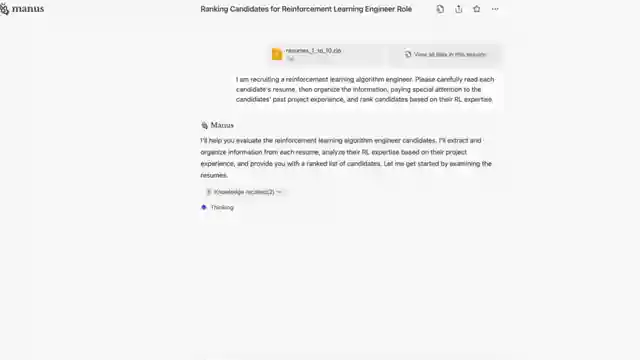

In a shocking discovery, AI Uncovered's report on Genomis, a South Korean AI company, describes a massive privacy breach. The company's database was left wide open to the public and contained a staggering 95,000 files of sensitive and disturbing content, ranging from graphic images to potentially illegal material. The revelation has alarmed the AI community and exposed how carelessly some companies handle the data their tools collect and generate. It is a wake-up call: the power of AI comes with a responsibility to protect what users entrust to it.

The Genomis incident is a stark reminder that our interactions with AI may not be as private as we think. Many users treat AI tools like personal diaries, sharing intimate details and thoughts without considering where that information ends up. This false sense of security can lead to disastrous consequences when a provider fails to put proper security measures in place. The assumption that our conversations with AI are confidential no longer holds, and it is time to rethink how we engage with these tools.

Major players in the AI world, such as ChatGPT and Gemini, store user data and may use it to improve their systems, which raises its own concerns about privacy and security. Users should stay informed about how their information is stored and used, and limit what they share accordingly. The Genomis breach underscores the need for caution when using AI tools, because the consequences of a privacy violation can be severe. It is a cautionary tale that calls for transparency and accountability from AI companies, and for a deeper understanding of how they safeguard user data.

Image copyright YouTube

Watch "2 MIN AGO: Thousands of AI Prompts Just Leaked – And What’s Inside Is Disturbing" on YouTube

Viewer Reactions for 2 MIN AGO: Thousands of AI Prompts Just Leaked – And What’s Inside Is Disturbing

Gratitude for support

Importance of early exposure for implementing countermeasures in technology development
