Nvidia's Llama 3.1 Nemotron Ultra 253B v1: Outperforming Competitors in AI Tests

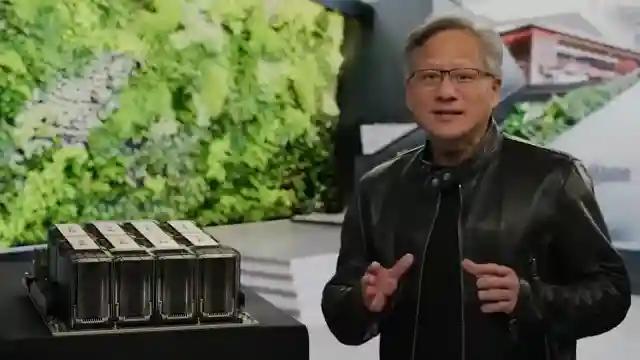

In a world where tech giants battle for AI supremacy, Nvidia quietly unleashes the Llama 3.1 Nemotron Ultra 253B v1 model, derived from Meta's Llama 3.1 405B. This beast flexes its 253 billion parameters, outshining the revered DeepSeek R1 in AI performance trials. It's like watching a scrappy underdog take down the reigning champ in a high-stakes showdown. Nvidia's model isn't just a pretty face; it's a powerhouse designed for tackling the toughest tasks and charming users with its natural responses.

Nvidia doesn't just drop the mic and walk away after unveiling the Llama 3.1 Nemotron Ultra 253B v1. They open the floodgates, sharing every detail from the code to the training data on Hugging Face. This move isn't just about bragging rights; it's a statement of Nvidia's commitment to collaboration and innovation. The model's ability to switch seamlessly between deep thinking and casual banter is like having a supercar that can transform into a luxury sedan at the push of a button.
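
For readers who want to see what that push of a button might look like in practice, here is a minimal sketch. It assumes the mode is selected through the system prompt and that the toggle strings are `detailed thinking on` and `detailed thinking off`; both details are assumptions to confirm against the model card on Hugging Face. The helper name `build_messages` is hypothetical and exists only for illustration.

```python
from typing import Dict, List

# Assumption: the reasoning toggle lives in the system prompt and uses these
# exact strings. Check the model card before relying on them.
REASONING_ON = "detailed thinking on"
REASONING_OFF = "detailed thinking off"


def build_messages(user_prompt: str, reasoning: bool) -> List[Dict[str, str]]:
    """Build a chat-style message list with the reasoning toggle in the system turn.

    build_messages is a hypothetical helper, not part of any Nvidia library.
    """
    system_prompt = REASONING_ON if reasoning else REASONING_OFF
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


# Same question, two modes: one for step-by-step reasoning, one for a quick reply.
deep = build_messages("Why is the sky blue?", reasoning=True)
casual = build_messages("Why is the sky blue?", reasoning=False)
```

The resulting lists follow the common role/content chat format, so they can be handed to whichever chat-completion client or chat template you already use.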

Behind the scenes, Nvidia's engineers are pulling out all the stops to fine-tune this AI marvel. Through a rigorous training regimen involving supervised learning, reinforcement learning, and knowledge distillation, they mold the model into a well-oiled machine ready to tackle any challenge. And boy, does it deliver. When the Llama 3.1 Nemotron Ultra 253B v1 kicks into reasoning mode, it's like watching a Formula 1 car shift into high gear on a straightaway. It smashes through tests, leaving competitors in the dust and proving that you don't need a mountain of parameters to dominate the AI game.
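
Knowledge distillation is the least familiar of those three ingredients, so a toy illustration may help. The sketch below shows the textbook form of a distillation loss: a student model is nudged to match the temperature-softened output distribution of a larger teacher. It is not Nvidia's training code, and the function names, the temperature value, and the example logits are all made up for illustration.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Convert logits to probabilities, softened by the given temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: List[float],
    student_logits: List[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Generic textbook formulation; an illustrative assumption, not the
    recipe used for this model.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical logits over a three-token vocabulary.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.6]
far_student = [0.5, 1.0, 4.0]

loss_close = distillation_loss(teacher, close_student)
loss_far = distillation_loss(teacher, far_student)
```

A student whose predictions sit close to the teacher's gets a small loss, while one that disagrees gets a large one, which is the signal that pulls the smaller model toward the bigger model's behavior.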

Image copyright Youtube

Watch Nvidia's New Llama-3.1 Nemotron Just Crushed DeepSeek at HALF the Size! on YouTube

Viewer Reactions for Nvidia's New Llama-3.1 Nemotron Just Crushed DeepSeek at HALF the Size!

Nvidia's Nemotron sets a new benchmark in AI efficiency

Smaller, smarter models are the future

Excitement to see its real-world impact

Comparison between 3.3 and 3.1
