Jetson Orin Nano DeepSeek Testing: Performance, Python Code, Image Analysis & More!

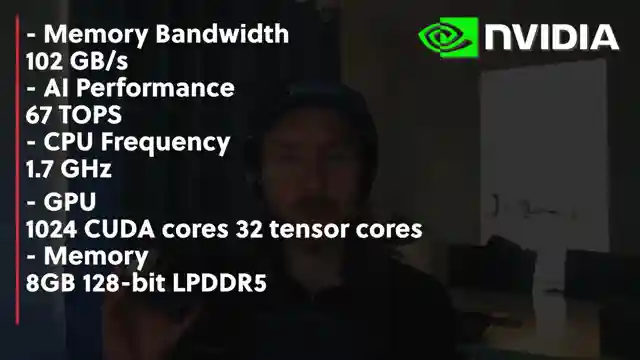

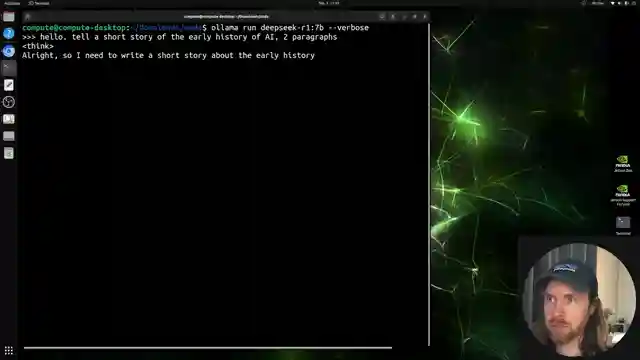

In today's episode, the All About AI team sets out to push the limits of NVIDIA's Jetson Orin Nano by running DeepSeek R1 on it. They load several DeepSeek R1 models using Ollama and show what this pint-sized powerhouse can do. Across a series of tests, they record each model's token generation speed on screen and come away impressed by the device's speed and efficiency.
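Token speed can be read from the metadata Ollama returns with each response. The sketch below is not the team's script; it assumes a local Ollama server at its default address and uses the `eval_count` and `eval_duration` (nanoseconds) fields of the `/api/generate` response. The helper names and the example model tag are illustrative assumptions.

```python
import json
import urllib.request


def tokens_per_second(eval_count: int, eval_duration_ns: int) -> float:
    """Convert Ollama's generation metadata into tokens per second.

    eval_count is the number of generated tokens; eval_duration_ns is the
    time spent generating them, in nanoseconds.
    """
    if eval_duration_ns <= 0:
        raise ValueError("eval_duration_ns must be positive")
    return eval_count / (eval_duration_ns / 1e9)


def measure(model: str, prompt: str, host: str = "http://localhost:11434") -> float:
    """Send one non-streaming request to a local Ollama server (assumed to be
    running) and return the generation speed in tokens per second."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode()
    request = urllib.request.Request(
        f"{host}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return tokens_per_second(body["eval_count"], body["eval_duration"])


if __name__ == "__main__":
    # Hypothetical usage; the model tag is an assumption and must be pulled first.
    print(f"{measure('deepseek-r1:1.5b', 'Why is the sky blue?'):.1f} tokens/s")
```

Running the same prompt against each model size, and again after changing the power mode, gives comparable numbers.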

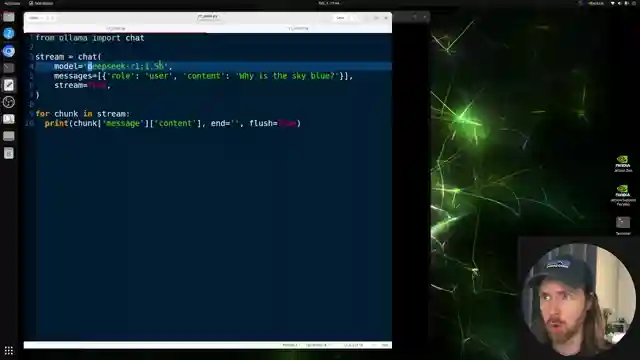

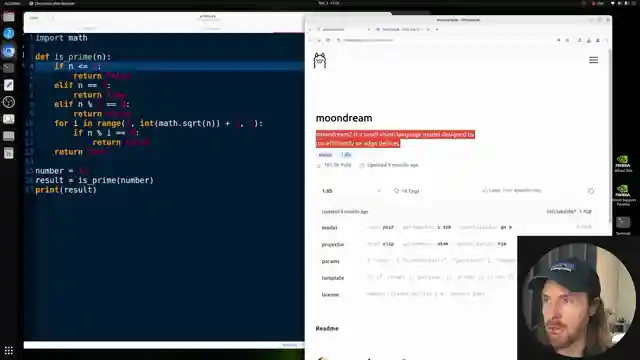

Switching gears, the team cranks up the device's power setting and reruns the 1.5B model, seeing a dramatic increase in token speed. They then move on to Python code on the Jetson, importing the Ollama library and testing prime number detection. But they don't stop there: combining the Moondream image model with DeepSeek R1 1.5B, they take on image analysis.
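As a rough illustration of both experiments, here is a minimal sketch that assumes the `ollama` Python package is installed and the `deepseek-r1:1.5b` and `moondream` models have been pulled. It is not the code from the video: the prompts, helper names, and the choice to check the model's answer against a local `is_prime` function are all assumptions made for this example.

```python
import re


def is_prime(n: int) -> bool:
    """Ground-truth check by trial division, used to grade the model's answer."""
    if n < 2:
        return False
    if n < 4:
        return True
    if n % 2 == 0:
        return False
    i = 3
    while i * i <= n:
        if n % i == 0:
            return False
        i += 2
    return True


def strip_thinking(text: str) -> str:
    """DeepSeek R1 emits its reasoning inside <think> tags; keep only the answer."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def parse_yes_no(text: str):
    """Return True/False for a yes/no answer, or None if neither word appears."""
    match = re.search(r"\b(yes|no)\b", strip_thinking(text).lower())
    return None if match is None else match.group(1) == "yes"


def build_reasoning_prompt(description: str, question: str) -> str:
    """Hypothetical prompt that hands an image description to the text model."""
    return f"Image description: {description}\n\nQuestion: {question}"


def ask_is_prime(n: int, model: str = "deepseek-r1:1.5b"):
    """Ask the model whether n is prime (requires a running Ollama server)."""
    import ollama  # imported lazily so the helpers above work without it

    prompt = f"Is {n} a prime number? Answer with only 'yes' or 'no'."
    response = ollama.chat(model=model, messages=[{"role": "user", "content": prompt}])
    return parse_yes_no(response["message"]["content"])


def describe_then_reason(image_path: str, question: str) -> str:
    """Moondream describes the image, then DeepSeek R1 reasons over the text."""
    import ollama

    description = ollama.chat(
        model="moondream",
        messages=[{"role": "user", "content": "Describe this image.", "images": [image_path]}],
    )["message"]["content"]
    answer = ollama.chat(
        model="deepseek-r1:1.5b",
        messages=[{"role": "user", "content": build_reasoning_prompt(description, question)}],
    )["message"]["content"]
    return strip_thinking(answer)


if __name__ == "__main__":
    for n in (97, 91):
        print(n, "model:", ask_is_prime(n), "truth:", is_prime(n))
```

Chaining the two models this way keeps each one small enough for the Jetson's memory: the vision model only produces text, and the reasoning model never sees the image itself.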

Finally, the team runs the DeepSeek model in a browser on the Jetson, showing that this device is not just a toy but a capable tool for AI exploration. The browser session shows off the integration of ChatGPT and hints at how much more the board could do. As the episode draws to a close, the team teases a giveaway for channel members and invites viewers to join in.

Image copyright YouTube


Watch DeepSeek R1 Running On 15W | NVIDIA Jetson Orin Nano SUPER on YouTube

Viewer Reactions for DeepSeek R1 Running On 15W | NVIDIA Jetson Orin Nano SUPER

Affordable hardware running models of up to 600 billion parameters

Sponsored video disclaimer suggestion

Not impressed by performance due to memory limit

Comparison of performance between Jetson and other setups

Concerns about the lack of speed for most LLM applications

Curiosity about different models' speeds at 25W

Difference in performance between micro SD card and NVMe SSD

NVIDIA Jetson Orin Nano as the default computer in schools

DeepSeek 7B model compared to a person's cat

Comparison of running models on different setups
