Hosting DeepSeek AI with Cloud Run GPUs: Flexibility and Scalability

In this episode from Google Cloud Tech, Lisa takes us on a fast-paced tour of hosting the DeepSeek AI model on Cloud Run with GPUs. She shows how Cloud Run lets you write code in any language and use any library, as long as everything is packaged into a container. Working from Cloud Shell in her Google Cloud project, Lisa experiments with and manages the project without leaving the browser.

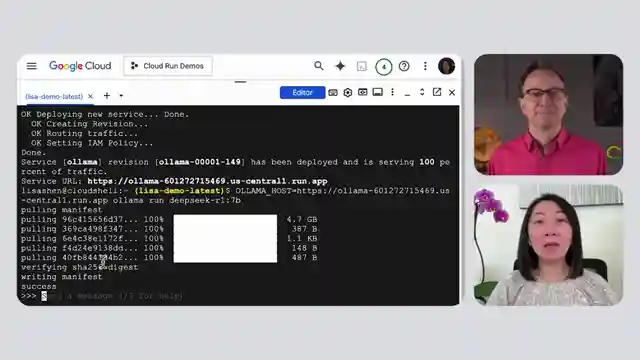

Lisa first installs the Ollama command-line tool, which makes it easy to download and run large language models such as DeepSeek. She then deploys the Ollama container as a new Cloud Run service with a GPU attached, so models are loaded from the internet on demand and inference runs on the GPU. To test DeepSeek, she sets the OLLAMA_HOST environment variable so the CLI talks to her service, then runs Ollama to download the roughly 5GB model. The whole process shows how little work it takes to serve the model from Cloud Run.
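To make this last step more concrete, here is a minimal Python sketch of talking to an Ollama server over HTTP once it runs as a Cloud Run service. It assumes Ollama's REST endpoint `POST /api/generate` and reads `OLLAMA_HOST` in a simplified way, falling back to the local default `http://localhost:11434`. The model tag `deepseek-r1:8b`, the example service URL, and the optional bearer token are assumptions for illustration, not details taken from the video. If your service requires authentication, a token from `gcloud auth print-identity-token` is one way to supply it.

```python
import json
import os
import urllib.request
from typing import Optional

# Assumption: illustrative tag for a ~5GB DeepSeek build; use the tag you actually pulled.
MODEL = "deepseek-r1:8b"


def ollama_base_url() -> str:
    """Resolve the Ollama server URL from OLLAMA_HOST (simplified; defaults to the local server)."""
    host = os.environ.get("OLLAMA_HOST", "http://localhost:11434")
    if not host.startswith(("http://", "https://")):
        host = "http://" + host  # e.g. "localhost:11434" -> "http://localhost:11434"
    return host.rstrip("/")


def build_generate_request(prompt: str, model: str = MODEL,
                           token: Optional[str] = None) -> urllib.request.Request:
    """Build a non-streaming POST /api/generate request for the Ollama REST API."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if token:
        # Hypothetical: an identity token for a Cloud Run service that requires auth.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        f"{ollama_base_url()}/api/generate", data=payload, headers=headers, method="POST"
    )


def generate(prompt: str, model: str = MODEL, token: Optional[str] = None) -> str:
    """Send the prompt and return the generated text from the JSON "response" field."""
    request = build_generate_request(prompt, model, token)
    # Generous timeout: a cold instance may still be loading the model onto the GPU.
    with urllib.request.urlopen(request, timeout=600) as response:
        return json.loads(response.read())["response"]
```

For example, with `OLLAMA_HOST` set to your service URL, `generate("Why is the sky blue?")` returns the model's full answer as one string, because streaming is turned off in the request body. The model must already have been pulled on the server for the call to succeed.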

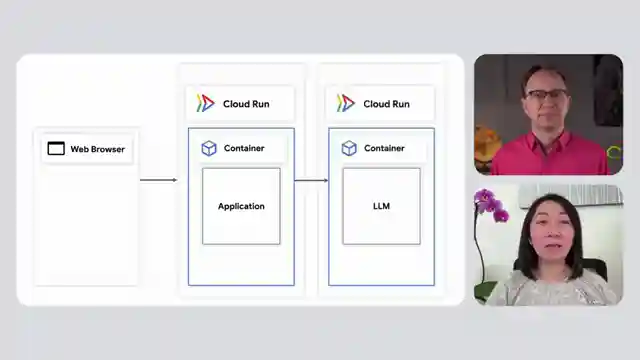

Lisa also points out that Cloud Run services can call Google's Vertex AI API instead, which avoids managing GPUs altogether. Whether you choose pre-built models or your own custom ones, Cloud Run gives you control over how your AI application runs.

The episode closes with a discussion of scaling. Cloud Run adds instances automatically when traffic spikes and removes them when traffic drops, so you do not pay for idle resources. Lisa also walks through the different ways to load models in production applications, each with its own advantages and trade-offs.
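The scaling behavior described above can be approximated with a little arithmetic. The sketch below is a simplified model, not Cloud Run's actual autoscaler, which also weighs CPU utilization and other signals. It assumes each instance handles up to `concurrency_per_instance` requests at once, so the instance count is the ceiling of in-flight requests divided by that limit, clamped between a minimum and a maximum. The function name and parameters are hypothetical.

```python
import math


def estimate_instances(concurrent_requests: int, concurrency_per_instance: int,
                       max_instances: int, min_instances: int = 0) -> int:
    """Simplified estimate of how many instances the in-flight requests need."""
    if concurrency_per_instance < 1:
        raise ValueError("concurrency_per_instance must be at least 1")
    if not 0 <= min_instances <= max_instances:
        raise ValueError("expected 0 <= min_instances <= max_instances")
    # Enough instances so that no instance exceeds its concurrency limit...
    needed = math.ceil(concurrent_requests / concurrency_per_instance)
    # ...but never fewer than the configured minimum or more than the maximum.
    return max(min_instances, min(needed, max_instances))
```

With the minimum at 0, no traffic means no instances, which mirrors the point about not paying for idle resources; the maximum acts as a cost ceiling during spikes. Since each new GPU instance has to load the model before serving, raising the minimum trades cost for fewer cold starts.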

Image copyright YouTube

Watch "How to host DeepSeek with Cloud Run GPUs in 3 steps" on YouTube

Viewer Reactions for How to host DeepSeek with Cloud Run GPUs in 3 steps

Viewer found the video insightful

Concerns raised about loading the model into memory and potential issues with Cloud Run

Viewer found the video helpful

Comment about the speakers being smooth

Positive emoji reaction 🌺🇹🇭🌺👍

Related Articles

Mastering Real-World Cloud Run Services with FastAPI and Muslim

Discover how Google developer expert Muslim builds real-world Cloud Run services using FastAPI, uvicorn, and Cloud Build. Learn about processing football statistics, deployment methods, and the power of FastAPI for seamless API building on Cloud Run. Elevate your cloud computing game today!

The Agent Factory: Advanced AI Frameworks and Domain-Specific Agents

Explore advanced AI frameworks like LangGraph and CrewAI on Google Cloud Tech's "The Agent Factory" podcast. Learn about domain-specific agents, coding assistants, and the latest updates in AI development. The ADK v1 release brings enhanced features for Java developers.

Simplify AI Integration: Building Tech Support App with Large Language Model

Google Cloud Tech simplifies AI integration by treating it as an API. They demonstrate building a tech support app using a large language model in AI Studio, showcasing code deployment with Google Cloud and Firebase Hosting. The app functions like a traditional web app, highlighting how easily AI can enhance user experiences.

Nvidia's Small Language Models and AI Tools: Optimizing On-Device Applications

Explore Nvidia's small language models and AI tools for on-device applications. Learn about quantization, NeMo Guardrails, and TensorRT for optimized AI development. Exciting advancements await in the world of AI with Nvidia's latest hardware and open-source frameworks.