Google's Gemini 2.0: Revolutionizing AI Accessibility

In a bold move that has drawn wide attention across the AI industry, Google has released Gemini 2.0 Flash Thinking, a new AI model that is turning heads. Offered for free during its beta phase, the model challenges the status quo and broadens access to advanced AI tools. Competitors such as OpenAI have long kept comparable capabilities behind expensive paid plans. Google's approach instead makes high-level AI available to anyone, not only to the few who can afford a subscription. The move is not just about cost; it levels the playing field and changes who can harness the power of AI.

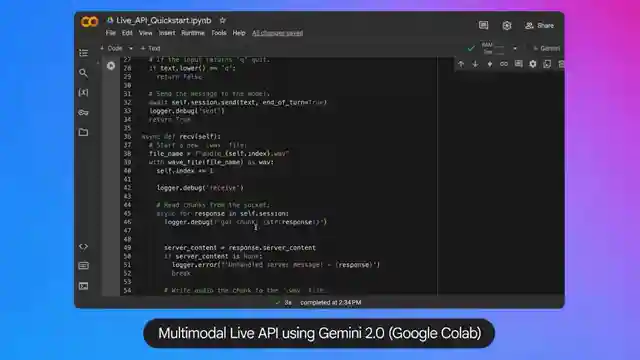

Gemini 2.0 is not just another run-of-the-mill AI model; it is built to handle extensive workloads efficiently. With reasoning capabilities, advanced multimodality, and native code execution, it works as a Swiss Army knife for developers and tech enthusiasts alike. What sets it apart is transparency in decision-making: the model exposes the reasoning steps behind its answers. That visibility builds trust in a field where black-box systems have long been the norm. With Gemini 2.0, Google is pushing boundaries and setting new standards in the AI landscape.

The implications of Gemini 2.0's release are significant, signaling a shift toward a more inclusive and competitive AI environment. By making high-performing AI tools freely available, Google lets startups, researchers, and small businesses tap into advanced AI without breaking the bank. Challenges remain, however. Benchmark scores do not always translate into flawless real-world performance, and convincing enterprises to adopt a free model may prove an uphill battle. The AI arms race is heating up, and Google's move forces competitors to rethink their strategies for a new era of AI accessibility and transparency.

Image copyright Youtube

Watch Google Releases FREE Gemini 2.0 Flash Thinking Model To Crush OpenAI’s Paid Plans! on YouTube

Viewer Reactions for Google Releases FREE Gemini 2.0 Flash Thinking Model To Crush OpenAI’s Paid Plans!

AI's impact on handmade products

Google's involvement in building trains and subways in the US

Speculation on Google's pricing strategy for new technology

The potential correction in the AI chip market due to the emergence of DeepSeek AI

The implications of DeepSeek's appearance on the tech world

The importance of software and algorithmic innovation in the AI field

Potential savings for American consumers and businesses in the AI market

Concerns and arguments about the dominance of Western chip providers

The long-term effects of DeepSeek's emergence on the AI chip market

The positive outcomes and potential benefits of DeepSeek's impact on the AI ecosystem
