Enhance AI Workloads with GKE Inference Gateway

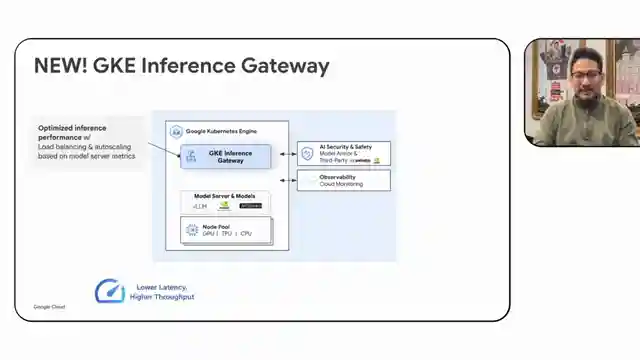

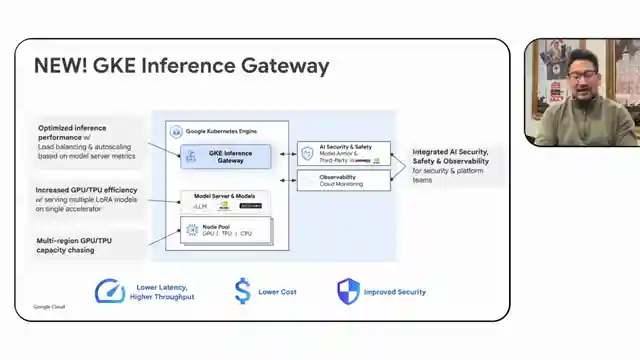

In this thrilling episode from Google Cloud Tech, the team unveils the GKE Inference Gateway, a tool designed to supercharge the serving of LLM workloads. The gateway is like the secret weapon every AI enthusiast dreams of. It offers load balancing tailored to LLM workloads and autoscaling based on model server metrics. Picture this: multiple models packed onto a shared GPU/TPU pool to make better use of capacity and lower costs. It's like having a turbo boost for your AI projects!
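The episode mentions autoscaling based on model server metrics but does not spell out a scaling rule. As a minimal sketch, assuming the model-server Pods are scaled by something like the Kubernetes Horizontal Pod Autoscaler, the HPA rule `desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric)` can be applied to a model-server metric such as the average number of queued requests per replica. The `desired_replicas` helper, the choice of metric, and the replica bounds are hypothetical; the 0.1 tolerance mirrors the HPA's default.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """HPA-style scaling rule driven by a model-server metric (hypothetical helper).

    current_metric: observed per-replica average, e.g. queued requests.
    target_metric:  per-replica average you want to maintain.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")

    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current size to avoid flapping.
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)

    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, proposed))


# 4 replicas averaging 15 queued requests against a target of 10 -> scale out.
print(desired_replicas(4, 15, 10))  # 6
```

Scaling on a queue-style metric rather than raw CPU usage reflects the idea in the video: the model server itself knows best how busy it is.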

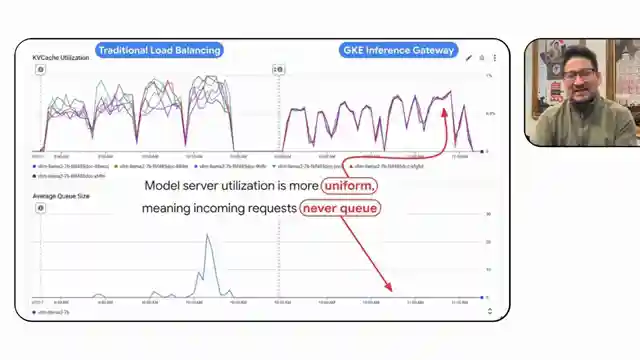

But wait, there's more! The GKE Inference Gateway doesn't stop at performance optimization. It integrates AI security guardrails to help protect your models from potential attacks, like a personal bodyguard for your AI models. It also routes traffic to the least-utilized GPU, which keeps the user experience smooth even under heavy load.
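To make "routes traffic to the least-utilized GPU" concrete, here is a minimal sketch of metric-aware replica selection. It assumes each model-server replica reports a request queue depth and a KV-cache utilization fraction; the `ReplicaMetrics` type, its field names, and the 0.9 saturation threshold are hypothetical choices for illustration, not the gateway's actual scoring logic.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ReplicaMetrics:
    name: str
    queue_depth: int      # requests waiting on this model server
    kv_cache_util: float  # fraction of KV cache in use, 0.0 to 1.0


def pick_replica(
    replicas: Sequence[ReplicaMetrics], kv_cache_limit: float = 0.9
) -> ReplicaMetrics:
    """Pick the least-loaded replica (hypothetical scoring)."""
    if not replicas:
        raise ValueError("no replicas available")

    # Prefer replicas whose KV cache is not close to saturation;
    # if every replica is saturated, fall back to considering all of them.
    candidates = [r for r in replicas if r.kv_cache_util < kv_cache_limit] or list(replicas)

    # Shortest queue wins; lower KV-cache utilization breaks ties.
    return min(candidates, key=lambda r: (r.queue_depth, r.kv_cache_util))


pool = [
    ReplicaMetrics("replica-a", queue_depth=3, kv_cache_util=0.50),
    ReplicaMetrics("replica-b", queue_depth=1, kv_cache_util=0.95),
    ReplicaMetrics("replica-c", queue_depth=1, kv_cache_util=0.40),
]
print(pick_replica(pool).name)  # replica-c
```

Filtering out near-saturated replicas before comparing queues keeps a momentarily short queue from drawing requests onto a server with little KV-cache headroom left.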

Now imagine a world where capacity constraints and regional issues are a thing of the past. With the GKE Inference Gateway, you can draw GPU and TPU capacity from multiple Google Cloud regions, making your AI services resilient to demand surges and regional capacity shortages. It's like having a teleportation device for your AI resources!

And the best part? The gateway is open and extensible, so you can customize it to fit your own needs. So buckle up and get ready to experience the future of AI inference with the GKE Inference Gateway from Google Cloud. It's time to take your AI projects to the next level!
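Drawing capacity from multiple regions comes down to falling back to another region when the preferred one has run out of accelerators. The sketch below assumes you already know how many free accelerators each region has; the `choose_region` helper, the `free_accelerators` mapping, and the capacity numbers are hypothetical, and the sketch illustrates the idea rather than the gateway's implementation.

```python
from typing import Mapping, Optional, Sequence


def choose_region(
    preferred_order: Sequence[str],
    free_accelerators: Mapping[str, int],
    needed: int = 1,
) -> Optional[str]:
    """Return the first region, in preference order, with enough free accelerators.

    Returns None when no region can satisfy the request.
    """
    for region in preferred_order:
        # Regions missing from the mapping are treated as having no capacity.
        if free_accelerators.get(region, 0) >= needed:
            return region
    return None


# Neither of the first two regions has 4 free accelerators,
# so the request spills over to europe-west4.
capacity = {"us-central1": 0, "us-east4": 2, "europe-west4": 8}
print(choose_region(["us-central1", "us-east4", "europe-west4"], capacity, needed=4))  # europe-west4
```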

Image copyright YouTube

Watch "Optimize model serving with GKE Inference Gateway" on YouTube
