Enhance AI Security with Model Armor: Google Cloud Tech

In this episode of Google Cloud Tech, we delve into Model Armor, a security layer for generative AI. Picture this: prompt injection and jailbreaking are the villains, trying to wreak havoc on your AI models. Model Armor is the superhero that swoops in to protect against unauthorized actions, data exposure, and malicious prompts. It's like having a trusty bodyguard for your AI, ensuring that only safe and authorized content interacts with your models.

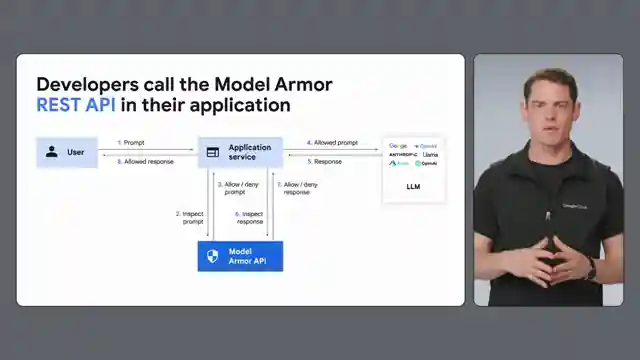

With Model Armor on the scene, developers can breathe easier knowing that their AI applications are guarded against these threats. Its deployment options range from applying one set of protection policies across multiple models to integrating with existing workloads. It also provides granular control over model interactions, so users can fine-tune settings and draw insights from analytics. It's like having the keys to a high-performance sports car, giving you the power to navigate the twists and turns of AI security with ease.

But the real magic happens when we see Model Armor in action during a live demo. It detects unsafe prompts, thwarts jailbreaking attempts, and flags malicious URLs, and it shows how the same layer can guard against sensitive data exposure. So, if you're ready to take your AI projects to the next level securely, look no further than Model Armor. Sign in to the Google Cloud Console and gear up to defend your AI models. Thank you, and drive safe!
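
To make the idea of a screening layer concrete, here is a minimal sketch of the kinds of checks the demo describes: unsafe prompts, jailbreak attempts, malicious URLs, and sensitive data. It is a toy illustration and not Model Armor's API. Every name in it (`screen_text`, `guarded_call`, the patterns, and the `malicious.example` blocklist entry) is a hypothetical stand-in, and a real service relies on far more than keyword rules.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, deliberately simple rules for illustration only.
JAILBREAK_PATTERNS = [
    re.compile(r"ignore (all|any|previous|prior) .*instructions", re.IGNORECASE),
    re.compile(r"pretend (you are|to be) .*(no|without) (rules|restrictions)", re.IGNORECASE),
]
BLOCKED_DOMAINS = {"malicious.example"}  # placeholder blocklist
URL_PATTERN = re.compile(r"https?://([^/\s]+)", re.IGNORECASE)
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")  # US SSN-shaped strings


@dataclass
class ScreeningResult:
    allowed: bool
    findings: list[str] = field(default_factory=list)


def screen_text(text: str) -> ScreeningResult:
    """Return which of the toy checks the text trips, if any."""
    findings = []
    if any(p.search(text) for p in JAILBREAK_PATTERNS):
        findings.append("prompt_injection_or_jailbreak")
    if any(host.lower() in BLOCKED_DOMAINS for host in URL_PATTERN.findall(text)):
        findings.append("malicious_url")
    if SSN_PATTERN.search(text):
        findings.append("sensitive_data")
    return ScreeningResult(allowed=not findings, findings=findings)


def guarded_call(prompt: str, model) -> str:
    """Screen the prompt, call the model only if it passes, then screen the response."""
    check = screen_text(prompt)
    if not check.allowed:
        return f"Blocked prompt: {', '.join(check.findings)}"
    response = model(prompt)
    check = screen_text(response)
    if not check.allowed:
        return f"Blocked response: {', '.join(check.findings)}"
    return response
```

Here `model` is any callable that takes a prompt string and returns a response string, so the wrapper can sit in front of an existing workload without changing it. Screening both directions reflects the two concerns raised above: malicious input reaching the model and sensitive data leaving it.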

Image copyright YouTube

Watch Model Armor: Protecting Generative AI from Threats and Misuse on YouTube

Viewer Reactions for Model Armor: Protecting Generative AI from Threats and Misuse

Security is crucial in the AI world

The video provides a nice explanation
