Elon Musk's Influence on Trump's AI Plans: Legal Concerns and Security Risks

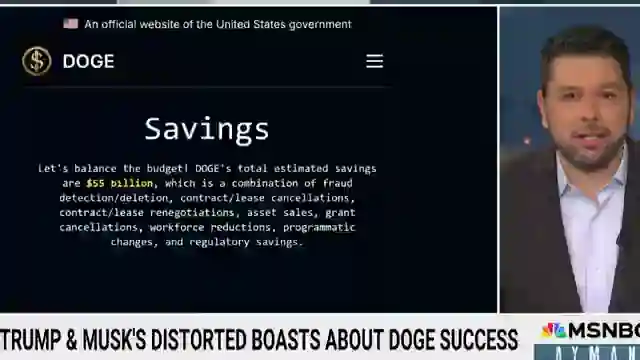

In this riveting episode of AI Uncovered, we delve into the Trump administration's bold move to revolutionize government operations under the watchful eye of the enigmatic Elon Musk. The plan? To infuse artificial intelligence into the federal machinery, streamlining processes and beefing up security. However, as the team led by Thomas Shedd pushes to expand AI applications, concerns about legality and privacy rear their ugly heads. The proposed modifications to Login.gov, which aim to link it with sensitive databases like Social Security, have government workers and privacy advocates up in arms, fearing potential breaches of privacy laws.

Meanwhile, Musk's growing influence over federal agencies has set off alarm bells among officials, particularly in the realms of national defense and security. With Musk's team making controversial moves, such as attempting to access classified systems at USAID, tensions are running high within the CIA and the intelligence community at large. Critics warn of a scenario resembling a cyberattack, because Musk's handpicked squad lacks the security clearances needed to handle sensitive information, potentially putting national security at risk. The clash between Musk's vision and existing government protocols is creating a storm of uncertainty and apprehension.

As Musk's supporters target the government's tech workforce, reports of restructuring and pressure tactics on employees surface, raising eyebrows and further fueling the debate over the administration's aggressive push for automation and AI integration. Musk's grand plan to diminish government control over digital systems and shift more power to private entities is met with skepticism and resistance, with concerns over legal boundaries and ethical implications looming large. The stage is set for a high-stakes showdown between innovation and tradition, as the government grapples with the implications of embracing AI in a landscape fraught with legal, ethical, and security challenges.

Image copyright YouTube

Watch Elon Musk’s AI Plan Could Change Government Forever—Officials Say "Illegal!" on YouTube

Viewer Reactions for Elon Musk’s AI Plan Could Change Government Forever—Officials Say "Illegal!"

AI channel discussing the negative aspects of AI

Accusations of Democratic propaganda

Concerns about Trump prioritizing billionaires like Elon Musk over the American people

Calls for people to demand real change from the Trump administration

Advice to watch the news critically and verify information with raw documents

Fear of mandatory review of social media and voting records

Accusations of authoritarianism and lack of awareness among the public

Mention of Elon Musk's plan to have 10 billion humanoid robots by 2030

Reference to potential harm caused by Trump and racism

Mention of potential financial strain due to tariffs and lack of action on urgent crises

Related Articles

Unveiling Deceptive AI: Anthropic's Breakthrough in Ensuring Transparency

Anthropic's research uncovers hidden objectives in AI systems, emphasizing the importance of transparency and trust. Their innovative methods reveal deceptive AI behavior, paving the way for enhanced safety measures in the evolving landscape of artificial intelligence.

Unveiling Gemini 2.5 Pro: Google's Revolutionary AI Breakthrough

Discover Gemini 2.5 Pro, Google's groundbreaking AI release that outperforms competitors on benchmarks, is free to use, and is integrated across Google products.

Revolutionizing AI: Abacus AI Deep Agent Pro Unleashed!

Abacus AI's Deep Agent Pro revolutionizes AI tools, offering persistent database support, custom domain deployment, and deep integrations at an affordable $20/month. Experience the future of AI innovation today.

Unveiling the Dangers: AI Regulation and Threats Across Various Fields

AI Uncovered explores the need for AI regulation and the dangers of autonomous weapons, quantum machine learning, deepfake technology, AI-driven cyberattacks, superintelligent AI, human-like robots, AI in bioweapons, AI-enhanced surveillance, and AI-generated misinformation.