Effortless Memory Database Creation: OpenAI File Store Integration

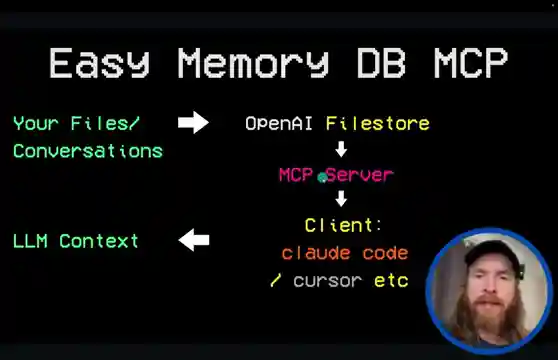

In this demonstration, the All About AI team builds a memory database that uses OpenAI's file store as the backend for an MCP server. They connect the server to Claude Code and Cursor so that important conversations can be uploaded to the vector file store and searched later. The approach is deliberately simple: OpenAI hosts the storage and retrieval, and the MCP server is a thin layer on top.

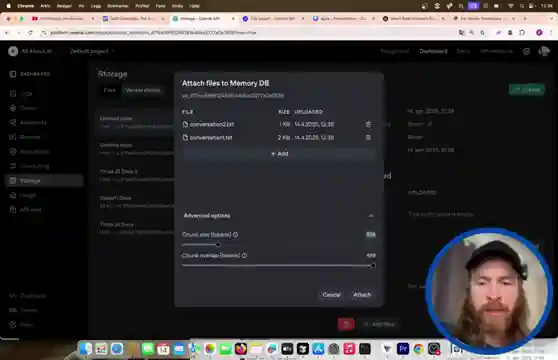

The team starts by preparing two sample conversations to serve as test data. They then create a new vector store in OpenAI's dashboard and upload the conversations to it, giving the memory database its first entries.
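The preparation and upload step can be sketched in Python. This is a minimal sketch, not the code from the video: the helper names `conversation_to_text` and `upload_conversation` are hypothetical, and the `client.vector_stores.files.upload_and_poll` call assumes a recent release of the official `openai` Python SDK (older releases may expose it under `client.beta`). The client is passed in as an argument, so the sketch itself only needs the standard library.

```python
import tempfile
from pathlib import Path


def conversation_to_text(title, turns):
    """Render a conversation as plain text, one 'speaker: message' line per turn."""
    lines = [f"# {title}", ""]
    lines += [f"{speaker}: {message}" for speaker, message in turns]
    return "\n".join(lines) + "\n"


def upload_conversation(client, vector_store_id, title, turns):
    """Write the conversation to a temporary .txt file and upload it.

    Assumption: `client` is an `openai.OpenAI()` instance, or any object that
    offers the same `vector_stores.files.upload_and_poll` method.
    """
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical naming scheme: "Trip Plans" becomes "trip_plans.txt".
        path = Path(tmp) / (title.lower().replace(" ", "_") + ".txt")
        path.write_bytes(conversation_to_text(title, turns).encode("utf-8"))
        with path.open("rb") as fh:
            return client.vector_stores.files.upload_and_poll(
                vector_store_id=vector_store_id,
                file=fh,
            )
```

With a real client, a call might look like `upload_conversation(OpenAI(), "vs_...", "Trip Plans", [("user", "hi")])`, where the vector store ID is the one shown in the dashboard.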

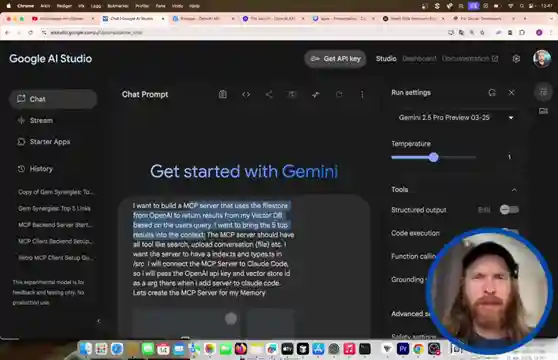

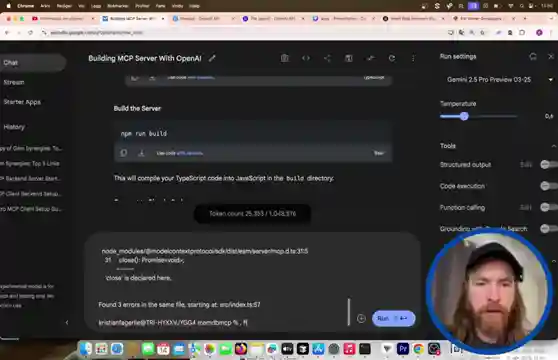

Gemini 2.5 Pro does the heavy lifting for the server itself. Supplying the relevant documentation as context, the team uses the model to build the MCP server, then connects it to Claude Code with an API key and the vector store ID. With that connection in place, the coding assistant can read from and write to the memory store.
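For reference, connecting a locally run MCP server to a client such as Claude Code or Cursor usually comes down to a small JSON entry that names the command to launch and passes the credentials as environment variables. The sketch below builds such an entry. The server name `memory`, the script name `memory_server.py`, and the variable name `VECTOR_STORE_ID` are assumptions for illustration, not values taken from the video.

```python
import json


def build_mcp_config(api_key, vector_store_id,
                     server_name="memory", script="memory_server.py"):
    """Return an `mcpServers` entry in the shape used by JSON MCP config files."""
    if not api_key or not vector_store_id:
        raise ValueError("both an API key and a vector store ID are required")
    return {
        "mcpServers": {
            server_name: {
                "command": "python",
                "args": [script],
                # Hypothetical variable names that the server script would read.
                "env": {
                    "OPENAI_API_KEY": api_key,
                    "VECTOR_STORE_ID": vector_store_id,
                },
            }
        }
    }


if __name__ == "__main__":
    # Placeholder values; real keys should not be committed to version control.
    print(json.dumps(build_mcp_config("sk-...", "vs_..."), indent=2))
```

The exact file name and location for this JSON depend on the client, so check the client's own MCP documentation before relying on it.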

To test the system, the team uploads information to the memory store and searches it from the connected client. A summary of a conversation is written to a file, uploaded, and stored in the vector store. The video closes with the MCP server working end to end: conversations go in as files and come back out through search.
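The search side of that test can be sketched in the same way. The function names below are hypothetical, and the sketch assumes the `vector_stores.search` method of a recent `openai` Python SDK, with each result exposing `filename`, `score`, and a `content` list of text parts. As before, the client is injected so the code runs without any third-party imports.

```python
def search_memory(client, vector_store_id, query, max_results=3):
    """Search the vector store and return (filename, score, text) tuples.

    Assumption: `client` is an `openai.OpenAI()` instance and the response
    object has a `.data` list of results, as described in the lead-in.
    """
    page = client.vector_stores.search(
        vector_store_id=vector_store_id,
        query=query,
        max_num_results=max_results,
    )
    hits = []
    for result in page.data:
        # Each result may carry several text chunks; join them into one string.
        text = "\n".join(part.text for part in result.content)
        hits.append((result.filename, result.score, text))
    return hits


def format_hits(hits):
    """Turn search hits into a short plain-text block an MCP tool could return."""
    if not hits:
        return "No matching memories found."
    return "\n\n".join(
        f"[{filename} | score {score:.2f}]\n{text}"
        for filename, score, text in hits
    )
```

An MCP server would typically expose a function like `search_memory` as a tool, alongside an upload tool, so the connected assistant can call both by name.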

Image copyright YouTube

Watch EASY Memory DB MCP Server Setup in Under 15 Minutes on YouTube

Viewer Reactions for EASY Memory DB MCP Server Setup in Under 15 Minutes

- Appreciation for the work done
- Request for a demonstration or build of an MCP with multi-modal RAG
- Excitement over the return of All About MCP
- Comments on specific timestamps in the video
- Mention of showing the video at a party and making new friends
- Compliments on the content quality and interesting ideas
- Speculation on future capabilities of MCPs controlling real-world objects
- Appreciation for the creativity and effort put into the videos
