DeepSeek R1 Model: Unleashing Advanced AI Capabilities

DeepSeek first showed its reasoning work with the R1-Lite-Preview model. This week it released a whole family of models, including DeepSeek-R1-Zero and a set of smaller distilled models, some of which outperform well-known models such as GPT-4o on certain benchmarks. DeepSeek-R1 is released under the MIT license, which allows users to train other models on its outputs. An accompanying paper describes the training techniques that set the model apart from its competitors.

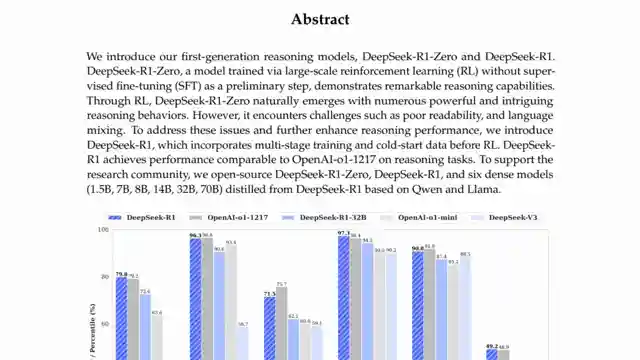

In benchmarks, DeepSeek-R1 performs strongly, even surpassing OpenAI's o1 model in some instances. It builds on the DeepSeek-V3 base model and takes a distinctive approach to post-training. In the demo app at chat.deepseek.com, the model displays its thinking process before answering, and it handles a variety of questions well.
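The visible thinking process can also be separated from the final answer in code. The following is a minimal Python sketch, assuming the raw completion wraps its reasoning in `<think>...</think>` tags; that tag name is an assumption here and should be checked against the output of the model you actually run. `ParsedResponse` and `split_reasoning` are hypothetical names for illustration, not part of any DeepSeek library.

```python
import re
from typing import NamedTuple


class ParsedResponse(NamedTuple):
    reasoning: str  # text found inside the think tags ("" if there are none)
    answer: str     # everything outside the think tags


# Assumption: reasoning is wrapped in <think>...</think>.
# re.DOTALL lets "." match newlines, since reasoning traces span many lines.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> ParsedResponse:
    """Separate the reasoning trace from the final answer in a raw completion."""
    match = _THINK_RE.search(raw)
    if match is None:
        # No reasoning block found: treat the whole completion as the answer.
        return ParsedResponse(reasoning="", answer=raw.strip())
    reasoning = match.group(1).strip()
    # Remove the first think block and keep whatever surrounds it.
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return ParsedResponse(reasoning=reasoning, answer=answer)


if __name__ == "__main__":
    sample = "<think>2 + 2 is 4, and 4 * 3 is 12.</think>\nThe result is 12."
    parsed = split_reasoning(sample)
    print("reasoning:", parsed.reasoning)
    print("answer:", parsed.answer)
```

Keeping the two parts separate makes it easy to show only the answer to end users while logging the reasoning for inspection.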

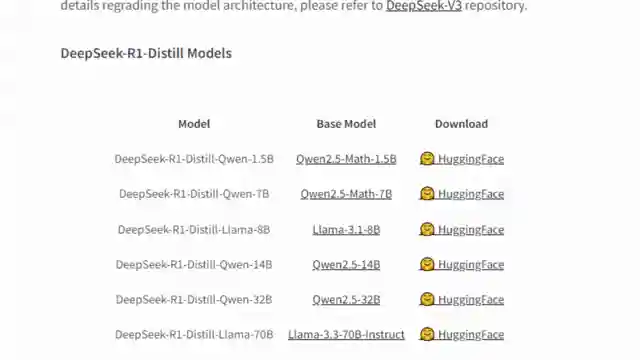

The technical paper traces the model's development. DeepSeek-R1 is trained with reinforcement learning to strengthen its reasoning, as part of a multi-stage pipeline that combines fine-tuning and reinforcement learning. Distillation is then used to create smaller models from DeepSeek-R1, so the larger model's reasoning ability can be transferred to models that are cheaper to run.
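One common way to implement distillation of this kind is to collect the larger model's outputs and use them as supervised fine-tuning data for a smaller model. The sketch below shows only that data-collection step, under stated assumptions: `teacher_generate` is a hypothetical callable standing in for a real call to the teacher model, the length-based filter is an illustrative placeholder rather than the paper's procedure, and the `prompt`/`completion` record layout is just one possible format.

```python
import json
from typing import Callable, Dict, Iterable, List


def build_distillation_records(
    prompts: Iterable[str],
    teacher_generate: Callable[[str], str],
    max_chars: int = 8000,
) -> List[Dict[str, str]]:
    """Collect (prompt, completion) pairs from a teacher for student fine-tuning."""
    records = []
    for prompt in prompts:
        completion = teacher_generate(prompt)
        # Illustrative filter (an assumption): drop empty or overly long outputs.
        if not completion.strip() or len(completion) > max_chars:
            continue
        records.append({"prompt": prompt, "completion": completion})
    return records


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


def fake_teacher(prompt: str) -> str:
    """Stand-in for the teacher model so the sketch runs offline."""
    if "empty" in prompt:
        return ""
    return f"<think>working on: {prompt}</think> done"


if __name__ == "__main__":
    data = build_distillation_records(["add 2 and 3", "empty case"], fake_teacher)
    print(to_jsonl(data))
```

The resulting JSON Lines text can be fed to whichever fine-tuning tool is used for the student model; replacing `fake_teacher` with a real inference call is the only change needed to collect actual teacher outputs.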

Image copyright YouTube

Watch DeepSeekR1 - Full Breakdown on YouTube

Viewer Reactions for DeepSeekR1 - Full Breakdown

- The usefulness and technical details of the DeepSeek R1 model are appreciated
- Discussion on Generalized Advantage Estimation (GAE) and its relation to adaptive control systems
- Mention of the model's multilingual capability and suggestions for testing reasoning
- Comments on the model's performance and capabilities compared to other models
- Questions about the model's distillation procedure and running distilled models
- Praise for the video content and explanation provided
- Concerns and comparisons between open-source and proprietary models
- Questions about the use of supervised fine-tuning and reinforcement learning in AI development
- Comments on political aspects related to China and the U.S.
- Speculation on the impact of OpenAI's methods on other AI companies
