DeepSeek R1: Mastering AI Serving with a 545% Profit Margin

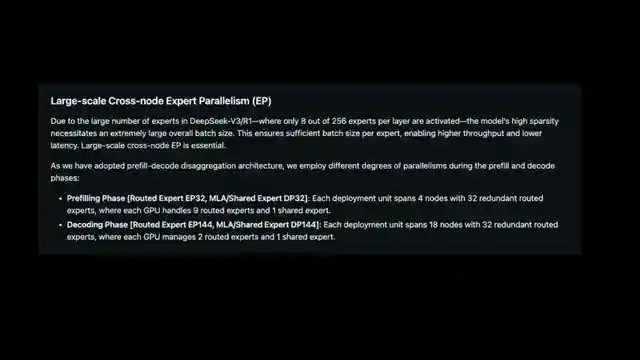

DeepSeek R1, a marvel of AI engineering, boasts an impressive profit margin of 545%, raking in a jaw-dropping $560,000 or so in theoretical daily revenue while shelling out only about $87,000 a day for GPU costs. This isn't your run-of-the-mill dense Transformer; oh no, it's a mixture-of-experts (MoE) model, with 256 experts per layer ensuring specialized and efficient computation. By cleverly implementing expert parallelism across nodes, DeepSeek maximizes GPU usage, handling a massive influx of tokens with ease.
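To make the headline number concrete, here is a minimal sketch of the arithmetic, assuming the margin is measured as profit relative to cost and using the rounded figures of about $560,000 in daily revenue and about $87,000 in daily GPU cost. The function name `profit_margin` is purely illustrative.

```python
def profit_margin(daily_revenue: float, daily_cost: float) -> float:
    """Return profit as a fraction of cost (1.0 means 100%)."""
    if daily_cost <= 0:
        raise ValueError("daily_cost must be positive")
    return (daily_revenue - daily_cost) / daily_cost


# Rounded figures in USD per day; the exact published values differ slightly.
revenue = 560_000
gpu_cost = 87_000

margin = profit_margin(revenue, gpu_cost)
print(f"margin relative to cost: {margin:.1%}")
```

With these rounded inputs the result lands at roughly 544%, a hair under the quoted 545%, which is what you would expect from rounding both figures.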

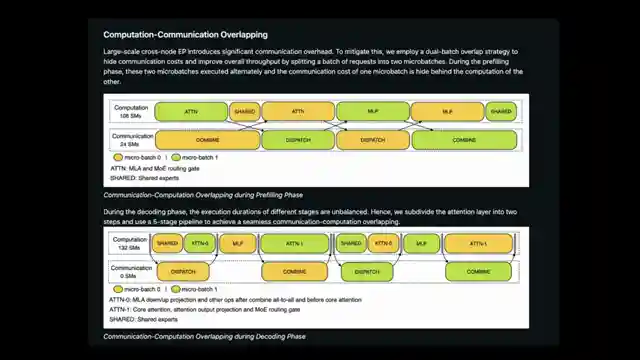

But wait, there's more! DeepSeek doesn't stop there. The team has tuned the system with dual micro-batching during the prefill stage and a five-stage pipeline during decoding, keeping those GPUs busy round the clock. Load balancing? They've got it covered with prefill, decode, and expert-parallel load balancers, ensuring each GPU pulls its weight without breaking a sweat. Picture this: a single node with eight GPUs sustaining a whopping 73,740 input tokens per second during prefill and 14,800 output tokens per second during decoding. Multiply that by hundreds of nodes, and you've got yourself a powerhouse of AI inference.
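The idea behind an expert-parallel load balancer can be illustrated with a small sketch. This is not DeepSeek's actual balancer; it is a generic greedy heuristic (heaviest expert first, onto the least-loaded GPU), and the names `balance_experts`, `expert_loads`, and the synthetic load distribution are assumptions made for illustration.

```python
import heapq
import random


def balance_experts(expert_loads, num_gpus):
    """Greedily assign experts to GPUs, heaviest expert first."""
    # Min-heap of (current_load, gpu_index): the lightest GPU pops first.
    heap = [(0.0, gpu) for gpu in range(num_gpus)]
    heapq.heapify(heap)
    placement = {gpu: [] for gpu in range(num_gpus)}

    by_load = sorted(enumerate(expert_loads), key=lambda p: p[1], reverse=True)
    for expert, load in by_load:
        gpu_load, gpu = heapq.heappop(heap)
        placement[gpu].append(expert)
        heapq.heappush(heap, (gpu_load + load, gpu))

    totals = {
        gpu: sum(expert_loads[e] for e in experts)
        for gpu, experts in placement.items()
    }
    return placement, totals


# Synthetic, skewed per-expert token counts for 256 experts (hypothetical data).
rng = random.Random(0)
expert_loads = [rng.paretovariate(1.5) for _ in range(256)]

placement, totals = balance_experts(expert_loads, num_gpus=8)
spread = max(totals.values()) - min(totals.values())
print(f"load spread across GPUs: {spread:.3f}")
```

A useful property of this heuristic is that the gap between the busiest and the idlest GPU never exceeds the load of the single heaviest expert, which is why a few very hot experts are the main thing a production balancer has to handle separately.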

In the world of DeepSeek R1, overlapping communication with computation is key. By skillfully coordinating micro-batches and pipeline stages, the system hides network transfers behind useful work and keeps those GPUs churning away. And let's not forget the strategic use of numeric precision: FP8 for matrix multiplication and BF16 for core MLA computations, striking a balance between speed and precision. With a peak of 278 GPU nodes and a daily cost of just $87,000 or so, DeepSeek R1 is a masterclass in AI serving, leaving Western companies green with envy. It's not just AI; it's art, it's science, it's DeepSeek R1.
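Why splitting a batch into two micro-batches helps can be seen in a toy timing model. The sketch below assumes each layer needs a compute step followed by a communication step, that compute and network are separate resources that each handle one task at a time, and that splitting a batch divides both costs evenly with no overhead. These are simplifying assumptions, and `pipeline_time` is a hypothetical helper, not part of any DeepSeek code.

```python
def pipeline_time(num_layers, compute, comm, num_microbatches):
    """Total time to push one batch through all layers in a toy model."""
    # Per-micro-batch cost of one layer's compute and communication.
    c = compute / num_microbatches
    m = comm / num_microbatches

    compute_free = 0.0  # time at which the compute units become idle
    network_free = 0.0  # time at which the network becomes idle
    ready = [0.0] * num_microbatches  # when each micro-batch can start its next layer

    for _ in range(num_layers):
        for mb in range(num_microbatches):
            # Compute starts once the micro-batch and the compute units are free.
            start = max(ready[mb], compute_free)
            compute_free = start + c
            # Communication starts after this compute step and any earlier transfer.
            start_comm = max(compute_free, network_free)
            network_free = start_comm + m
            ready[mb] = network_free

    return max(ready)


sequential = pipeline_time(num_layers=4, compute=1.0, comm=1.0, num_microbatches=1)
overlapped = pipeline_time(num_layers=4, compute=1.0, comm=1.0, num_microbatches=2)
print(f"one batch: {sequential}, two micro-batches: {overlapped}")
```

In this idealized setting, while one micro-batch is communicating the other is computing, so total time drops from 8.0 to 4.5 time units. Real systems see smaller gains because compute and communication rarely take exactly the same time and splitting a batch is not free.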

Image copyright Youtube

Watch DeepSeek R1 Official Profit Margin is pure insanity on Youtube

Viewer Reactions for DeepSeek R1 Official Profit Margin is pure insanity

Breakdown of the $87,000 costs per day

Request for a DeepSeek Coder v3 with a small footprint

Question about the costs of renting GPUs or operating owned GPUs

Comparison between DeepSeek and OpenAI

Mention of DeepSeek being the best AI team in the world

Concern about server busy issues

Comment on ChatGPT not running profitably due to high tech salaries

Appreciation for the economic information provided in the video

Typo in the System Design link

Appreciation for the overview provided in the video
