Building a Custom MCP Client: Enhancing User Experience with Voice Responses

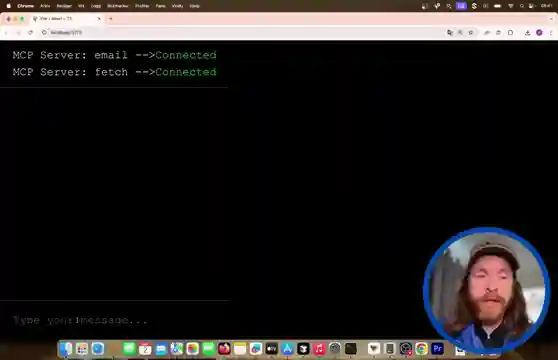

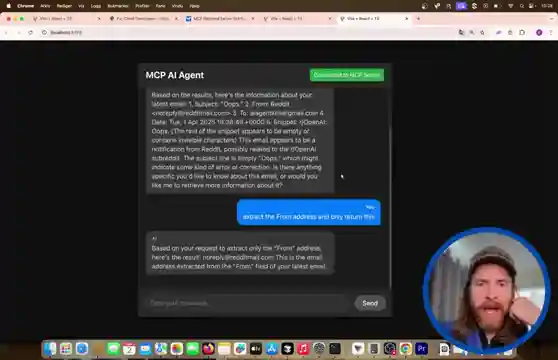

In this episode, the All About AI team sets out to build their own MCP client. With the servers running and connections established, they use the client to fetch emails and pull information from URLs. They then send an email to Chris about vibe coding, which sets up the rest of the build.

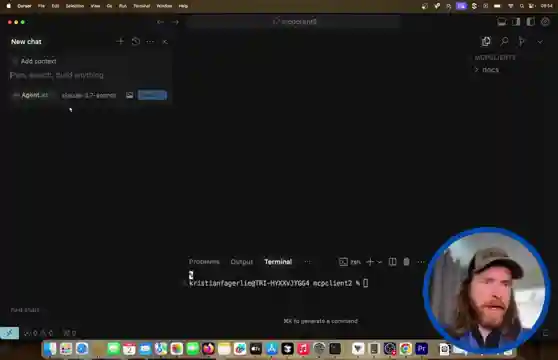

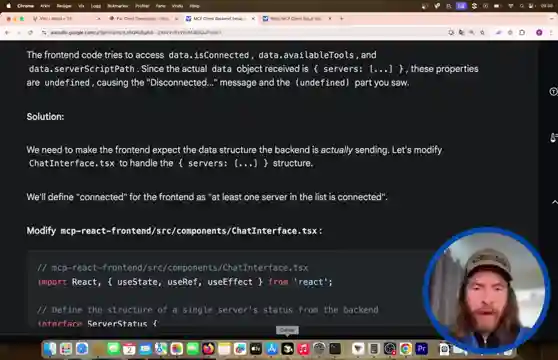

The team lays out the project structure and works through backend server initialization. After a few minor setbacks, they get both the backend and frontend servers running together. They then modify the chat interface so it can handle more complex server structures, which makes the client more interactive to use.

Next, the team adds contextual memory, so the client can take earlier turns into account and handle follow-up questions. They then integrate the OpenAI text-to-speech model, giving the client spoken responses. With user experience in mind, they also shorten the responses so that the client delivers concise information rather than long output.
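The contextual memory described here can be illustrated with a minimal sketch. It assumes the client keeps a bounded list of chat messages and passes the full history to the model on every turn; the video's actual implementation is not shown in this summary. The names `ConversationMemory`, `chat_turn`, and `echo_model` are hypothetical, and `echo_model` is a stand-in for a real model call.

```python
from collections import deque
from typing import Callable, Deque, Dict, List

Message = Dict[str, str]


class ConversationMemory:
    """Hypothetical bounded store for chat history (oldest turns drop first)."""

    def __init__(self, max_messages: int = 20) -> None:
        self._messages: Deque[Message] = deque(maxlen=max_messages)

    def add(self, role: str, content: str) -> None:
        self._messages.append({"role": role, "content": content})

    def history(self) -> List[Message]:
        # Return a copy so callers cannot mutate the stored history.
        return list(self._messages)


def chat_turn(
    memory: ConversationMemory,
    user_text: str,
    model: Callable[[List[Message]], str],
) -> str:
    """Record the user message, call the model with the full history,
    then record the reply so the next turn can refer back to it."""
    memory.add("user", user_text)
    reply = model(memory.history())
    memory.add("assistant", reply)
    return reply


def echo_model(messages: List[Message]) -> str:
    """Stand-in for a real LLM call (an assumption for this sketch):
    it only reports how much context it received."""
    user_turns = [m for m in messages if m["role"] == "user"]
    return f"Seen {len(user_turns)} user message(s); latest: {messages[-1]['content']}"


if __name__ == "__main__":
    memory = ConversationMemory(max_messages=4)
    print(chat_turn(memory, "Fetch my latest email", echo_model))
    print(chat_turn(memory, "Summarize it", echo_model))
```

Because the second call receives the first exchange as part of its input, a real model in place of `echo_model` could resolve "it" in the follow-up question. Capping the history is one simple way to keep request size, and therefore cost, under control.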

To close, the team demonstrates the finished client by sending emails and receiving short spoken summaries of the results. The build shows what is possible with a personalized local client, and the team points to customization and cost control as the main reasons viewers might want to build their own.

Image copyright YouTube

Watch "Build a MCP Client with Gemini 2.5 Pro: Here's How" on YouTube

Viewer Reactions for "Build a MCP Client with Gemini 2.5 Pro: Here's How"

Gemini 2.5 added to Cursor

Comparison with other lightweight alternatives suggested for future videos

Inquiry about MCP server capability with swagger file for LLM API

Mention of paid version of Cursor for API keys

Appreciation for the varied and fascinating videos

Comment in German about favorite snack position

Viewer expressing long-time support and appreciation for content variety
