AI Frontend Challenges: Claude 3.7 Sonnet vs. o3-mini-high vs. o1 - A Comparative Analysis

- Authors

- Published on

In a thrilling display of technological prowess, the team put Claude through a series of frontend simulation challenges. The first task, an animated weather card, showcased Claude's ability to bring elements like wind, rain, sun, and snow to life in remarkable detail. A side-by-side comparison with ChatGPT's o3-mini-high revealed a wide gap in output quality, akin to comparing a kindergartner's drawing with the work of a seasoned frontend developer. Next, Claude was tasked with revamping a Sudoku game and precisely delivered multiple difficulty levels, a timer, and hints.

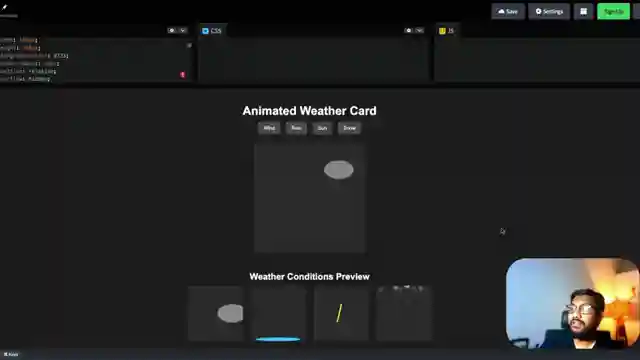

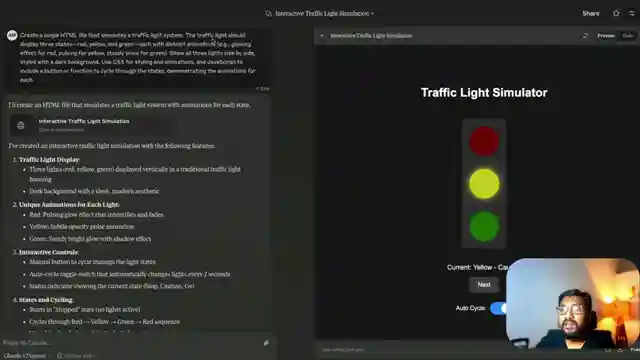

The next challenge, a traffic light simulator, required Claude to render red, yellow, and green states with distinct animations and automatic cycling. Claude followed the instructions faithfully, but its implementation stayed basic and added no smarter control logic, so the simulation felt thin. The final trial was an analog clock that required manual time adjustment and a toggle between dark and light modes. Claude's output was visually stunning, yet it failed to reflect time changes accurately, a crucial requirement for any clock simulation.
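The traffic light task can be modeled as a small finite state machine. The following minimal TypeScript sketch is not the code from the video. The names `nextState`, `stateAt`, and `autoCycle`, the green → yellow → red order, and the durations are all illustrative assumptions.

```typescript
// Hypothetical sketch of a traffic light as a finite state machine.
// State names match the challenge; order and durations are assumptions.
type LightState = "green" | "yellow" | "red";

// Assumed order: green -> yellow -> red -> green.
const NEXT: Record<LightState, LightState> = {
  green: "yellow",
  yellow: "red",
  red: "green",
};

// Illustrative durations in milliseconds (not taken from the video).
const DURATION_MS: Record<LightState, number> = {
  green: 5000,
  yellow: 2000,
  red: 5000,
};

function nextState(state: LightState): LightState {
  return NEXT[state];
}

// Which light is lit `elapsedMs` after the cycle started in `start`.
function stateAt(start: LightState, elapsedMs: number): LightState {
  const cycleMs = DURATION_MS.green + DURATION_MS.yellow + DURATION_MS.red;
  // Normalize into [0, cycleMs) so long-running timers wrap around.
  let remaining = ((elapsedMs % cycleMs) + cycleMs) % cycleMs;
  let state = start;
  while (remaining >= DURATION_MS[state]) {
    remaining -= DURATION_MS[state];
    state = nextState(state);
  }
  return state;
}

// Auto-cycling driver: calls `render` on every state change and returns
// a function that stops the cycle.
function autoCycle(render: (s: LightState) => void, start: LightState = "red"): () => void {
  let state = start;
  let timer: ReturnType<typeof setTimeout> | undefined;
  const tick = (): void => {
    render(state);
    timer = setTimeout(() => {
      state = nextState(state);
      tick();
    }, DURATION_MS[state]);
  };
  tick();
  return () => clearTimeout(timer);
}
```

In a page, `render` would swap CSS classes or start an animation for the active lamp, for example `const stop = autoCycle((s) => console.log(s));`. Keeping the transition table separate from the timer makes the cycle order easy to check and test on its own.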

The comparison with OpenAI o1, OpenAI's flagship model, exposed Claude's Achilles' heel: time accuracy in the clock simulation. Despite this setback, fans of the channel found the experiments riveting, and the video hints at future showdowns between Claude 3.7 Sonnet and other large language models. These trials shed light on the contrasting capabilities of the latest models from Anthropic and OpenAI, and the stage is set for more experiments in the competitive realm of AI simulation challenges.
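The clock's time-accuracy problem is easier to see once you write down how the hand angles follow from the time. The following minimal TypeScript sketch is not code from the video. `handAngles` and `AdjustableClock` are hypothetical names. Modeling manual adjustment as an offset from the system clock is one design choice among several.

```typescript
// Hypothetical sketch: analog clock hand angles in degrees, clockwise from 12.
interface HandAngles {
  hour: number;
  minute: number;
  second: number;
}

function handAngles(date: Date): HandAngles {
  const h = date.getHours() % 12;
  const m = date.getMinutes();
  const s = date.getSeconds();
  return {
    hour: h * 30 + m / 2 + s / 120, // 30° per hour, 0.5° per minute
    minute: m * 6 + s / 10, // 6° per minute, 0.1° per second
    second: s * 6, // 6° per second
  };
}

// Manual adjustment stored as an offset from a time source, so the
// adjusted clock keeps ticking instead of freezing at the set time.
class AdjustableClock {
  private offsetMs = 0;
  private readonly now: () => number;

  constructor(now: () => number = () => Date.now()) {
    this.now = now;
  }

  adjustMinutes(delta: number): void {
    this.offsetMs += delta * 60_000;
  }

  current(): Date {
    return new Date(this.now() + this.offsetMs);
  }
}
```

A render loop would call `handAngles(clock.current())` on each frame and apply the angles as CSS rotations. Forgetting the minute-to-hour coupling, or overwriting the time source on each adjustment, are two common ways a clock can look right yet show the wrong time.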

Image copyright Youtube

Watch "Did Claude 3.7 Sonnet win it?" on YouTube

Viewer Reactions for "Did Claude 3.7 Sonnet win it?"

- The cycle is backwards in the video
- Comment on the lighting quality and on-camera presence
- Mention of using AI for coding
- Comparison between different AI models for coding
- Mention of specific AI models such as Claude and Grok 3
- Request to test Claude 4
- Question about building an app with tools like Claude or GPT for code generation
- Positive feedback on the performance improvement with AI
- Mention of Google's flagship model Gemini 2.0 Flash Experimental
- Humorous comment about the thumbnail
